NOV 3, 2025

Beyond Text Prompts: How Cartwheel Built Pose-Faithful 3D Generation with Gemini Flash 2.5

Generative models have created new possibilities for artists and designers. However, for professional creators, translating a specific creative vision into a generated image remains a significant challenge. Text-only prompting can often feel like a "slot machine," making it difficult to achieve precise control over a character's pose, camera angle, and composition.

Cartwheel, a platform for 3D AI-native game and media creation, is addressing this problem by building a novel solution on top of Google's advanced models, in this case Gemini 2.5 Flash Image Nano Banana. Their "Pose Mode" feature within Cartwheel Studio moves beyond simple text-to-image generation by incorporating 3D-native controls, giving creators direct, iterative control over their output.

The challenge: bridging the gap between intent and output

In professional creative workflows, precision is essential. An artist, advertiser, or game designer often needs to create a character in a specific pose or from a specific angle to fit a storyboard or campaign brief.

"At a high level, image generators have been hard to control," said Jonathan Jarvis, co-founder of Cartwheel. "It's hard to achieve a vision that you actually have. We've always wanted to let you just go in and directly manipulate the character."

This requirement for direct manipulation led Cartwheel to develop a multimodal pipeline that integrates 3D posing, text prompting, and multiple AI models to work in concert.

The solution: a multi-model pipeline for pose-faithful generation

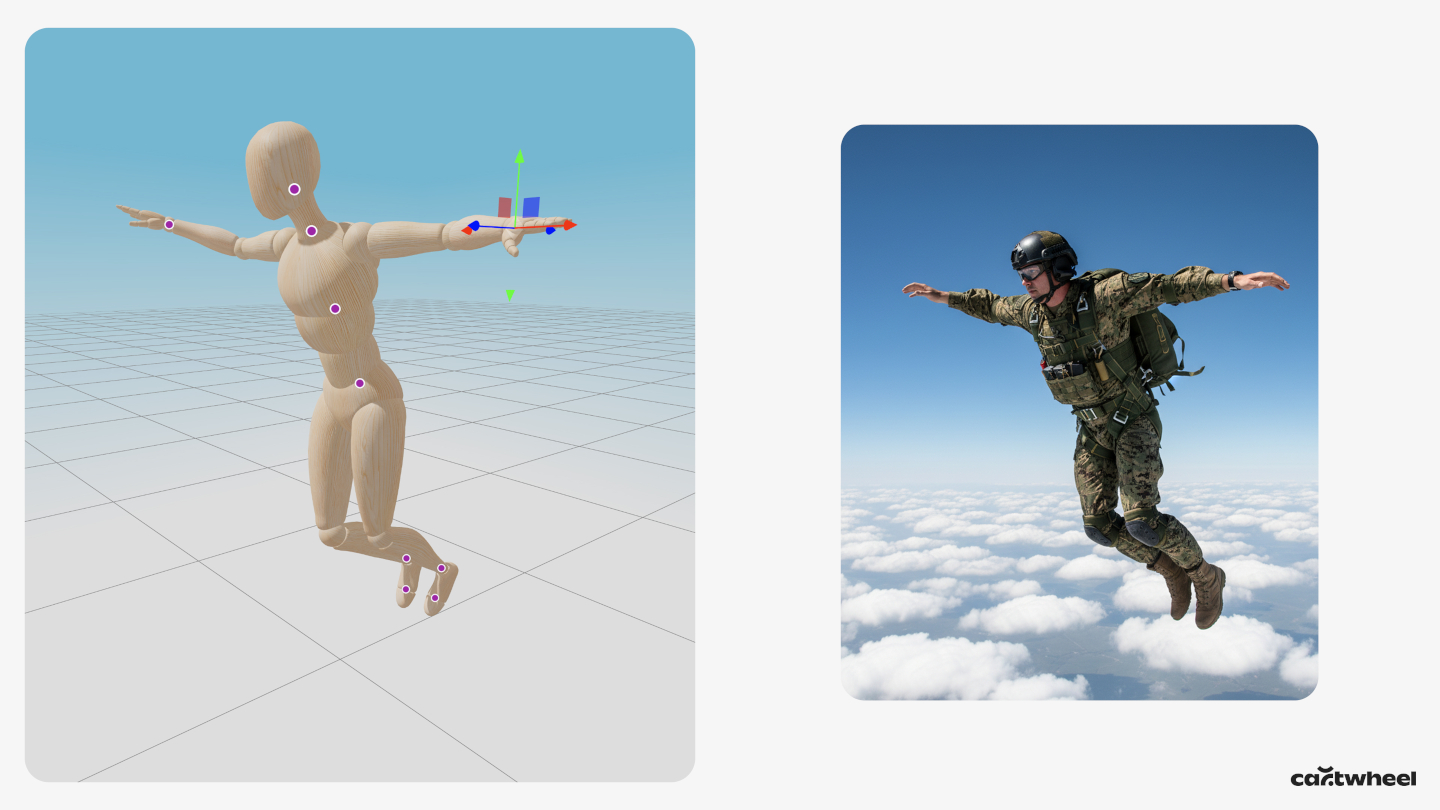

Instead of relying solely on text, Cartwheel's Pose Mode presents the user with a 3D mannequin. The user can directly click and drag the mannequin's limbs to create a specific pose and adjust the virtual camera to any angle. This 3D scene then becomes a primary input for the generative process.

The technical workflow is as follows:

- Pose labeling with Gemini 2.5 Flash. First, a screenshot of the posed 3D mannequin is sent to Gemini 2.5 Flash. Cartwheel uses 2.5 Flash for this step, as its speed is ideal for the low-latency requirement of a real-time creative tool. The model's task is to return a simple text label describing the pose, such as "a character in a jumping pose" or "a character saluting."

- Multimodal prompt assembly. This 2.5 Flash-generated pose label is then automatically combined with the user's own descriptive text prompt (e.g., "a robot in a field of flowers").

- Conditioned image generation. Finally, this combined text prompt is sent to a high-fidelity, pose-faithful image model, Gemini 2.5 Flash Image, along with the original screenshot of the 3D pose. This multimodal prompt—which includes both the image of the pose and the detailed text description—conditions Gemini 2.5 Flash Image to generate an image that strictly adheres to the pose and camera angle, while applying the artistic style, character, and scene details from the text.

This chaining of models—using 2.5 Flash for visual analysis and labeling, and 2.5 Flash Image for final, conditioned rendering—allows Cartwheel to offer a unique workflow that combines the intuitive control of 3D software with the creative power of generative AI.

The results: unlocking character consistency from any angle

This approach has proven effective at generating images that were previously difficult to create. "Rendering characters from any angle but the front did not work in any other model," noted Andrew Carr, co-founder of Cartwheel. "As soon as you rotated the camera, it fell apart."

Because most image models are trained on data that overwhelmingly features characters from the front, they struggle to create less common compositions, such as high-angle shots or views from behind. By providing the pose as a direct visual input, Cartwheel's tool bypasses this training data bias, allowing an artist to generate consistent characters from any angle they choose.

This workflow significantly accelerates the creative process. A task that might have previously required hours of iterative prompting or manual compositing by a 3D artist can now be accomplished in seconds.

What's next: from static images to generative video

Cartwheel is already planning the next steps for this technology. The team is experimenting with integrating a library of 150,000 pre-categorized poses that users can search and refine, further speeding up the workflow.

The long-term vision is to extend this pose-to-pixel pipeline into motion. The same 3D pose and rendered image could serve as the start frame for a video-to-video model, such as Veo. This would allow a creator to pose a character, render it in any style, and then animate it using a text prompt, creating a seamless workflow from 3D posing to a final, stylized animation.

By building on top of multimodal models like those in the Gemini family, Cartwheel is demonstrating how developers can create sophisticated tools that provide artists with the control and consistency they need, moving generative AI from a tool of chance to one of precise creative intent.