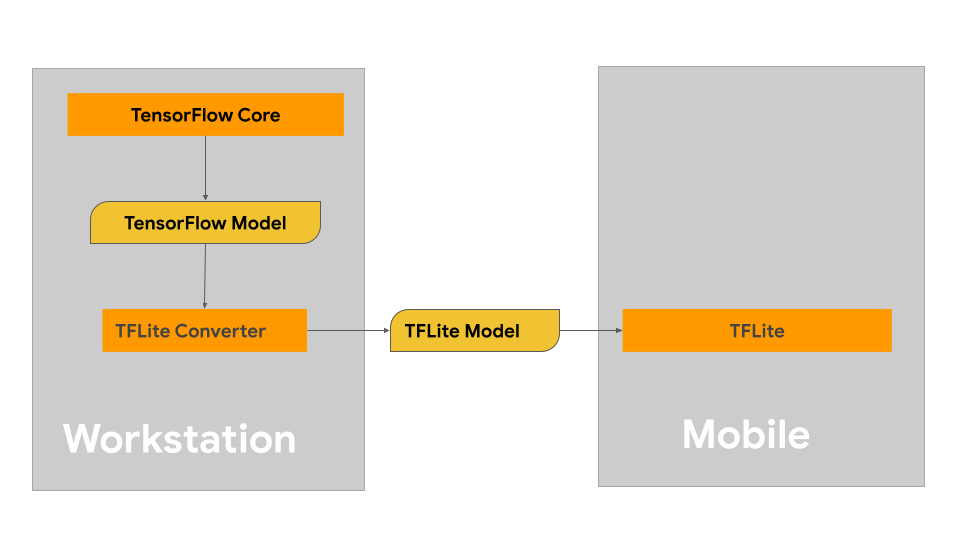

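This page provides guidance on building TensorFlow models that you intend to convert to the LiteRT model format. The machine learning (ML) models you use with LiteRT are originally built and trained with the TensorFlow core libraries and tools. Once you have built a model with TensorFlow Core, you can convert it to a smaller, more efficient ML model format called a LiteRT model.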
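For orientation, here is a minimal sketch of that conversion step, assuming TensorFlow 2.x is installed. `TinyModel` is a hypothetical stand-in for your trained model. The sketch exports it as a SavedModel and converts it with `tf.lite.TFLiteConverter.from_saved_model`, which returns the LiteRT model as bytes.

```python
import tempfile

import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical stand-in for a trained model: y = x @ w + b."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 2]))
        self.b = tf.Variable(tf.zeros([2]))

    # A fixed input signature lets TensorFlow trace a single concrete graph.
    @tf.function(input_signature=[tf.TensorSpec([None, 4], tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w) + self.b


model = TinyModel()

# Export as a SavedModel, using __call__ as the serving signature.
export_dir = tempfile.mkdtemp()
tf.saved_model.save(model, export_dir,
                    signatures=model.__call__.get_concrete_function())

# Convert the SavedModel into a LiteRT flatbuffer (returned as bytes).
converter = tf.lite.TFLiteConverter.from_saved_model(export_dir)
tflite_model = converter.convert()

# Save the .tflite file so it can be bundled with a mobile or edge app.
with open("model.tflite", "wb") as f:
    f.write(tflite_model)
```

In a real project you would replace `TinyModel` with your own trained model and keep the rest of the flow unchanged.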

If you already have a model to convert, see the Model conversion overview page for guidance on converting it.

Build a model

If you are building a custom model for a specific use case, start by developing and training a TensorFlow model, or by extending an existing one.

Model design constraints

Before you start developing your model, learn the constraints that apply to LiteRT models and keep them in mind while you build:

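- Limited compute capabilities: Compared to servers equipped with multiple CPUs, large amounts of memory, and specialized processors such as GPUs and TPUs, mobile and edge devices have limited compute capabilities. Although compute power and support for specialized hardware keep improving, the models and data these devices can process efficiently are still comparatively limited.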

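- Model size: The overall complexity of a model, including its data pre-processing logic and the number of layers, increases the model's in-memory size. A large model may run unacceptably slowly, or may not fit in the memory available on a mobile or edge device at all.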

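- Data size: The size of the input data that an ML model can process efficiently is limited on mobile and edge devices. Models that rely on large data libraries, such as language, image, or video clip libraries, may not fit on these devices and may require off-device storage and access solutions.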

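- Supported TensorFlow operations: LiteRT runtime environments support only a subset of the ML model operations available in regular TensorFlow models. As you develop a model for use with LiteRT, track your model's compatibility with the capabilities of the LiteRT runtime environments.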
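To get a feel for the model-size constraint, the weight storage of a fully connected network can be estimated as parameter count times bytes per parameter. The plain-Python sketch below assumes a hypothetical 784-512-256-10 network. It counts only weights and biases and ignores activations, runtime buffers, and file-format overhead, so actual memory use will be higher.

```python
def dense_params(layer_sizes):
    """Count weights + biases for consecutive fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Storage cost per parameter for common numeric types.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

layers = [784, 512, 256, 10]  # hypothetical layer widths
params = dense_params(layers)
print(f"{params} parameters")  # 535818 parameters
for dtype, nbytes in BYTES_PER_PARAM.items():
    print(f"{dtype}: {params * nbytes / 1024**2:.2f} MiB")
```

The same arithmetic shows why storing weights as 8-bit integers instead of 32-bit floats cuts weight storage by roughly a factor of four.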

For more information about building effective, compatible, high-performance models for LiteRT, see Performance best practices.

Model development

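To build a LiteRT model, you first need to build a model with the TensorFlow core libraries. The TensorFlow core libraries are lower-level libraries that provide APIs to build, train, and deploy ML models.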

TensorFlow offers two paths for this: you can develop your own custom model code, or you can start from a model implementation available in the TensorFlow Model Garden.

Model Garden

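The TensorFlow Model Garden provides implementations of many state-of-the-art machine learning (ML) models for vision and natural language processing (NLP). It also includes workflow tools that let you quickly configure and run these models on standard datasets. The ML models in the Model Garden include full code, so you can test, train, or retrain them with your own datasets.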

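Whether you want to benchmark the performance of a well-known model, verify the results of recently published research, or extend an existing model, the Model Garden can help you reach your ML goals.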

Custom models

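If your use case is not covered by the models in the Model Garden, you can use a high-level library such as Keras to develop custom training code. To learn the fundamentals of TensorFlow, see the TensorFlow guide. To get started with examples, see the TensorFlow tutorials overview, which contains pointers to tutorials ranging from beginner to expert level.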
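As a minimal sketch of custom training code with Keras, the following defines and trains a small binary classifier on synthetic data. The data, layer sizes, and training settings are assumptions for illustration only. The layers used (Dense with ReLU and sigmoid activations) are ordinary operations that LiteRT supports as built-ins, which keeps the model easy to convert later.

```python
import numpy as np
import tensorflow as tf

# Synthetic data standing in for a real dataset (illustrative assumption).
rng = np.random.default_rng(0)
x_train = rng.random((256, 8), dtype=np.float32)
y_train = (x_train.sum(axis=1) > 4).astype(np.float32).reshape(-1, 1)

# A small feed-forward classifier built from widely supported layers.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy",
              metrics=["accuracy"])

history = model.fit(x_train, y_train, epochs=5, batch_size=32, verbose=0)
print("final training accuracy:", history.history["accuracy"][-1])
```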

Model evaluation

Once you have developed your model, evaluate its performance and test it on end-user devices. TensorFlow provides a few ways to do this:

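- TensorBoard is a tool that provides the measurements and visualizations needed during the machine learning workflow. It lets you track experiment metrics such as loss and accuracy, visualize the model graph, project embeddings to a lower-dimensional space, and more.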

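- Benchmarking tools are available for each supported platform, such as the Android benchmark app and the iOS benchmark app. Use these tools to measure important performance metrics and compute statistics on them.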
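The benchmark apps run on the target device. Before that step, you could do a quick desktop sanity check in Python with `tf.lite.Interpreter`. This is an informal stand-in, not the benchmark tools themselves. The sketch converts a hypothetical `TinyModel`, checks the LiteRT output against the original TensorFlow model, and reports rough latency statistics. Desktop timings will not match on-device numbers.

```python
import time

import numpy as np
import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical model used only to exercise the interpreter."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 1]))

    @tf.function(input_signature=[tf.TensorSpec([1, 4], tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w)


model = TinyModel()
tflite_model = tf.lite.TFLiteConverter.from_concrete_functions(
    [model.__call__.get_concrete_function()], model).convert()

# Load the converted model into the Python LiteRT interpreter.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
inp = interpreter.get_input_details()[0]
out = interpreter.get_output_details()[0]

x = np.random.rand(1, 4).astype(np.float32)
latencies_ms = []
for _ in range(50):
    interpreter.set_tensor(inp["index"], x)
    start = time.perf_counter()
    interpreter.invoke()  # time only the inference call
    latencies_ms.append((time.perf_counter() - start) * 1000.0)
result = interpreter.get_tensor(out["index"])

# Compare against the original TensorFlow model to catch conversion issues.
expected = model(tf.constant(x)).numpy()
print(f"mean {np.mean(latencies_ms):.3f} ms, "
      f"p90 {np.percentile(latencies_ms, 90):.3f} ms, "
      f"max abs diff {np.max(np.abs(result - expected)):.2e}")
```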

Model optimization

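Because LiteRT (formerly TensorFlow Lite) models run under resource constraints, model optimization can help ensure that your model performs well and uses fewer compute resources. ML model performance is usually a trade-off between inference size and speed on one side and accuracy on the other. LiteRT currently supports optimization through quantization, pruning, and clustering. For more details on these techniques, see the Model optimization topic. TensorFlow also provides a Model optimization toolkit with an API that implements these techniques.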
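Post-training dynamic range quantization is one of the simplest of these techniques to try. Setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` without a representative dataset stores eligible weights as 8-bit integers. The sketch below converts a hypothetical single-layer module (`DenseBlock`) twice, with and without this option, and compares file sizes. Pruning and clustering require the Model optimization toolkit and are not shown. Check accuracy on your own evaluation data after quantizing.

```python
import tensorflow as tf


class DenseBlock(tf.Module):
    """Hypothetical 256x256 fully connected layer, for illustration."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([256, 256]))
        self.b = tf.Variable(tf.zeros([256]))

    @tf.function(input_signature=[tf.TensorSpec([1, 256], tf.float32)])
    def __call__(self, x):
        return tf.nn.relu(tf.matmul(x, self.w) + self.b)


model = DenseBlock()
concrete_fn = model.__call__.get_concrete_function()

# Baseline: plain float32 conversion.
float_model = tf.lite.TFLiteConverter.from_concrete_functions(
    [concrete_fn], model).convert()

# Dynamic range quantization: weights are stored as int8.
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
quant_model = converter.convert()

print(f"float32: {len(float_model)} bytes, quantized: {len(quant_model)} bytes")
```

With 65,536 weights, the float32 model needs about 256 KiB for weights alone, so the quantized model should come out at roughly a quarter of that size plus a small fixed overhead.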