AI for every developer

Unlock AI models to build innovative apps and transform development workflows with tools across platforms.

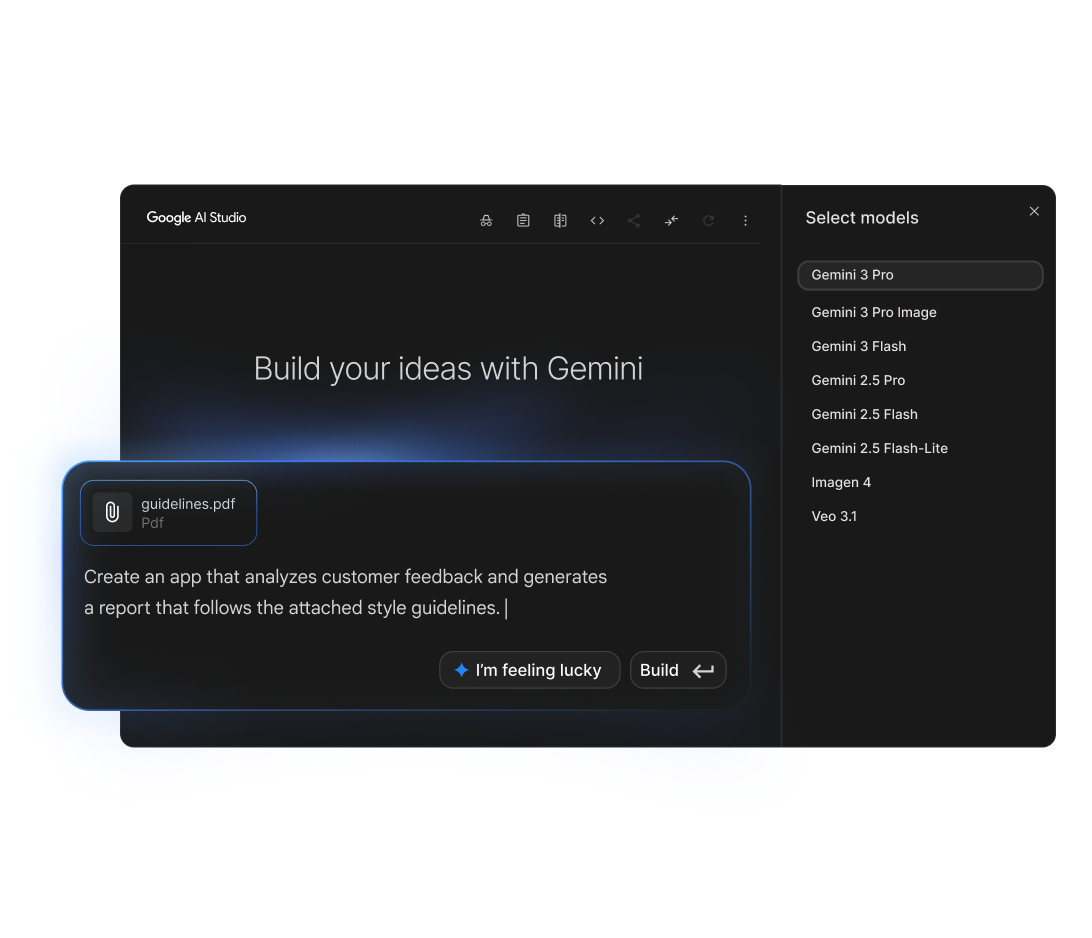

Explore models in Google AI Studio

Start building

Get started building with cutting-edge AI models and tools

Integrate Google AI models with an API key

Build with cutting-edge AI models from Google DeepMind, such as Gemini, Imagen, and Veo.

View Gemini API docs
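As a rough illustration of what integrating with an API key can look like, here is a minimal sketch that calls the Gemini API's REST `generateContent` endpoint using only the Python standard library. The model name `gemini-2.0-flash`, the `GEMINI_API_KEY` environment variable, and the helper names `build_request` and `generate_text` are assumptions chosen for this example; check the Gemini API docs for the models and options available to you.

```python
import json
import os
import urllib.request

# Public REST root for the Gemini API (v1beta).
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_request(prompt, api_key, model="gemini-2.0-flash"):
    """Build (but do not send) a generateContent request.

    The default model name is an assumption for this sketch.
    """
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # the key travels in a header, not the URL
        },
        method="POST",
    )


def generate_text(prompt, api_key, model="gemini-2.0-flash"):
    """Send the request and return the text of the first candidate."""
    request = build_request(prompt, api_key, model)
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["candidates"][0]["content"]["parts"][0]["text"]


if __name__ == "__main__":
    # GEMINI_API_KEY is a hypothetical variable name; keep keys out of source code.
    key = os.environ.get("GEMINI_API_KEY")
    if key:
        print(generate_text("Explain what an API key is in one sentence.", key))
    else:
        print("Set GEMINI_API_KEY to send a live request.")
```

Separating `build_request` from `generate_text` keeps the request shape easy to inspect without a network call. In a real app, an official SDK would likely be a better fit than hand-built HTTP requests.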

Own your AI with Gemma open models

Build custom AI solutions and retain complete control. Tailor Gemma models, built from the same research and technology as Gemini, with your own data.

Build with Gemma

Run AI models on-device with Google AI Edge

Build and deploy edge ML solutions across mobile, web, and embedded applications, from simple APIs to custom pipelines, with support across all major frameworks.

Explore Google AI Edge

Build with AI responsibly

Build trusted and secure AI with guidance for responsible design, development, and deployment of models and applications.

Build Responsible AI

Boost productivity with AI code assistance

Agents

Gemini empowers you to be more productive by acting as your coding agent. It can plan and execute tasks, freeing you to focus on what matters most.

Analysis and insights

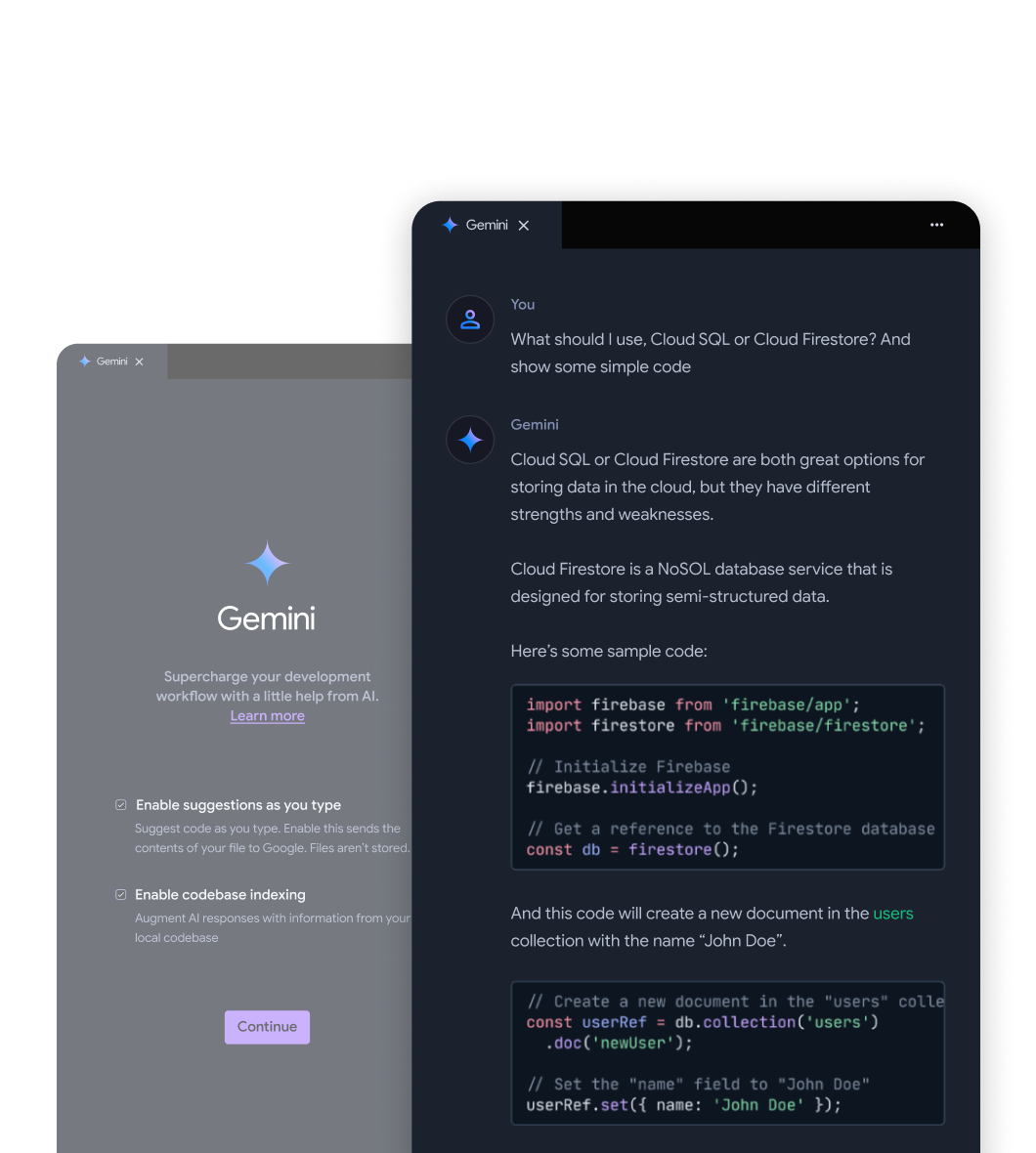

Improve code quality and fix issues with code analysis. Get insights, suggestions, and code snippets within your existing development environment.

Code generation

Gemini adds AI-powered code completion and uses natural language understanding to generate entire code blocks from your descriptions.

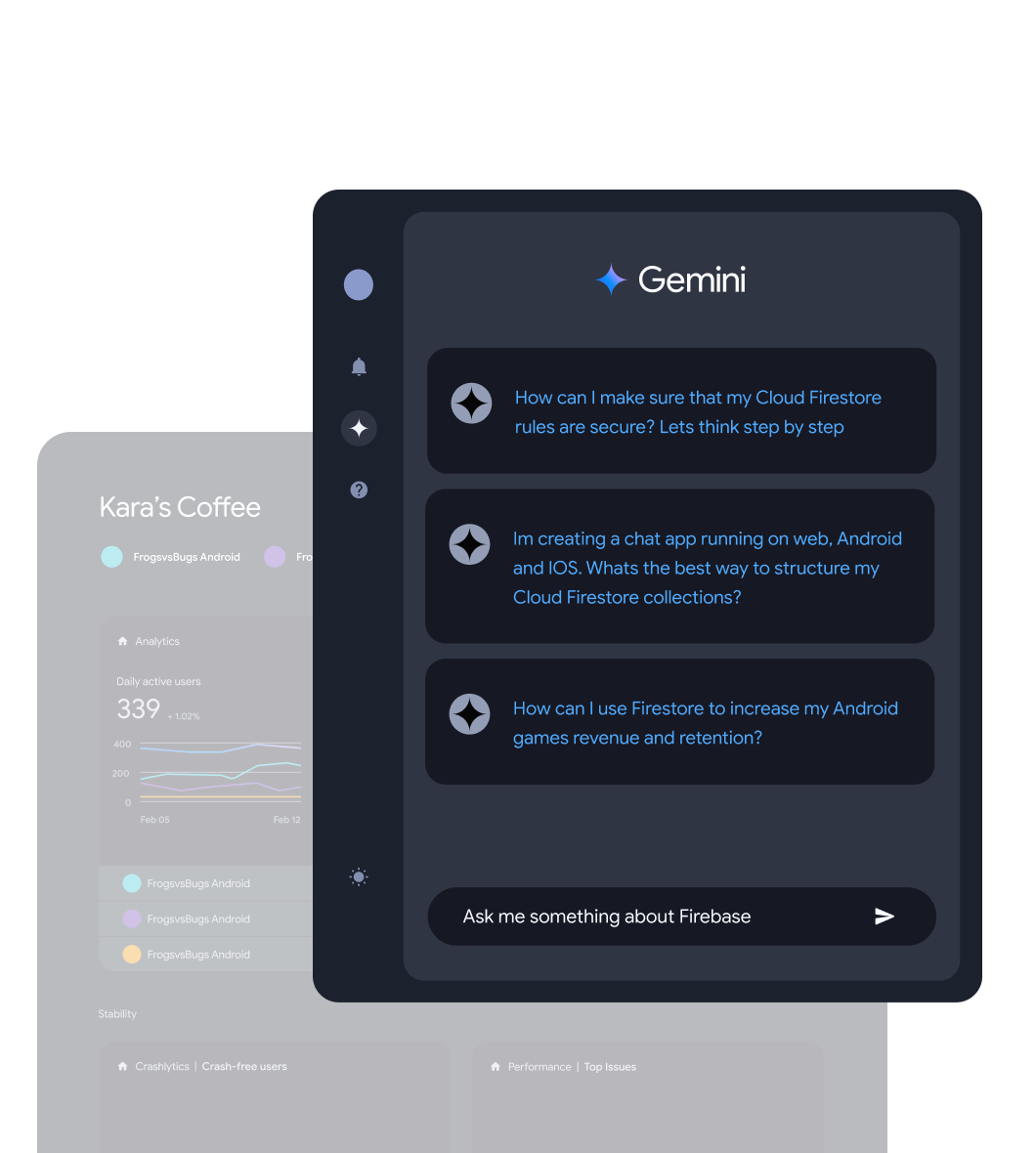

Code chat

Ask development questions and receive responses that help you reduce errors, solve problems, and become a better developer. Gemini uses the context of your environment to give answers relevant to your questions.

Explore apps built with the Gemini API

Join the community

Tap into the power of our community forum. Get answers, build together, and be part of the conversation.