AI Edge's Google Cloud solution for testing and benchmarking on-device machine learning (ML) at scale.

Optimizing ML model performance across diverse mobile devices can be challenging. Manual testing is slow, costly, and out of reach for most developers, which leaves real-world model performance uncertain. Google AI Edge Portal solves this by enabling LiteRT model benchmarking across a wide range of mobile devices, helping developers find the best configurations for large-scale ML model deployment.

Optimizing mobile ML deployment

Simplify & accelerate testing cycles across the diverse hardware landscape: Effortlessly assess model performance across hundreds of representative mobile devices in minutes.

Proactively assure model quality & identify issues early: Pinpoint hardware-specific performance variations or regressions (like on particular chipsets or memory-constrained devices) before deployment.

Lower device testing cost & access the latest hardware: Test on a diverse and continually growing fleet of physical devices (currently 100+ device models from various Android OEMs) without the expense and complexity of maintaining your own lab.

Unlock powerful, data-driven decisions & business intelligence: Google AI Edge Portal delivers rich performance data and comparisons, providing the crucial business intelligence needed to confidently guide model optimization and validate deployment readiness.

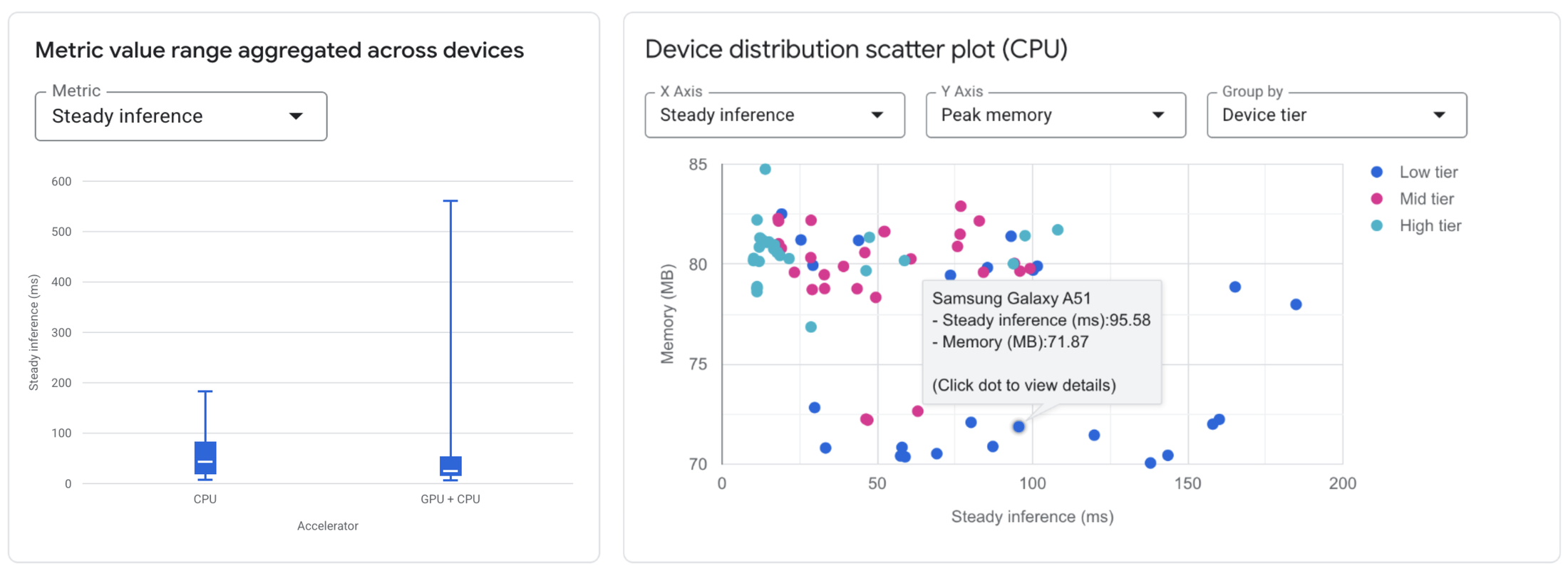

Example benchmark:

How Google AI Edge Portal helps you benchmark your LiteRT models

Upload & configure: Upload your model file via the UI or point to it in your Google Cloud Storage bucket.

Select accelerators: Specify testing against CPU or GPU (with automatic CPU fallback). NPU support is planned for future releases.

Select devices: Choose target devices from our diverse pool using filters (device tier, brand, chipset, RAM) or select curated lists with convenient shortcuts.
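The three setup steps above can be pictured as assembling a small job description. The sketch below is illustrative only: the Portal is configured through its UI, and every name here (`Device`, `filter_devices`, `BenchmarkJob`, the device pool, and the bucket path) is a hypothetical stand-in rather than part of any Portal API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Device:
    """Hypothetical record mirroring the filters named in the text."""
    model: str
    brand: str
    chipset: str
    tier: str      # assumed values: "low", "mid", "high"
    ram_gb: int


def filter_devices(pool, *, tier=None, brand=None, chipset=None, min_ram_gb=0):
    """Return the devices that match every filter that was supplied."""
    selected = []
    for d in pool:
        if tier is not None and d.tier != tier:
            continue
        if brand is not None and d.brand != brand:
            continue
        if chipset is not None and d.chipset != chipset:
            continue
        if d.ram_gb < min_ram_gb:
            continue
        selected.append(d)
    return selected


@dataclass
class BenchmarkJob:
    """Hypothetical job description: model location, accelerators, devices."""
    model_uri: str
    accelerators: list
    devices: list = field(default_factory=list)


# Made-up device pool, for illustration only.
POOL = [
    Device("Phone A", "BrandX", "ChipOne", "high", 12),
    Device("Phone B", "BrandX", "ChipTwo", "mid", 6),
    Device("Phone C", "BrandY", "ChipOne", "low", 4),
]

job = BenchmarkJob(
    model_uri="gs://my-bucket/models/model.tflite",  # placeholder path
    accelerators=["cpu", "gpu"],                     # NPU is not yet supported
    devices=filter_devices(POOL, brand="BrandX", min_ram_gb=6),
)
print([d.model for d in job.devices])  # ['Phone A', 'Phone B']
```

Treating each filter as optional keeps the selection composable: leaving every filter unset returns the whole pool, and each added filter only narrows it.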

Create a New Benchmark Job on 100+ Devices. (Note: GIF is accelerated and edited for brevity)

From there, submit your job and await completion. Once ready, explore the results in the interactive dashboard:

Compare configurations: Quickly visualize how performance metrics (e.g., average latency, peak memory) differ when using different accelerators across all tested devices.

Analyze device impact: See how a specific model configuration performs across the range of selected devices. Use histograms and scatter plots to quickly identify performance variations tied to device characteristics.

Detailed metrics: Access a detailed, sortable table showing specific metrics (initialization time, inference latency, memory usage) for each individual device, alongside its hardware specifications.
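To make the comparisons above concrete, here is a minimal sketch of the same analysis done by hand on a handful of result rows. The rows and field names (`device`, `accelerator`, `avg_latency_ms`, `peak_memory_mb`) are invented for illustration and are not the Portal's export schema; the dashboard performs this kind of aggregation for you.

```python
from collections import defaultdict
from statistics import mean

# Made-up result rows; field names are assumptions, not the Portal's schema.
RESULTS = [
    {"device": "Phone A", "accelerator": "cpu", "avg_latency_ms": 20.0, "peak_memory_mb": 100.0},
    {"device": "Phone A", "accelerator": "gpu", "avg_latency_ms": 8.0, "peak_memory_mb": 150.0},
    {"device": "Phone B", "accelerator": "cpu", "avg_latency_ms": 40.0, "peak_memory_mb": 110.0},
    {"device": "Phone B", "accelerator": "gpu", "avg_latency_ms": 50.0, "peak_memory_mb": 160.0},
]


def mean_latency_by_accelerator(rows):
    """Compare configurations: average latency per accelerator across devices."""
    grouped = defaultdict(list)
    for r in rows:
        grouped[r["accelerator"]].append(r["avg_latency_ms"])
    return {acc: mean(vals) for acc, vals in grouped.items()}


def gpu_speedup_by_device(rows):
    """Analyze device impact: CPU latency / GPU latency (>1 means GPU was faster)."""
    by_device = defaultdict(dict)
    for r in rows:
        by_device[r["device"]][r["accelerator"]] = r["avg_latency_ms"]
    return {
        dev: lat["cpu"] / lat["gpu"]
        for dev, lat in by_device.items()
        if "cpu" in lat and "gpu" in lat
    }


def slowest_first(rows, accelerator):
    """Detailed metrics: rows for one accelerator, sorted by latency, slowest first."""
    picked = [r for r in rows if r["accelerator"] == accelerator]
    return sorted(picked, key=lambda r: r["avg_latency_ms"], reverse=True)


print(mean_latency_by_accelerator(RESULTS))  # {'cpu': 30.0, 'gpu': 29.0}
print(gpu_speedup_by_device(RESULTS))        # {'Phone A': 2.5, 'Phone B': 0.8}
```

In this invented data the two accelerators look nearly identical on average, yet the per-device view shows the GPU is 2.5x faster on one phone and slower on the other. That is the kind of hardware-specific variation an aggregate number can hide.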

View Benchmark Results on the interactive dashboard. (Note: GIF is accelerated and edited for brevity)

Join the Google AI Edge Portal private preview

Google AI Edge Portal is available in private preview for allowlisted Google Cloud customers. During this private preview period, access is provided at no charge, subject to the preview terms.

This preview is ideal for developers and teams building mobile ML applications with LiteRT who need reliable benchmarking data across diverse Android hardware and are willing to provide feedback to help shape the product's future. To express interest and request access, complete the sign-up form; access is granted via allowlisting.