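The machine learning (ML) models you use with LiteRT are originally built and trained using TensorFlow core libraries and tools. Once you have built a model with TensorFlow core, you can convert it to a smaller, more efficient ML model format called a LiteRT model. This section explains how to convert TensorFlow models to the LiteRT model format.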

Conversion workflow

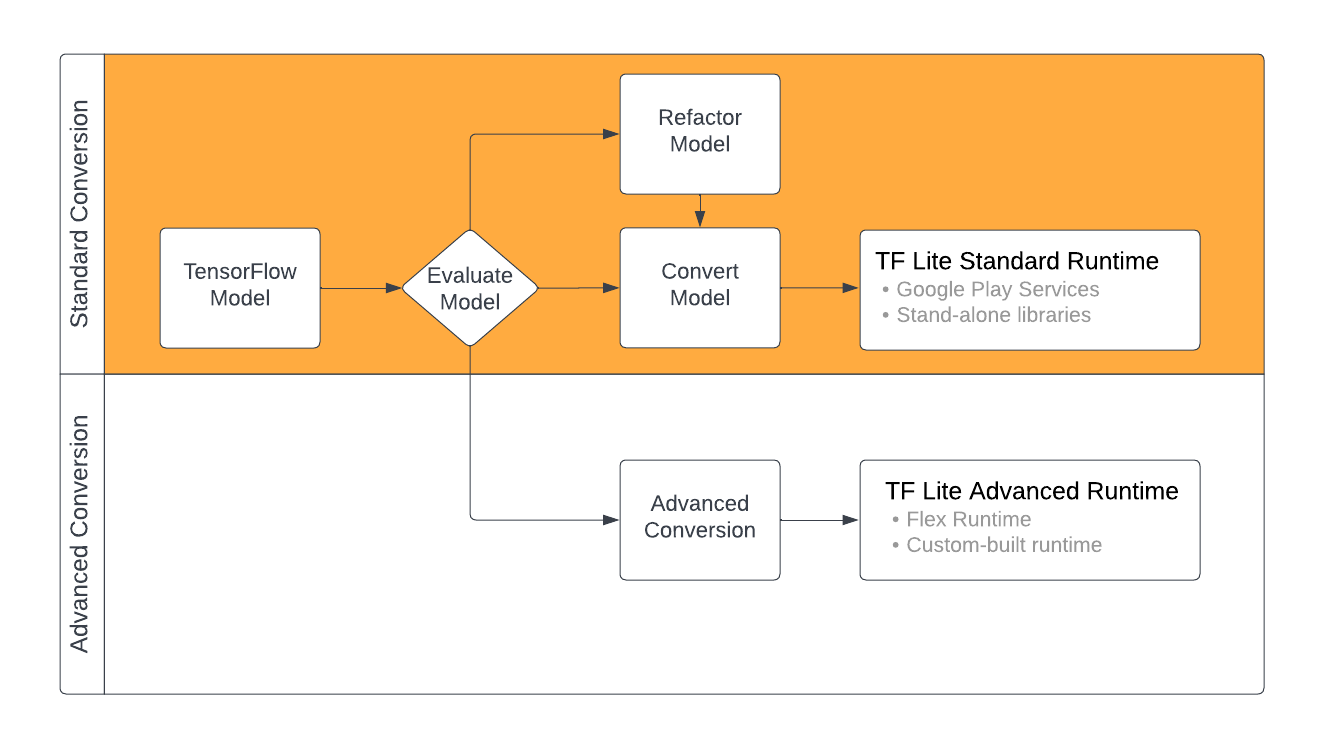

將 TensorFlow 模型轉換為 LiteRT 格式時,可採用的路徑會因機器學習模型的內容而異。首先,您應評估模型是否可直接轉換。這項評估會根據模型使用的 TensorFlow 運算,判斷標準 LiteRT 執行階段環境是否支援模型內容。如果模型使用的作業不在支援的作業集中,您可以重構模型或使用進階轉換技術。

下圖顯示轉換模型的高階步驟。

圖 1. LiteRT 轉換工作流程。

The following sections outline the process of evaluating and converting models for use with LiteRT.

Input model formats

The converter supports the following input model formats:

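- SavedModel (recommended): A TensorFlow model saved as a set of files on disk.

- Keras model: A model created using the high-level Keras API.

- Keras H5 format: A lightweight alternative to the SavedModel format, supported by the Keras API.

- Models built from concrete functions: A model created using the low-level TensorFlow API.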

You can save both Keras and concrete function models as a SavedModel and convert them using the recommended path.
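The recommended path can be sketched as follows. This is a minimal illustration rather than a definitive recipe: the `Scaler` module, its `scaled` output name, and the temporary directory are hypothetical, chosen only to keep the example self-contained.

```python
import tempfile

import tensorflow as tf


class Scaler(tf.Module):
    """Hypothetical model: multiplies a batch of float vectors by 2."""

    @tf.function(input_signature=[tf.TensorSpec(shape=[None, 4], dtype=tf.float32)])
    def __call__(self, x):
        return {"scaled": x * 2.0}


model = Scaler()
saved_model_dir = tempfile.mkdtemp()  # placeholder location for the SavedModel

# Save the model, exposing its concrete function as the serving signature.
tf.saved_model.save(
    model,
    saved_model_dir,
    signatures={"serving_default": model.__call__.get_concrete_function()},
)

# Convert from the SavedModel directory (the recommended input format).
converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
tflite_model = converter.convert()  # serialized LiteRT model, as bytes
```

Exporting an explicit signature gives the converted model named inputs and outputs.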

Note: If you have a Jax model, you can use the TFLiteConverter.experimental_from_jax API to convert it to the LiteRT format. This API is subject to change while it is in experimental mode.

Conversion evaluation

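Evaluating your model is an important step before you attempt to convert it. When evaluating, determine whether the contents of your model are compatible with the LiteRT format. You should also determine whether the model is a good fit for mobile and edge devices in terms of the size of the data it uses, its hardware processing requirements, and its overall size and complexity.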

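For many models, the converter should work out of the box. However, the LiteRT built-in operator library supports only a subset of TensorFlow core operators, so some models may need additional steps before they can be converted to LiteRT. In addition, some operations that LiteRT supports have restricted usage requirements for performance reasons. See the operator compatibility guide to determine whether your model needs to be refactored for conversion.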
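One informal way to start this evaluation is to list the TensorFlow operation types a model uses, then compare them against the operator compatibility guide by hand. The sketch below assumes a hypothetical `tiny_model` function. It inspects only the top-level graph of the concrete function, so operations inside nested functions are not listed.

```python
import tensorflow as tf


@tf.function(input_signature=[tf.TensorSpec(shape=[None, 4], dtype=tf.float32)])
def tiny_model(x):
    # Hypothetical model: one matrix multiply followed by a ReLU.
    weights = tf.ones([4, 2])
    return tf.nn.relu(tf.matmul(x, weights))


# Trace the function and collect the distinct op types in its graph.
graph = tiny_model.get_concrete_function().graph
used_ops = sorted({op.type for op in graph.get_operations()})
print(used_ops)
```

The list also contains bookkeeping operations such as placeholders, which do not need a LiteRT counterpart.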

Model conversion

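The LiteRT converter takes a TensorFlow model and generates a LiteRT model, an optimized FlatBuffer format identified by the .tflite file extension. You can load a SavedModel or directly convert a model you create in code.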
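As a rough sketch of converting a model created in code, the example below converts a concrete function directly and writes the result to a .tflite file. The `add_one` function and the output path are hypothetical placeholders.

```python
import os
import tempfile

import tensorflow as tf


@tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
def add_one(x):
    # Hypothetical model: adds 1.0 to every element.
    return x + 1.0


# Convert the traced function without saving it to disk first.
concrete_fn = add_one.get_concrete_function()
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn])
tflite_model = converter.convert()  # serialized FlatBuffer, as bytes

# Write the converted model using the .tflite extension.
output_path = os.path.join(tempfile.mkdtemp(), "model.tflite")
with open(output_path, "wb") as f:
    f.write(tflite_model)
```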

The converter takes three main flags (or options) that customize the conversion for your model:

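- Compatibility flags let you specify whether the conversion should allow custom operators.

- Optimization flags let you specify the type of optimization to apply during conversion. The most commonly used optimization technique is post-training quantization.

- Metadata flags let you add metadata to the converted model, which makes it easier to create platform-specific wrapper code when deploying models on devices.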
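The compatibility and optimization options can be set on the converter object in the Python API, roughly as sketched below. The `dense` function is a hypothetical stand-in for a real model, and the values shown are one possible choice, not a recommendation. Metadata is not shown in this sketch.

```python
import tensorflow as tf


@tf.function(input_signature=[tf.TensorSpec(shape=[1, 8], dtype=tf.float32)])
def dense(x):
    # Hypothetical model: a single matrix multiply with constant weights.
    weights = tf.ones([8, 8])
    return tf.matmul(x, weights)


converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [dense.get_concrete_function()]
)

# Compatibility: restrict to built-in operators and reject custom operators.
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]
converter.allow_custom_ops = False

# Optimization: enable the default set, which applies post-training quantization.
converter.optimizations = [tf.lite.Optimize.DEFAULT]

tflite_model = converter.convert()
```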

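You can convert your model using the Python API or the command line tool. For step-by-step instructions on running the converter on your model, see the "Convert TF model" guide.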

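Typically, you convert your model for the standard LiteRT runtime environment or for the Google Play services runtime environment for LiteRT (Beta). Some advanced use cases require a customized model runtime environment, which adds steps to the conversion process. For more guidance, see the "advanced runtime environment" section of the Android overview.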

Advanced conversion

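If you run into errors while running the converter on your model, the most likely cause is an operator compatibility issue. LiteRT does not support every TensorFlow operation. You can work around these issues by refactoring your model, or by using advanced conversion options that create a modified LiteRT format model and a custom runtime environment for that model.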

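- For more information on TensorFlow and LiteRT model compatibility considerations, see the "Model compatibility overview".

- Topics under the "Model compatibility overview" cover advanced techniques for refactoring your model, such as the "Select operators" guide.

- For a full list of operations and limitations, see the LiteRT Ops page.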
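One advanced option, covered by the "Select operators" guide, is to let the converter fall back to selected TensorFlow operations when the built-in set is not enough. The sketch below tries a built-in-only conversion first and retries with select TensorFlow ops if that fails. The `convert_with_fallback` helper and the `square` function are hypothetical names introduced for illustration. A model converted with select TensorFlow ops needs a runtime environment that includes those operations.

```python
import tensorflow as tf


def convert_with_fallback(concrete_fn):
    """Hypothetical helper: try built-in ops first, then allow select TF ops."""
    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn])
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]
    try:
        return converter.convert(), "builtins"
    except Exception as err:  # converter errors vary, so catch broadly here
        print(f"Built-in conversion failed: {err}")

    # Retry with selected TensorFlow ops enabled alongside the built-in ops.
    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn])
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,
        tf.lite.OpsSet.SELECT_TF_OPS,
    ]
    return converter.convert(), "builtins+select_tf_ops"


@tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
def square(x):
    # Hypothetical model that only needs built-in operators.
    return x * x


tflite_model, op_set = convert_with_fallback(square.get_concrete_function())
```

Trying the built-in set first keeps the fallback from being used unless the conversion actually requires it.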