The machine learning (ML) models you use with LiteRT are originally built and trained using TensorFlow core libraries and tools. Once you've built a model with TensorFlow core, you can convert it to a smaller, more efficient ML model format called a LiteRT model. This section provides guidance for converting your TensorFlow models to the LiteRT model format.

Conversion workflow

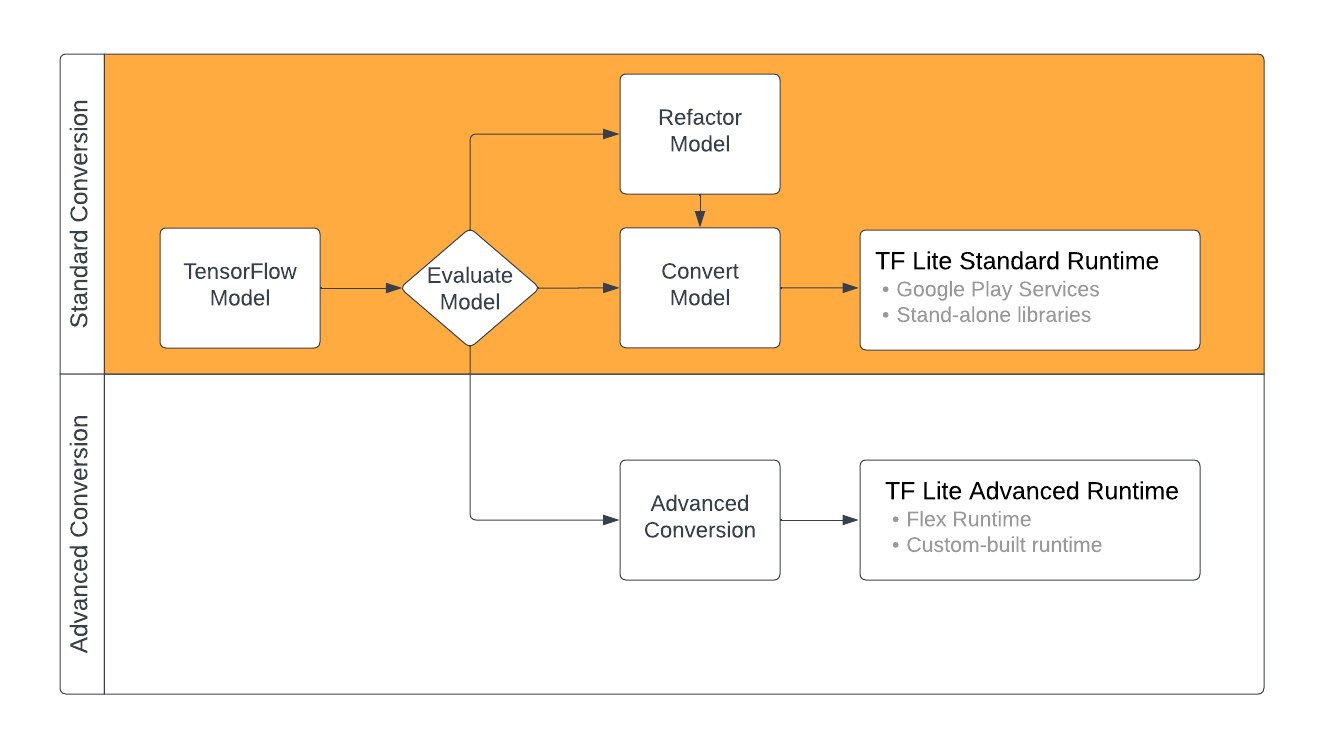

Converting TensorFlow models to LiteRT format can take a few paths depending on the content of your ML model. As the first step of that process, you should evaluate your model to determine if it can be directly converted. This evaluation determines if the content of the model is supported by the standard LiteRT runtime environments based on the TensorFlow operations it uses. If your model uses operations outside of the supported set, you have the option to refactor your model or use advanced conversion techniques.

The diagram below shows the high level steps in converting a model.

Figure 1. LiteRT conversion workflow.

The following sections outline the process of evaluating and converting models for use with LiteRT.

Input model formats

You can use the converter with the following input model formats:

- SavedModel (recommended): A TensorFlow model saved as a set of files on disk.

- Keras model: A model created using the high-level Keras API.

- Keras H5 format: A lightweight alternative to the SavedModel format, supported by the Keras API.

- Models built from concrete functions: A model created using the low-level TensorFlow API.

You can save both Keras and concrete function models as a SavedModel and convert them using the recommended path.
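As a minimal sketch of the recommended path, assuming TensorFlow 2.x is installed: a hypothetical `TinyModel` (a `tf.Module` with made-up weights) exposes a concrete function, is saved as a SavedModel, and is converted with `tf.lite.TFLiteConverter.from_saved_model`. For comparison, the same concrete function is also converted directly with `from_concrete_functions`.

```python
import tempfile

import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical toy model: y = x @ w, with illustrative fixed weights."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 3], dtype=tf.float32)])
    def serve(self, x):
        return tf.matmul(x, self.w)


module = TinyModel()
concrete_func = module.serve.get_concrete_function()

# Recommended path: save as a SavedModel, then convert the directory on disk.
saved_model_dir = tempfile.mkdtemp()
tf.saved_model.save(module, saved_model_dir, signatures=concrete_func)
tflite_from_saved_model = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir).convert()

# Alternative: convert the concrete function directly from memory.
# Passing `module` as trackable_obj keeps a reference to it so its variables
# are not garbage collected during conversion.
tflite_from_concrete = tf.lite.TFLiteConverter.from_concrete_functions(
    [concrete_func], module
).convert()

print(len(tflite_from_saved_model), len(tflite_from_concrete))
```

Both calls return the converted model as `bytes`. For an in-memory Keras model, `tf.lite.TFLiteConverter.from_keras_model(model)` is the corresponding entry point.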

If you have a JAX model, you can use the TFLiteConverter.experimental_from_jax API to convert it to the LiteRT format. Note that this API is subject to change while in experimental mode.

Conversion evaluation

Evaluating your model is an important step before attempting to convert it. When evaluating, you want to determine whether the contents of your model are compatible with the LiteRT format. You should also determine whether your model is a good fit for use on mobile and edge devices in terms of the size of data the model uses, its hardware processing requirements, and the model's overall size and complexity.

For many models, the converter should work out of the box. However, the LiteRT builtin operator library supports only a subset of TensorFlow core operators, which means some models may need additional steps before converting to LiteRT. Additionally, some operations that are supported by LiteRT have restricted usage requirements for performance reasons. See the operator compatibility guide to determine whether your model needs to be refactored for conversion.
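One practical way to check compatibility, sketched here under the assumption of TensorFlow 2.7 or later, is to attempt a conversion and then inspect the result: print the converted model's size and list its operators with `tf.lite.experimental.Analyzer.analyze`, so they can be compared against the operator compatibility guide. `TinyModel` is a hypothetical toy model.

```python
import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical toy model used only to demonstrate the check."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.ones([3, 2]))

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 3], dtype=tf.float32)])
    def serve(self, x):
        return tf.nn.relu(tf.matmul(x, self.w))


module = TinyModel()
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [module.serve.get_concrete_function()], module
)
tflite_model = converter.convert()

# Overall size is one signal of whether the model suits a mobile or edge device.
print(f"Converted model size: {len(tflite_model)} bytes")

# Print the operators the converted model uses, for comparison against the
# operator compatibility guide.
tf.lite.experimental.Analyzer.analyze(model_content=tflite_model)
```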

Model conversion

The LiteRT converter takes a TensorFlow model and generates a LiteRT model (an optimized FlatBuffer format identified by the .tflite file extension). You can load a SavedModel or directly convert a model you create in code.
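Because the converter returns the FlatBuffer as raw bytes, saving it is a plain file write. The sketch below uses only the Python standard library; `save_tflite_model`, `has_tflite_identifier`, and `looks_like_tflite` are hypothetical helper names. It writes the bytes to a `.tflite` file and performs a cheap header check, assuming the `TFL3` file identifier declared in the LiteRT (TensorFlow Lite) FlatBuffer schema, which FlatBuffers store at byte offset 4.

```python
from pathlib import Path
from typing import Union

# File identifier from the TFLite FlatBuffer schema. FlatBuffers place it in
# bytes 4-8 of the buffer, right after the 4-byte root table offset.
TFLITE_FILE_IDENTIFIER = b"TFL3"


def save_tflite_model(model_bytes: bytes, path: Union[str, Path]) -> Path:
    """Write converter output to a .tflite file and return its path."""
    out = Path(path)
    if out.suffix != ".tflite":
        raise ValueError(f"expected a .tflite path, got {str(path)!r}")
    out.write_bytes(model_bytes)
    return out


def has_tflite_identifier(buf: bytes) -> bool:
    """True if the buffer carries the TFLite FlatBuffer file identifier."""
    return len(buf) >= 8 and buf[4:8] == TFLITE_FILE_IDENTIFIER


def looks_like_tflite(path: Union[str, Path]) -> bool:
    """Read only the first 8 bytes of the file and check the identifier."""
    with open(path, "rb") as f:
        return has_tflite_identifier(f.read(8))
```

In practice, `model_bytes` would be the value returned by `converter.convert()`. The header check only confirms the identifier; it does not validate the rest of the model.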

The converter takes 3 main flags (or options) that customize the conversion for your model:

- Compatibility flags allow you to specify whether the conversion should allow custom operators.

- Optimization flags allow you to specify the type of optimization to apply during conversion. The most commonly used optimization technique is post-training quantization.

- Metadata flags allow you to add metadata to the converted model, which makes it easier to create platform-specific wrapper code when deploying models on devices.
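The sketch below, assuming TensorFlow 2.x, sets two of these flags on `tf.lite.TFLiteConverter`: `allow_custom_ops` (compatibility) and `optimizations = [tf.lite.Optimize.DEFAULT]` (optimization), which applies post-training quantization. With no representative dataset supplied, this is dynamic range quantization, which stores eligible weights as 8-bit integers. `DenseModel` and `convert_model` are hypothetical names, and the random weights exist only to make the size difference visible. Metadata is not covered here; see the Adding metadata overview.

```python
import tensorflow as tf


class DenseModel(tf.Module):
    """Hypothetical model with a 256x64 weight matrix (random, illustration only)."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([256, 64]))

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 256], dtype=tf.float32)])
    def serve(self, x):
        return tf.matmul(x, self.w)


def convert_model(module, quantize):
    converter = tf.lite.TFLiteConverter.from_concrete_functions(
        [module.serve.get_concrete_function()], module
    )
    # Compatibility flag: allow ops without a builtin kernel to be emitted as
    # custom ops (they then need a custom implementation at runtime).
    converter.allow_custom_ops = True
    if quantize:
        # Optimization flag: Optimize.DEFAULT enables post-training quantization;
        # without a representative dataset this is dynamic range quantization.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


module = DenseModel()
float_model = convert_model(module, quantize=False)
quantized_model = convert_model(module, quantize=True)
print(f"float: {len(float_model)} bytes, quantized: {len(quantized_model)} bytes")
```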

You can convert your model using the Python API or the command-line tool. See the Convert TF model guide for step-by-step instructions on running the converter on your model.

Typically you would convert your model for the standard LiteRT runtime environment or the Google Play services runtime environment for LiteRT (Beta). Some advanced use cases require customizing the model runtime environment, which requires additional steps in the conversion process. See the advanced runtime environment section of the Android overview for more guidance.

Advanced conversion

If you run into errors while running the converter on your model, the most likely cause is an operator compatibility issue: not all TensorFlow operations are supported by LiteRT. You can work around these issues by refactoring your model, or by using advanced conversion options that allow you to create a modified LiteRT format model and a custom runtime environment for that model.

- See the Model compatibility overview for more information on TensorFlow and LiteRT model compatibility considerations.

- Topics under the Model compatibility overview cover advanced techniques for refactoring your model, such as the Select operators guide.

- For the full list of operations and limitations, see the LiteRT Ops page.
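As a sketch of one advanced option, assuming TensorFlow 2.x: first try a builtin-only conversion, and if it fails, retry with `tf.lite.OpsSet.SELECT_TF_OPS` added to `target_spec.supported_ops` so that unsupported operations fall back to TensorFlow kernels. `convert_with_select_ops_fallback` and `TinyModel` are hypothetical names; the toy model converts with builtins alone, so the fallback branch is not exercised here.

```python
import tensorflow as tf


def convert_with_select_ops_fallback(concrete_func, trackable_obj):
    """Try builtin-only conversion; on failure, retry allowing Select TF ops.

    Returns (model_bytes, used_select_ops).
    """
    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_func], trackable_obj)
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]
    try:
        return converter.convert(), False
    except Exception as err:  # broad catch: conversion failures surface as exceptions
        print(f"Builtin-only conversion failed, retrying with Select TF ops: {err}")

    # Use a fresh converter for the retry.
    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_func], trackable_obj)
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,  # prefer builtin LiteRT kernels
        tf.lite.OpsSet.SELECT_TF_OPS,    # fall back to TensorFlow kernels
    ]
    return converter.convert(), True


class TinyModel(tf.Module):
    """Hypothetical toy model that uses only builtin-supported ops."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.ones([3, 2]))

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 3], dtype=tf.float32)])
    def serve(self, x):
        return tf.matmul(x, self.w)


module = TinyModel()
tflite_model, used_select_ops = convert_with_select_ops_fallback(
    module.serve.get_concrete_function(), module
)
print(f"used Select TF ops: {used_select_ops}")
```

A model converted with Select TF ops needs a runtime that includes those TensorFlow kernels, which is the kind of custom runtime environment this section refers to.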

Next steps

- See the convert TF models guide to quickly get started on converting your model.

- See the optimization overview for guidance on how to optimize your converted model using techniques like post-training quantization.

- See the Adding metadata overview to learn how to add metadata to your models. Metadata provides other users with a description of your model as well as information that code generators can leverage.