High-performance ML & GenAI deployment on edge platforms

Efficient conversion, runtime, and optimization for on-device machine learning.

Built on the battle-tested foundation of TensorFlow Lite

LiteRT is the next generation of TensorFlow Lite, the world's most widely deployed machine learning runtime. It powers apps you use every day, delivering low latency and strong privacy on billions of devices.

Trusted by Google's most critical apps

100K+ applications, billions of global users

LiteRT highlights

Cross-platform ready

Unleash GenAI

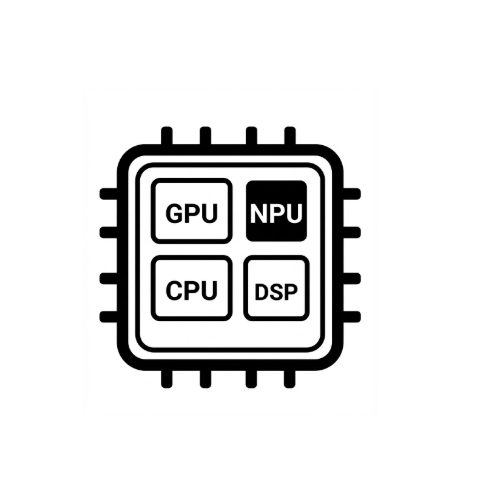

Simplified hardware acceleration

Multi-framework support

Deploy via LiteRT

Obtain a model

Use pre-trained .tflite models, or convert PyTorch, JAX, or TensorFlow models to the .tflite format.
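
Whichever route you take, the result is a single .tflite file. As a minimal sketch, assuming the file was written by the standard converter: .tflite models are FlatBuffers whose schema declares the file identifier "TFL3", stored at byte offsets 4 to 7. A quick header check therefore catches an obviously wrong file (for example, an HTML error page saved as model.tflite) before deployment. The helper names `looks_like_tflite` and `check_model_file` are hypothetical and not part of the LiteRT API, and a passing check does not prove the model itself is valid.

```python
# Minimal sketch: sanity-check that a file looks like a .tflite model.
# Assumption: the file was written by the standard converter, which tags the
# FlatBuffer with the TFLite schema's file identifier "TFL3".
# Helper names are hypothetical, not LiteRT API.
TFLITE_FILE_IDENTIFIER = b"TFL3"


def looks_like_tflite(header: bytes) -> bool:
    """Return True if the first bytes carry the TFLite FlatBuffer identifier.

    A FlatBuffer starts with a 4-byte offset to its root table; when a file
    identifier is used, it occupies the next 4 bytes (offsets 4 to 7).
    """
    return len(header) >= 8 and header[4:8] == TFLITE_FILE_IDENTIFIER


def check_model_file(path: str) -> bool:
    """Read only the 8-byte header of the file at `path` and check it."""
    with open(path, "rb") as f:
        return looks_like_tflite(f.read(8))


if __name__ == "__main__":
    import sys

    for model_path in sys.argv[1:]:
        status = "ok" if check_model_file(model_path) else "not a .tflite FlatBuffer"
        print(f"{model_path}: {status}")
```

Checking only the identifier keeps the test cheap; full structural validation is left to the runtime when it loads the model.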

Optimize the model

Optionally, quantize the model to reduce its size.
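
Quantization stores weights (and optionally activations) as low-precision integers instead of 32-bit floats; going from float32 to int8 cuts weight storage by about 4x. The sketch below is a minimal illustration, assuming per-tensor asymmetric int8 quantization, of the affine mapping real_value = scale * (int8_value - zero_point) used by TensorFlow Lite's quantization scheme. The helper names are hypothetical. In practice the converter computes these parameters for you, with refinements such as per-channel weight scales and calibration on a representative dataset.

```python
# Minimal sketch of per-tensor affine int8 quantization arithmetic:
#     real_value = scale * (int8_value - zero_point)
# The helpers are hypothetical and only illustrate the math; the converter
# does this (plus per-channel scales and calibration) automatically.
from typing import List, Tuple

QMIN, QMAX = -128, 127  # int8 range


def choose_qparams(values: List[float]) -> Tuple[float, int]:
    """Pick a scale and zero point mapping [min, max] onto [QMIN, QMAX]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:  # every value is zero
        return 1.0, 0
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = round(QMIN - lo / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8 codes, clamping to the representable range."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 codes."""
    return [scale * (q - zero_point) for q in codes]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 2.0]
    scale, zp = choose_qparams(weights)
    codes = quantize(weights, scale, zp)
    restored = dequantize(codes, scale, zp)
    print(f"scale={scale:.6f} zero_point={zp}")
    print("codes:", codes)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Within the calibrated range, each restored value is off by at most half a quantization step (scale / 2), which is the precision traded for the smaller model.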

Run

Pick the desired accelerator and run the model on LiteRT.
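
Picking an accelerator usually means stating a preference and falling back when a device lacks the hardware. Below is a minimal, hypothetical sketch of that priority-ordered fallback pattern. The accelerator names and `pick_accelerator` are illustrative assumptions, not LiteRT identifiers; LiteRT configures accelerators through its own API, which this sketch does not reproduce.

```python
# Hypothetical sketch of priority-ordered accelerator fallback.
# Accelerator names and the helper are illustrative assumptions,
# not LiteRT API identifiers.
from typing import Iterable, Set

DEFAULT_PREFERENCE = ("NPU", "GPU", "CPU")


def pick_accelerator(available: Set[str],
                     preference: Iterable[str] = DEFAULT_PREFERENCE) -> str:
    """Return the first preferred accelerator the device reports as available."""
    for name in preference:
        if name in available:
            return name
    # Assumption: CPU execution is always possible, so it is the last resort.
    return "CPU"


if __name__ == "__main__":
    print(pick_accelerator({"GPU", "CPU"}))  # device without an NPU; prints GPU
```

Keeping CPU as the unconditional last resort means the same app still runs, only more slowly, on devices without a GPU or NPU.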

Samples, models, and demo

See sample app

Tutorials show you how to use LiteRT with complete, end-to-end examples.

See GenAI models

Pre-trained, out-of-the-box GenAI models.

See demos: Google AI Edge Gallery app

An app that showcases on-device ML and GenAI use cases.