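Crafting a prompt for an artificial intelligence (AI) model, such as Gemini or Gemma, that perfectly captures your intent can be a non-trivial task. Often, you must write a prompt by hand and then test it across a variety of use cases to confirm that it fits your needs. Based on the results, you might make targeted updates to the prompt: adding a new sentence in one place or changing some wording in another. This process is not very principled and may not lead to the best results.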

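Google has developed a method that uses LLMs to automatically update a prompt template based on feedback that you provide, in plain language, about the model's outputs. Your feedback is sent to an LLM together with the prompt and the model output, and the LLM updates the prompt to better match the behavior you intended.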
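To make the idea concrete, the following is a minimal sketch of how the three inputs (prompt template, model output, and feedback) might be combined into a single request for the updating LLM. The function name `build_update_request` and the wording of the request are assumptions made for illustration; they are not the format that the library or Vertex AI Studio actually uses.

```python
from textwrap import dedent


def build_update_request(prompt_template: str, model_output: str, feedback: str) -> str:
    """Assemble the text sent to the updating LLM (hypothetical format)."""
    # The layout below is an assumption for illustration only.
    return dedent(
        """\
        You are revising a prompt template.

        Current prompt template:
        {prompt}

        Model output produced with that template:
        {output}

        Feedback from the user:
        {feedback}

        Rewrite the prompt template so future outputs follow the feedback.
        Return only the revised template."""
    ).format(prompt=prompt_template, output=model_output, feedback=feedback)


request = build_update_request(
    prompt_template="Summarize the following article: {article}",
    model_output="The article talks about many things...",
    feedback="Keep the summary to two sentences and avoid filler phrases.",
)
print(request)
```

The request string would then be passed to whichever LLM performs the update; placeholders in the template, such as `{article}`, pass through unchanged.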

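This method is available in two ways:

- The open-source model-alignment Python library lets you flexibly incorporate this approach into your software and workflows.

- A version of this approach is integrated into Vertex AI Studio, so you can use this workflow with just a few clicks.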

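Open-source library

Model Alignment is an open-source Python library, released as a package on PyPI, that lets you align prompts from user feedback through an API. The library is based on our research into updating prompts from user feedback and automatically creating classifiers from labeled data.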

Use the Model Alignment library to curate prompt templates for Gemma


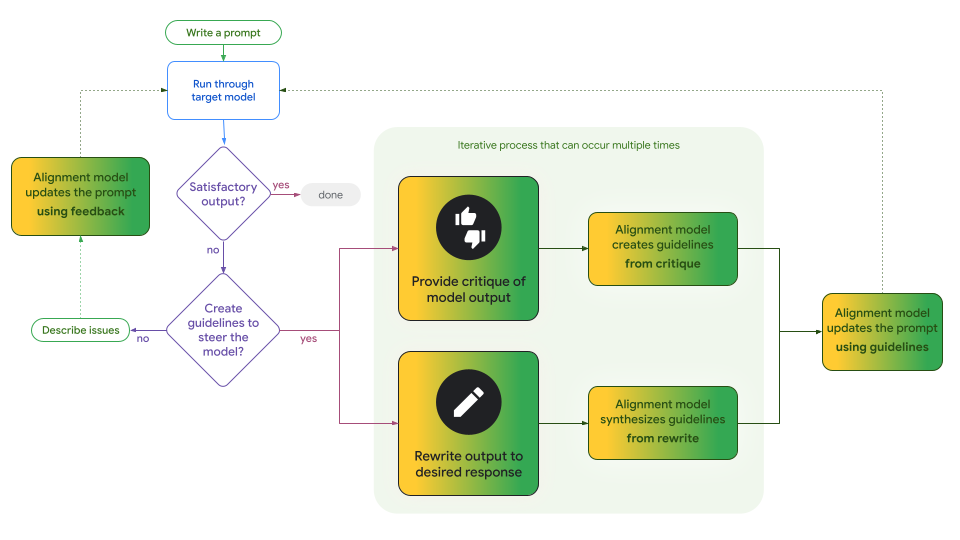

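The library supports two workflows for automatically updating your prompt templates:

- Iterative updates from principles. This workflow uses an LLM to distill guidelines from either indirect critiques of the model's outputs or direct edits of those outputs. You can iteratively create one or more guidelines before sending them to the LLM, which updates the prompt template to adhere to those guidelines. You can also combine your own guidelines with the ones distilled by the LLM.

- Direct critique of model outputs. This workflow takes your feedback on the whole model output and feeds it, along with the prompt and the model output, directly to the LLM to generate an updated prompt template.

Both workflows can be useful for your application. The notable trade-off is the presence of guidelines: they are a useful, concrete artifact of the process that can inform things such as transparency documentation.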
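The difference between the two workflows can be sketched in a few lines of Python. This is an illustrative outline only, not the API of the model-alignment library: the function names, the request wording, and the `fake_llm` stand-in are all assumptions, and a real setup would call an actual model instead.

```python
from typing import Callable, List

# Hypothetical type: any function that takes a request string and returns text.
LLM = Callable[[str], str]


def distill_guideline(llm: LLM, prompt: str, output: str, critique: str) -> str:
    """Principles workflow, step 1: turn one critique into a reusable guideline."""
    return llm(
        f"Prompt:\n{prompt}\n\nOutput:\n{output}\n\nCritique:\n{critique}\n\n"
        "State one general guideline the prompt should enforce."
    ).strip()


def update_from_guidelines(llm: LLM, prompt: str, guidelines: List[str]) -> str:
    """Principles workflow, step 2: rewrite the prompt to follow every guideline."""
    bullets = "\n".join(f"- {g}" for g in guidelines)
    return llm(
        f"Prompt:\n{prompt}\n\nGuidelines:\n{bullets}\n\n"
        "Rewrite the prompt so that it follows all guidelines."
    ).strip()


def update_from_critique(llm: LLM, prompt: str, output: str, critique: str) -> str:
    """Direct workflow: a single call, with no intermediate guidelines kept."""
    return llm(
        f"Prompt:\n{prompt}\n\nOutput:\n{output}\n\nCritique:\n{critique}\n\n"
        "Rewrite the prompt so that it addresses the critique."
    ).strip()


def fake_llm(request: str) -> str:
    """Stand-in for a real model call so that the sketch runs offline."""
    if "State one general guideline" in request:
        return "Answer in at most two sentences."
    return "Summarize the article in at most two sentences: {article}"


prompt = "Summarize the article: {article}"
output = "A long, rambling summary..."

# Principles workflow: guidelines accumulate and remain available for review.
guidelines = [distill_guideline(fake_llm, prompt, output, "Too long.")]
via_guidelines = update_from_guidelines(fake_llm, prompt, guidelines)

# Direct workflow: the critique goes straight into the rewrite request.
direct = update_from_critique(fake_llm, prompt, output, "Too long.")
```

In this sketch the principles workflow leaves behind the `guidelines` list as a reviewable record, while the direct workflow produces only the new prompt.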

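Figure 1. This flowchart shows where the two Model Alignment library workflows diverge, updating your prompt template either through guidelines or directly. Note that the process is iterative and that the workflows are not mutually exclusive, so you can switch between them at any time.

Check out the Colab notebook that uses Gemini to align prompts for Gemma 2 using both workflows.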

Alignment in Vertex AI Studio

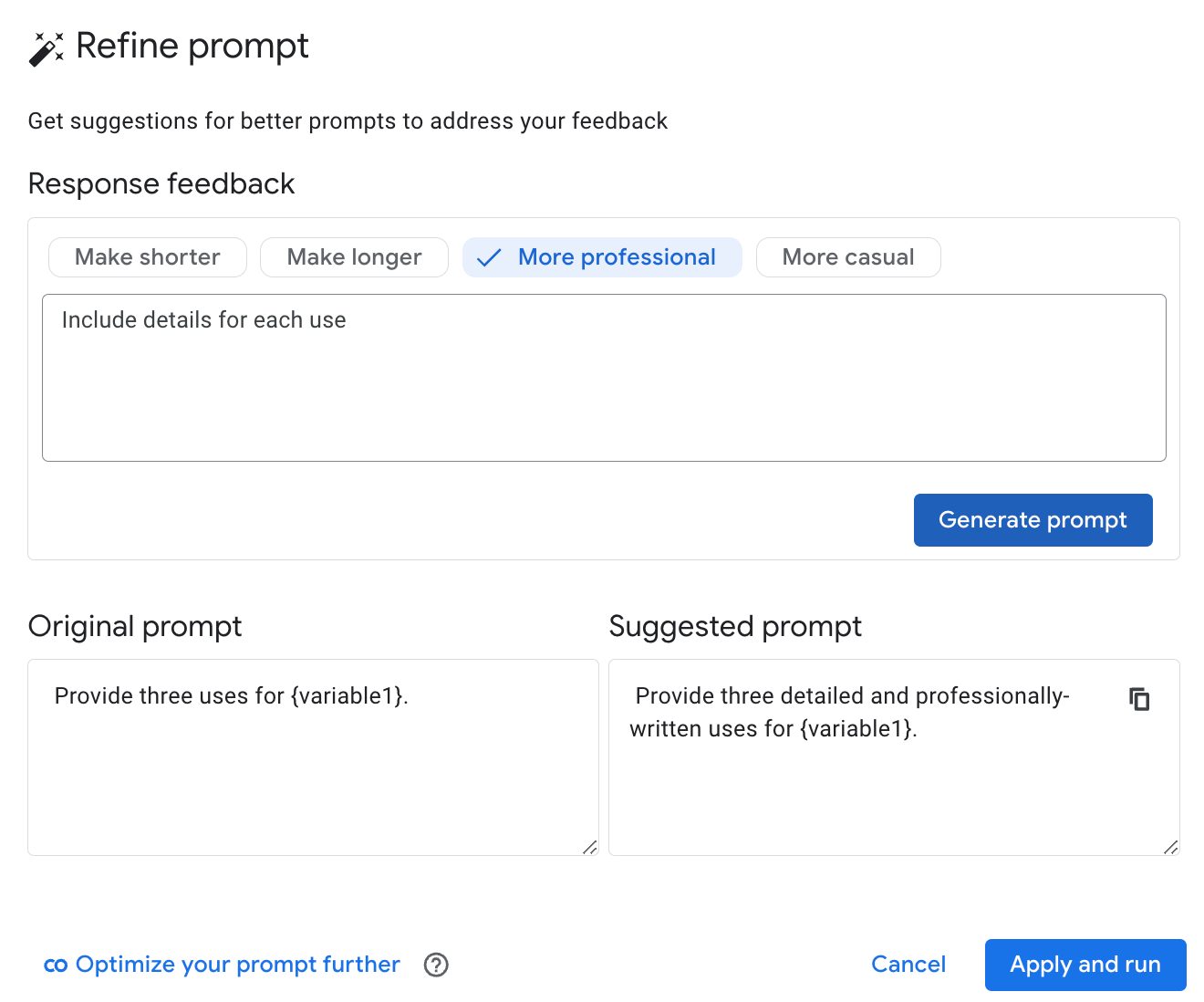

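Google's Vertex AI Studio has added a "refine prompt" feature, based on the direct workflow from the Model Alignment open-source library, to complement its authoring, running, evaluation, and comparison tools.

After running a prompt, you can provide feedback on the ways the model should behave differently, and Vertex AI Studio uses Gemini to draft a rewrite. You can accept the proposed changes and re-run the updated prompt with a click of a button, or you can update your feedback and have Gemini draft another candidate.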
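The accept-or-revise interaction described above can be modeled as a small loop. Vertex AI Studio exposes this through its user interface, so the sketch below is only an analogy in code: `refine_prompt`, `propose`, and `accept` are hypothetical names, and the lambda stubs stand in for Gemini's rewrite and the user's decision.

```python
from typing import Callable, Iterable, Optional


def refine_prompt(
    prompt: str,
    feedback_rounds: Iterable[str],
    propose: Callable[[str, str], str],
    accept: Callable[[str], bool],
) -> Optional[str]:
    """Return the first accepted rewrite, or None if every candidate is rejected."""
    for feedback in feedback_rounds:
        # Each round drafts a fresh candidate from the original prompt and the
        # latest feedback, mirroring "update your feedback and try again".
        candidate = propose(prompt, feedback)
        if accept(candidate):
            return candidate
    return None


# Hypothetical stubs: a real setup would call an LLM and ask the user.
propose = lambda p, f: f"{p}\nConstraint: {f}"
accept = lambda c: "two sentences" in c

result = refine_prompt(
    "Summarize the article.",
    ["Be shorter.", "Use at most two sentences."],
    propose,
    accept,
)
print(result)
```

Here the first candidate is rejected and the second is accepted, so `result` holds the rewrite produced from the second round of feedback.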

Figure 2. The "refine prompt" feature of Vertex AI Studio being used to update a prompt based on user feedback.

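Links

Explore model alignment for yourself:

- Run this Colab notebook, which uses Gemini to align various prompts for the open-weights Gemma 2 model using both alignment approaches.

- Try the "refine prompt" model alignment feature in Vertex AI Studio.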