This toolkit provides resources and tools to apply best practices for responsible use of open models, such as Gemma, including:

- Guidance on setting safety policies, safety tuning, safety classifiers, and model evaluation.

- The Learning Interpretability Tool for investigating and debugging Gemma's behavior in response to prompts.

- The LLM Comparator for running and visualizing comparative evaluation results.

- A methodology for building robust safety classifiers with minimal examples.

This version of the toolkit covers English text-to-text models only. To help make the toolkit more useful, you can send feedback through the feedback link at the bottom of the page.

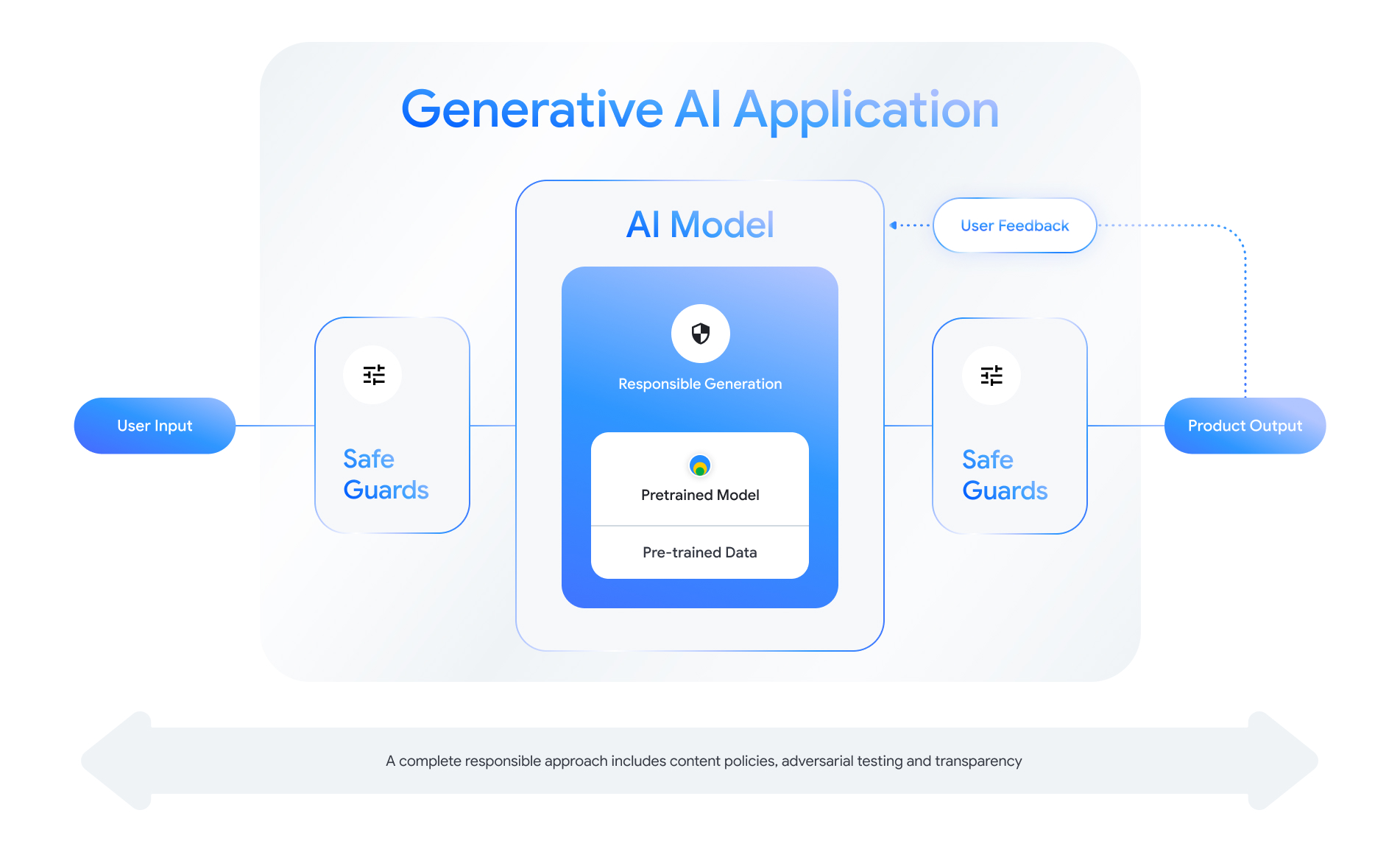

When building with Gemma, take a holistic approach to responsibility and consider the possible challenges at both the application and model levels. This toolkit covers risks and mitigation techniques that address safety, privacy, fairness, and accountability.

Check out the rest of this toolkit for more information and guidance: