Gemini API 通过文件搜索工具实现检索增强生成 ("RAG")。文件搜索功能会导入、分块和索引您的数据,以便根据您提供的提示快速检索相关信息。然后,这些信息会用作模型的上下文,让模型能够提供更准确、更相关的回答。

为了让开发者能够以简单实惠的方式使用文件搜索,我们决定在查询时免费提供文件存储和嵌入生成服务。您只需在首次为文件编制索引时支付创建嵌入内容的费用(按适用的嵌入模型费用)以及正常的 Gemini 模型输入 / 输出 token 费用。这种新的结算模式使得文件搜索工具的构建和扩展更加简单高效。

直接上传到文件搜索商店

以下示例展示了如何直接将文件上传到文件搜索存储区:

Python

from google import genai

from google.genai import types

import time

client = genai.Client()

# File name will be visible in citations

file_search_store = client.file_search_stores.create(config={'display_name': 'your-fileSearchStore-name'})

operation = client.file_search_stores.upload_to_file_search_store(

file='sample.txt',

file_search_store_name=file_search_store.name,

config={

'display_name' : 'display-file-name',

}

)

while not operation.done:

time.sleep(5)

operation = client.operations.get(operation)

response = client.models.generate_content(

model="gemini-3-flash-preview",

contents="""Can you tell me about [insert question]""",

config=types.GenerateContentConfig(

tools=[

types.Tool(

file_search=types.FileSearch(

file_search_store_names=[file_search_store.name]

)

)

]

)

)

print(response.text)

JavaScript

const { GoogleGenAI } = require('@google/genai');

const ai = new GoogleGenAI({});

async function run() {

// File name will be visible in citations

const fileSearchStore = await ai.fileSearchStores.create({

config: { displayName: 'your-fileSearchStore-name' }

});

let operation = await ai.fileSearchStores.uploadToFileSearchStore({

file: 'file.txt',

fileSearchStoreName: fileSearchStore.name,

config: {

displayName: 'file-name',

}

});

while (!operation.done) {

await new Promise(resolve => setTimeout(resolve, 5000));

operation = await ai.operations.get({ operation });

}

const response = await ai.models.generateContent({

model: "gemini-3-flash-preview",

contents: "Can you tell me about [insert question]",

config: {

tools: [

{

fileSearch: {

fileSearchStoreNames: [fileSearchStore.name]

}

}

]

}

});

console.log(response.text);

}

run();

如需了解详情,请参阅 uploadToFileSearchStore 的 API 参考文档。

导入文件

或者,您也可以上传现有文件,然后将其导入到文件搜索存储空间:

Python

from google import genai

from google.genai import types

import time

client = genai.Client()

# File name will be visible in citations

sample_file = client.files.upload(file='sample.txt', config={'name': 'display_file_name'})

file_search_store = client.file_search_stores.create(config={'display_name': 'your-fileSearchStore-name'})

operation = client.file_search_stores.import_file(

file_search_store_name=file_search_store.name,

file_name=sample_file.name

)

while not operation.done:

time.sleep(5)

operation = client.operations.get(operation)

response = client.models.generate_content(

model="gemini-3-flash-preview",

contents="""Can you tell me about [insert question]""",

config=types.GenerateContentConfig(

tools=[

types.Tool(

file_search=types.FileSearch(

file_search_store_names=[file_search_store.name]

)

)

]

)

)

print(response.text)

JavaScript

const { GoogleGenAI } = require('@google/genai');

const ai = new GoogleGenAI({});

async function run() {

// File name will be visible in citations

const sampleFile = await ai.files.upload({

file: 'sample.txt',

config: { name: 'file-name' }

});

const fileSearchStore = await ai.fileSearchStores.create({

config: { displayName: 'your-fileSearchStore-name' }

});

let operation = await ai.fileSearchStores.importFile({

fileSearchStoreName: fileSearchStore.name,

fileName: sampleFile.name

});

while (!operation.done) {

await new Promise(resolve => setTimeout(resolve, 5000));

operation = await ai.operations.get({ operation: operation });

}

const response = await ai.models.generateContent({

model: "gemini-3-flash-preview",

contents: "Can you tell me about [insert question]",

config: {

tools: [

{

fileSearch: {

fileSearchStoreNames: [fileSearchStore.name]

}

}

]

}

});

console.log(response.text);

}

run();

如需了解详情,请参阅 importFile 的 API 参考文档。

分块配置

将文件导入到文件搜索商店后,系统会自动将其分解为多个块,然后进行嵌入、编入索引并上传到您的文件搜索商店。如果您需要更精细地控制分块策略,可以指定 chunking_config 设置,以设置每个块的 token 数上限和重叠 token 数上限。

Python

from google import genai

from google.genai import types

import time

client = genai.Client()

operation = client.file_search_stores.upload_to_file_search_store(

file_search_store_name=file_search_store.name,

file_name=sample_file.name,

config={

'chunking_config': {

'white_space_config': {

'max_tokens_per_chunk': 200,

'max_overlap_tokens': 20

}

}

}

)

while not operation.done:

time.sleep(5)

operation = client.operations.get(operation)

print("Custom chunking complete.")

JavaScript

const { GoogleGenAI } = require('@google/genai');

const ai = new GoogleGenAI({});

let operation = await ai.fileSearchStores.uploadToFileSearchStore({

file: 'file.txt',

fileSearchStoreName: fileSearchStore.name,

config: {

displayName: 'file-name',

chunkingConfig: {

whiteSpaceConfig: {

maxTokensPerChunk: 200,

maxOverlapTokens: 20

}

}

}

});

while (!operation.done) {

await new Promise(resolve => setTimeout(resolve, 5000));

operation = await ai.operations.get({ operation });

}

console.log("Custom chunking complete.");

如需使用您的文件搜索商店,请将其作为工具传递给 generateContent 方法,如上传和导入示例所示。

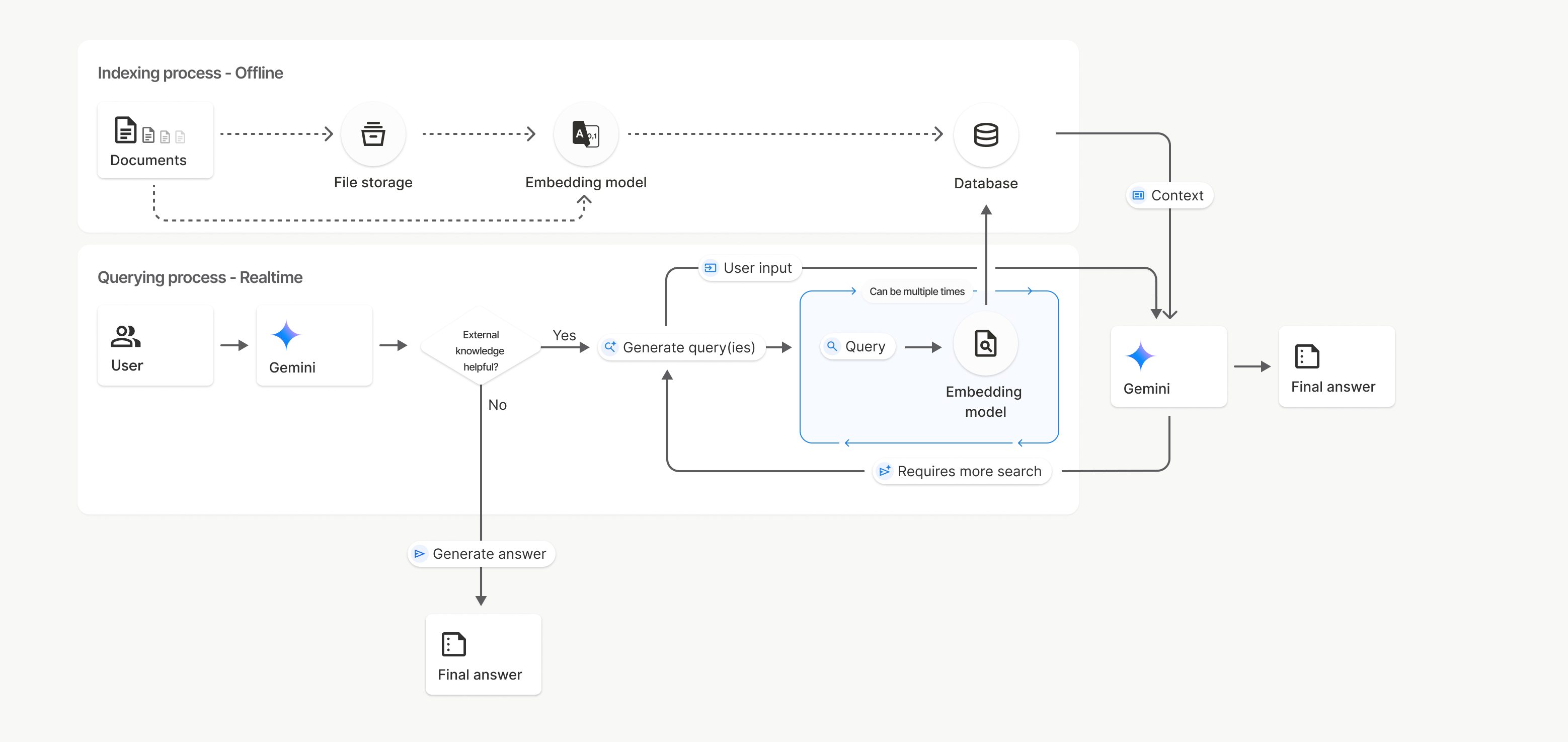

运作方式

文件搜索功能使用一种称为语义搜索的技术来查找与用户提示相关的信息。与基于标准关键字的搜索不同,语义搜索能够理解查询的含义和上下文。

导入文件后,系统会将其转换为称为嵌入的数值表示形式,用于捕捉文本的语义。这些嵌入会存储在专门的文件搜索数据库中。 当您提出查询时,系统也会将其转换为嵌入。然后,系统会执行文件搜索,从文件搜索存储区中查找最相似且最相关的文档块。

嵌入内容和文件没有存留时间 (TTL);它们会一直保留,直到手动删除或模型被弃用为止。

下面详细介绍了使用文件搜索 uploadToFileSearchStore API 的流程:

创建文件搜索存储区:文件搜索存储区包含来自文件的处理后数据。它是语义搜索将使用的嵌入的持久性容器。

上传文件并导入到文件搜索存储区:同时上传文件并将结果导入到文件搜索存储区。这会创建一个临时

File对象,该对象是对原始文档的引用。然后,该数据会被分块、转换为 File Search 嵌入并编入索引。File对象会在 48 小时后被删除,而导入到文件搜索存储区的数据会无限期存储,直到您选择删除为止。使用文件搜索进行查询:最后,您可以在

generateContent调用中使用FileSearch工具。在工具配置中,您需要指定一个FileSearchRetrievalResource,该FileSearchRetrievalResource指向您要搜索的FileSearchStore。这会指示模型对相应的文件搜索存储区执行语义搜索,以查找相关信息来为回答提供依据。

在此图中,从文档到嵌入模型(使用 gemini-embedding-001)的虚线表示 uploadToFileSearchStore API(绕过文件存储)。否则,使用 Files API 单独创建文件,然后导入文件,会将索引编制流程从 Documents 移至 File storage,然后再移至 Embedding model。

文件搜索存储区

文件搜索存储区是文档嵌入的容器。虽然通过 File API 上传的原始文件会在 48 小时后被删除,但导入到文件搜索存储区的数据会无限期存储,直到您手动将其删除。您可以创建多个文件搜索存储区来整理文档。借助 FileSearchStore API,您可以创建、列出、获取和删除文件搜索存储区,从而管理这些存储区。文件搜索存储区名称具有全局范围。

以下是一些有关如何管理文件搜索商店的示例:

Python

file_search_store = client.file_search_stores.create(config={'display_name': 'my-file_search-store-123'})

for file_search_store in client.file_search_stores.list():

print(file_search_store)

my_file_search_store = client.file_search_stores.get(name='fileSearchStores/my-file_search-store-123')

client.file_search_stores.delete(name='fileSearchStores/my-file_search-store-123', config={'force': True})

JavaScript

const fileSearchStore = await ai.fileSearchStores.create({

config: { displayName: 'my-file_search-store-123' }

});

const fileSearchStores = await ai.fileSearchStores.list();

for await (const store of fileSearchStores) {

console.log(store);

}

const myFileSearchStore = await ai.fileSearchStores.get({

name: 'fileSearchStores/my-file_search-store-123'

});

await ai.fileSearchStores.delete({

name: 'fileSearchStores/my-file_search-store-123',

config: { force: true }

});

REST

curl -X POST "https://generativelanguage.googleapis.com/v1beta/fileSearchStores?key=${GEMINI_API_KEY}" \

-H "Content-Type: application/json"

-d '{ "displayName": "My Store" }'

curl "https://generativelanguage.googleapis.com/v1beta/fileSearchStores?key=${GEMINI_API_KEY}" \

curl "https://generativelanguage.googleapis.com/v1beta/fileSearchStores/my-file_search-store-123?key=${GEMINI_API_KEY}"

curl -X DELETE "https://generativelanguage.googleapis.com/v1beta/fileSearchStores/my-file_search-store-123?key=${GEMINI_API_KEY}"

文件搜索文档

您可以使用 File Search Documents API 管理文件存储区中的各个文档,以 list 文件搜索存储区中的每个文档、get 文档的相关信息,以及按名称 delete 文档。

Python

for document_in_store in client.file_search_stores.documents.list(parent='fileSearchStores/my-file_search-store-123'):

print(document_in_store)

file_search_document = client.file_search_stores.documents.get(name='fileSearchStores/my-file_search-store-123/documents/my_doc')

print(file_search_document)

client.file_search_stores.documents.delete(name='fileSearchStores/my-file_search-store-123/documents/my_doc')

JavaScript

const documents = await ai.fileSearchStores.documents.list({

parent: 'fileSearchStores/my-file_search-store-123'

});

for await (const doc of documents) {

console.log(doc);

}

const fileSearchDocument = await ai.fileSearchStores.documents.get({

name: 'fileSearchStores/my-file_search-store-123/documents/my_doc'

});

await ai.fileSearchStores.documents.delete({

name: 'fileSearchStores/my-file_search-store-123/documents/my_doc'

});

REST

curl "https://generativelanguage.googleapis.com/v1beta/fileSearchStores/my-file_search-store-123/documents?key=${GEMINI_API_KEY}"

curl "https://generativelanguage.googleapis.com/v1beta/fileSearchStores/my-file_search-store-123/documents/my_doc?key=${GEMINI_API_KEY}"

curl -X DELETE "https://generativelanguage.googleapis.com/v1beta/fileSearchStores/my-file_search-store-123/documents/my_doc?key=${GEMINI_API_KEY}"

文件元数据

您可以为文件添加自定义元数据,以便过滤文件或提供更多背景信息。元数据是一组键值对。

Python

op = client.file_search_stores.import_file(

file_search_store_name=file_search_store.name,

file_name=sample_file.name,

custom_metadata=[

{"key": "author", "string_value": "Robert Graves"},

{"key": "year", "numeric_value": 1934}

]

)

JavaScript

let operation = await ai.fileSearchStores.importFile({

fileSearchStoreName: fileSearchStore.name,

fileName: sampleFile.name,

config: {

customMetadata: [

{ key: "author", stringValue: "Robert Graves" },

{ key: "year", numericValue: 1934 }

]

}

});

如果您在文件搜索存储区中有多个文档,并且只想搜索其中的一部分,此参数会非常有用。

Python

response = client.models.generate_content(

model="gemini-3-flash-preview",

contents="Tell me about the book 'I, Claudius'",

config=types.GenerateContentConfig(

tools=[

types.Tool(

file_search=types.FileSearch(

file_search_store_names=[file_search_store.name],

metadata_filter="author=Robert Graves",

)

)

]

)

)

print(response.text)

JavaScript

const response = await ai.models.generateContent({

model: "gemini-3-flash-preview",

contents: "Tell me about the book 'I, Claudius'",

config: {

tools: [

{

fileSearch: {

fileSearchStoreNames: [fileSearchStore.name],

metadataFilter: 'author="Robert Graves"',

}

}

]

}

});

console.log(response.text);

REST

curl "https://generativelanguage.googleapis.com/v1beta/models/gemini-3-flash-preview:generateContent?key=${GEMINI_API_KEY}" \

-H 'Content-Type: application/json' \

-X POST \

-d '{

"contents": [{

"parts":[{"text": "Tell me about the book I, Claudius"}]

}],

"tools": [{

"file_search": {

"file_search_store_names":["'$STORE_NAME'"],

"metadata_filter": "author = \"Robert Graves\""

}

}]

}' 2> /dev/null > response.json

cat response.json

有关为 metadata_filter 实现列表过滤条件语法的指南,请参阅 google.aip.dev/160

引用

使用文件搜索功能时,模型的回答可能会包含引用,指明上传的文档中的哪些部分用于生成回答。这有助于进行事实核查和验证。

您可以通过响应的 grounding_metadata 属性访问引用信息。

Python

print(response.candidates[0].grounding_metadata)

JavaScript

console.log(JSON.stringify(response.candidates?.[0]?.groundingMetadata, null, 2));

结构化输出

从 Gemini 3 模型开始,您可以将文件搜索工具与结构化输出相结合。

Python

from pydantic import BaseModel, Field

class Money(BaseModel):

amount: str = Field(description="The numerical part of the amount.")

currency: str = Field(description="The currency of amount.")

response = client.models.generate_content(

model="gemini-3-flash-preview",

contents="What is the minimum hourly wage in Tokyo right now?",

config=types.GenerateContentConfig(

tools=[

types.Tool(

file_search=types.FileSearch(

file_search_store_names=[file_search_store.name]

)

)

],

responseMimeType="application/json",

responseJsonSchema= Money.model_json_schema()

)

)

result = Money.model_validate_json(response.text)

print(result)

JavaScript

import { z } from "zod";

import { zodToJsonSchema } from "zod-to-json-schema";

const moneySchema = z.object({

amount: z.string().describe("The numerical part of the amount."),

currency: z.string().describe("The currency of amount."),

});

async function run() {

const response = await ai.models.generateContent({

model: "gemini-3-flash-preview",

contents: "What is the minimum hourly wage in Tokyo right now?",

config: {

tools: [

{

fileSearch: {

fileSearchStoreNames: [file_search_store.name],

},

},

],

responseMimeType: "application/json",

responseJsonSchema: zodToJsonSchema(moneySchema),

},

});

const result = moneySchema.parse(JSON.parse(response.text));

console.log(result);

}

run();

REST

curl "https://generativelanguage.googleapis.com/v1beta/models/gemini-3-flash-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-X POST \

-d "{

\"contents\": [{

\"parts\": [{\"text\": \"What is the minimum hourly wage in Tokyo right now?\"}]

}],

\"tools\": [

{

\"fileSearch\": {

\"fileSearchStoreNames\": [\"$STORE_NAME\"]

}

}

],

\"generationConfig\": {

\"responseMimeType\": \"application/json\",

\"responseJsonSchema\": {

\"type\": \"object\",

\"properties\": {

\"amount\": {\"type\": \"string\", \"description\": \"The numerical part of the amount.\"},

\"currency\": {\"type\": \"string\", \"description\": \"The currency of amount.\"}

},

\"required\": [\"amount\", \"currency\"]

}

}

}"

支持的模型

以下模型支持文件搜索:

支持的文件类型

文件搜索支持多种文件格式,详见以下各部分。

应用文件类型

application/dartapplication/ecmascriptapplication/jsonapplication/ms-javaapplication/mswordapplication/pdfapplication/sqlapplication/typescriptapplication/vnd.curlapplication/vnd.dartapplication/vnd.ibm.secure-containerapplication/vnd.jupyterapplication/vnd.ms-excelapplication/vnd.oasis.opendocument.textapplication/vnd.openxmlformats-officedocument.presentationml.presentationapplication/vnd.openxmlformats-officedocument.spreadsheetml.sheetapplication/vnd.openxmlformats-officedocument.wordprocessingml.documentapplication/vnd.openxmlformats-officedocument.wordprocessingml.templateapplication/x-cshapplication/x-hwpapplication/x-hwp-v5application/x-latexapplication/x-phpapplication/x-powershellapplication/x-shapplication/x-shellscriptapplication/x-texapplication/x-zshapplication/xmlapplication/zip

文本文件类型

text/1d-interleaved-parityfectext/REDtext/SGMLtext/cache-manifesttext/calendartext/cqltext/cql-extensiontext/cql-identifiertext/csstext/csvtext/csv-schematext/dnstext/encaprtptext/enrichedtext/exampletext/fhirpathtext/flexfectext/fwdredtext/gff3text/grammar-ref-listtext/hl7v2text/htmltext/javascripttext/jcr-cndtext/jsxtext/markdowntext/mizartext/n3text/parameterstext/parityfectext/phptext/plaintext/provenance-notationtext/prs.fallenstein.rsttext/prs.lines.tagtext/prs.prop.logictext/raptorfectext/rfc822-headerstext/rtftext/rtp-enc-aescm128text/rtploopbacktext/rtxtext/sgmltext/shaclctext/shextext/spdxtext/stringstext/t140text/tab-separated-valuestext/texmacstext/trofftext/tsvtext/tsxtext/turtletext/ulpfectext/uri-listtext/vcardtext/vnd.DMClientScripttext/vnd.IPTC.NITFtext/vnd.IPTC.NewsMLtext/vnd.atext/vnd.abctext/vnd.ascii-arttext/vnd.curltext/vnd.debian.copyrighttext/vnd.dvb.subtitletext/vnd.esmertec.theme-descriptortext/vnd.exchangeabletext/vnd.familysearch.gedcomtext/vnd.ficlab.flttext/vnd.flytext/vnd.fmi.flexstortext/vnd.gmltext/vnd.graphviztext/vnd.hanstext/vnd.hgltext/vnd.in3d.3dmltext/vnd.in3d.spottext/vnd.latex-ztext/vnd.motorola.reflextext/vnd.ms-mediapackagetext/vnd.net2phone.commcenter.commandtext/vnd.radisys.msml-basic-layouttext/vnd.senx.warpscripttext/vnd.sositext/vnd.sun.j2me.app-descriptortext/vnd.trolltech.linguisttext/vnd.wap.sitext/vnd.wap.sltext/vnd.wap.wmltext/vnd.wap.wmlscripttext/vtttext/wgsltext/x-asmtext/x-bibtextext/x-bootext/x-ctext/x-c++hdrtext/x-c++srctext/x-cassandratext/x-chdrtext/x-coffeescripttext/x-componenttext/x-cshtext/x-csharptext/x-csrctext/x-cudatext/x-dtext/x-difftext/x-dsrctext/x-emacs-lisptext/x-erlangtext/x-gff3text/x-gotext/x-haskelltext/x-javatext/x-java-propertiestext/x-java-sourcetext/x-kotlintext/x-lilypondtext/x-lisptext/x-literate-haskelltext/x-luatext/x-moctext/x-objcsrctext/x-pascaltext/x-pcs-gcdtext/x-perltext/x-perl-scripttext/x-pythontext/x-python-scripttext/x-r-markdowntext/x-rsrctext/x-rsttext/x-ruby-scripttext/x-rusttext/x-sasstext/x-scalatext/x-schemetext/x-script.pythontext/x-scsstext/x-setexttext/x-sfvtext/x-shtext/x-siestatext/x-sostext/x-sqltext/x-swifttext/x-tcltext/x-textext/x-vbasictext/x-vcalendartext/xmltext/xml-dtdtext/xml-external-parsed-entitytext/yaml

限制

- Live API:Live API 不支持文件搜索。

- 工具不兼容:目前,文件搜索无法与其他工具(例如依托 Google 搜索进行接地、网址上下文等)结合使用。

速率限制

为了确保服务稳定性,文件搜索 API 具有以下限制:

- 文件大小上限 / 每个文档的限制:100 MB

- 项目文件搜索存储空间总大小(取决于用户层级):

- 免费:1 GB

- 1 级:10 GB

- 第 2 级:100 GB

- 3 级:1 TB

- 建议:将每个文件搜索存储空间的大小限制在 20 GB 以下,以确保最佳检索延迟时间。

价格

后续步骤

- 访问 File Search Stores 和 File Search Documents 的 API 参考文档。