-

Nano Banana Pro によって生成されました プロンプト: 「ロンドンの最も象徴的なランドマークと建築要素を特徴とする、45 度の俯瞰図の等角投影のミニチュア 3D 漫画のシーンを鮮明に表現してください。リアルな PBR マテリアルと、優しくリアルな照明と影を使用して、柔らかく洗練されたテクスチャを使用します。現在の気象条件を都市環境に直接統合して、没入感のある雰囲気を作り出します。すっきりとしたミニマルな構図で、柔らかい単色の背景を使用します。中央上部に「London」というタイトルを大きな太字で配置し、その下に目立つ天気アイコン、日付(小さいテキスト)、気温(中くらいのテキスト)を配置します。すべてのテキストは、一貫した間隔で中央に配置する必要があります。建物の最上部とわずかに重なることもあります。」 -

Nano Banana Pro によって生成されました -

Nano Banana Pro によって生成されました -

Nano Banana Pro によって生成されました プロンプト: 「光沢のある雑誌の表紙の写真。表紙には「Nano Banana Pro」という大きな太字の文字が書かれている。」テキストはセリフフォントで、ビュー全体に表示されます。他のテキストは含めないでください。テキストの前に、美しい衣装を着た人物のポートレートがあります。バーコードと価格とともに、問題番号と今日の日付を隅に配置します。雑誌は、デザイナーズ ショップ内のレンガの壁に沿って置かれた棚にあります。マネキンが同じ服装をしています。」AI Studio でプロフェッショナルな商品写真を作成する -

Nano Banana Pro によって生成されました -

Nano Banana Pro によって生成されました -

Nano Banana Pro によって生成されました プロンプト: 「かわいい犬を表すアイコン。背景は白です。カラフルで触覚的な 3D スタイルでアイコンを作成します。テキストなし。」AI Studio の Nano Banana でアイコン、ステッカー、アセットを作成する -

Nano Banana Pro によって生成されました プロンプト: 「完全にアイソメトリックな写真を作成して。ミニチュアではなく、たまたま完全にアイソメトリックになった写真です。美しいモダンなオフィス内部の写真です。」

Nano Banana は、Gemini のネイティブ画像生成機能の名称です。Gemini は、テキスト、画像、またはその両方を組み合わせて、会話形式で画像を生成して処理できます。これにより、これまでにない制御でビジュアルを作成、編集、反復処理できます。

Nano Banana は、Gemini API で使用できる 2 つの異なるモデルを指します。

- Nano Banana: Gemini 2.5 Flash Image モデル(

gemini-2.5-flash-image)。このモデルは速度と効率性を重視して設計されており、大量の低レイテンシ タスク向けに最適化されています。 - Nano Banana Pro: Gemini 3 Pro Image プレビュー モデル(

gemini-3-pro-image-preview)。このモデルは、高度な推論(「思考」)を利用して複雑な指示に従い、高忠実度のテキストをレンダリングすることで、プロフェッショナルなアセット制作向けに設計されています。

すべての生成画像には SynthID の透かしが埋め込まれています。

画像生成(テキスト画像変換)

Python

from google import genai

from google.genai import types

from PIL import Image

client = genai.Client()

prompt = ("Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme")

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents=[prompt],

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("gemini-native-image.png", buffer);

console.log("Image saved as gemini-native-image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("Create a picture of a nano banana dish in a " +

" fancy restaurant with a Gemini theme"),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "gemini_generated_image.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class TextToImage {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("_01_generated_image.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"}

]

}]

}'

画像編集(テキストと画像による画像変換)

リマインダー: アップロードする画像に必要な権利をすべて所有していることをご確認ください。他者の権利を侵害するコンテンツ(他人を欺く、嫌がらせをする、または危害を加える動画や画像など)を生成しないでください。この生成 AI 機能の使用は、Google の使用禁止に関するポリシーの対象となります。

画像を指定し、テキスト プロンプトを使用して要素の追加、削除、変更、スタイルの変更、カラー グレーディングの調整を行います。

次の例は、base64 エンコードされた画像をアップロードする方法を示しています。複数の画像、大きなペイロード、サポートされている MIME タイプについては、画像認識のページをご覧ください。

Python

from google import genai

from google.genai import types

from PIL import Image

client = genai.Client()

prompt = (

"Create a picture of my cat eating a nano-banana in a "

"fancy restaurant under the Gemini constellation",

)

image = Image.open("/path/to/cat_image.png")

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents=[prompt, image],

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const imagePath = "path/to/cat_image.png";

const imageData = fs.readFileSync(imagePath);

const base64Image = imageData.toString("base64");

const prompt = [

{ text: "Create a picture of my cat eating a nano-banana in a" +

"fancy restaurant under the Gemini constellation" },

{

inlineData: {

mimeType: "image/png",

data: base64Image,

},

},

];

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("gemini-native-image.png", buffer);

console.log("Image saved as gemini-native-image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

imagePath := "/path/to/cat_image.png"

imgData, _ := os.ReadFile(imagePath)

parts := []*genai.Part{

genai.NewPartFromText("Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation"),

&genai.Part{

InlineData: &genai.Blob{

MIMEType: "image/png",

Data: imgData,

},

},

}

contents := []*genai.Content{

genai.NewContentFromParts(parts, genai.RoleUser),

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

contents,

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "gemini_generated_image.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class TextAndImageToImage {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

Content.fromParts(

Part.fromText("""

Create a picture of my cat eating a nano-banana in

a fancy restaurant under the Gemini constellation

"""),

Part.fromBytes(

Files.readAllBytes(

Path.of("src/main/resources/cat.jpg")),

"image/jpeg")),

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("gemini_generated_image.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d "{

\"contents\": [{

\"parts\":[

{\"text\": \"'Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation\"},

{

\"inline_data\": {

\"mime_type\":\"image/jpeg\",

\"data\": \"<BASE64_IMAGE_DATA>\"

}

}

]

}]

}"

マルチターンの画像編集

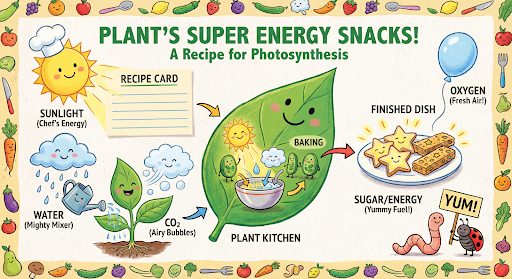

会話形式で画像の生成と編集を続けます。画像に対して反復処理を行うには、チャットまたはマルチターンの会話をおすすめします。次の例は、光合成に関するインフォグラフィックを生成するプロンプトを示しています。

Python

from google import genai

from google.genai import types

client = genai.Client()

chat = client.chats.create(

model="gemini-3-pro-image-preview",

config=types.GenerateContentConfig(

response_modalities=['TEXT', 'IMAGE'],

tools=[{"google_search": {}}]

)

)

message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

response = chat.send_message(message)

for part in response.parts:

if part.text is not None:

print(part.text)

elif image:= part.as_image():

image.save("photosynthesis.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

const ai = new GoogleGenAI({});

async function main() {

const chat = ai.chats.create({

model: "gemini-3-pro-image-preview",

config: {

responseModalities: ['TEXT', 'IMAGE'],

tools: [{googleSearch: {}}],

},

});

await main();

const message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

let response = await chat.sendMessage({message});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photosynthesis.png", buffer);

console.log("Image saved as photosynthesis.png");

}

}

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

defer client.Close()

model := client.GenerativeModel("gemini-3-pro-image-preview")

model.GenerationConfig = &pb.GenerationConfig{

ResponseModalities: []pb.ResponseModality{genai.Text, genai.Image},

}

chat := model.StartChat()

message := "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

resp, err := chat.SendMessage(ctx, genai.Text(message))

if err != nil {

log.Fatal(err)

}

for _, part := range resp.Candidates[0].Content.Parts {

if txt, ok := part.(genai.Text); ok {

fmt.Printf("%s", string(txt))

} else if img, ok := part.(genai.ImageData); ok {

err := os.WriteFile("photosynthesis.png", img.Data, 0644)

if err != nil {

log.Fatal(err)

}

}

}

}

Java

import com.google.genai.Chat;

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.RetrievalConfig;

import com.google.genai.types.Tool;

import com.google.genai.types.ToolConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class MultiturnImageEditing {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.tools(Tool.builder()

.googleSearch(GoogleSearch.builder().build())

.build())

.build();

Chat chat = client.chats.create("gemini-3-pro-image-preview", config);

GenerateContentResponse response = chat.sendMessage("""

Create a vibrant infographic that explains photosynthesis

as if it were a recipe for a plant's favorite food.

Show the "ingredients" (sunlight, water, CO2)

and the "finished dish" (sugar/energy).

The style should be like a page from a colorful

kids' cookbook, suitable for a 4th grader.

""");

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photosynthesis.png"), blob.data().get());

}

}

}

// ...

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"role": "user",

"parts": [

{"text": "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plants favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids cookbook, suitable for a 4th grader."}

]

}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"]

}

}'

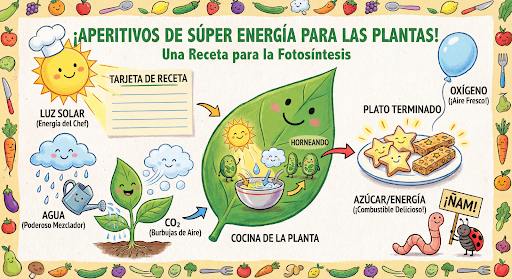

同じチャットを使用して、グラフィックの言語をスペイン語に変更できます。

Python

message = "Update this infographic to be in Spanish. Do not change any other elements of the image."

aspect_ratio = "16:9" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "2K" # "1K", "2K", "4K"

response = chat.send_message(message,

config=types.GenerateContentConfig(

image_config=types.ImageConfig(

aspect_ratio=aspect_ratio,

image_size=resolution

),

))

for part in response.parts:

if part.text is not None:

print(part.text)

elif image:= part.as_image():

image.save("photosynthesis_spanish.png")

JavaScript

const message = 'Update this infographic to be in Spanish. Do not change any other elements of the image.';

const aspectRatio = '16:9';

const resolution = '2K';

let response = await chat.sendMessage({

message,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

tools: [{googleSearch: {}}],

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photosynthesis2.png", buffer);

console.log("Image saved as photosynthesis2.png");

}

}

Go

message = "Update this infographic to be in Spanish. Do not change any other elements of the image."

aspect_ratio = "16:9" // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "2K" // "1K", "2K", "4K"

model.GenerationConfig.ImageConfig = &pb.ImageConfig{

AspectRatio: aspect_ratio,

ImageSize: resolution,

}

resp, err = chat.SendMessage(ctx, genai.Text(message))

if err != nil {

log.Fatal(err)

}

for _, part := range resp.Candidates[0].Content.Parts {

if txt, ok := part.(genai.Text); ok {

fmt.Printf("%s", string(txt))

} else if img, ok := part.(genai.ImageData); ok {

err := os.WriteFile("photosynthesis_spanish.png", img.Data, 0644)

if err != nil {

log.Fatal(err)

}

}

}

Java

String aspectRatio = "16:9"; // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

String resolution = "2K"; // "1K", "2K", "4K"

config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio(aspectRatio)

.imageSize(resolution)

.build())

.build();

response = chat.sendMessage(

"Update this infographic to be in Spanish. " +

"Do not change any other elements of the image.",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photosynthesis_spanish.png"), blob.data().get());

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"contents": [

{

"role": "user",

"parts": [{"text": "Create a vibrant infographic that explains photosynthesis..."}]

},

{

"role": "model",

"parts": [{"inline_data": {"mime_type": "image/png", "data": "<PREVIOUS_IMAGE_DATA>"}}]

},

{

"role": "user",

"parts": [{"text": "Update this infographic to be in Spanish. Do not change any other elements of the image."}]

}

],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {

"aspectRatio": "16:9",

"imageSize": "2K"

}

}

}'

Gemini 3 Pro Image の新機能

Gemini 3 Pro Image(gemini-3-pro-image-preview)は、プロフェッショナルなアセット制作に最適化された最先端の画像生成および編集モデルです。高度な推論を通じて最も困難なワークフローに取り組むように設計されており、複雑なマルチターンの作成と変更タスクに優れています。

- 高解像度出力: 1K、2K、4K のビジュアルを生成する機能が組み込まれています。

- 高度なテキスト レンダリング: インフォグラフィック、メニュー、図表、マーケティング アセット用に、読みやすくスタイリッシュなテキストを生成できます。

- Google 検索によるグラウンディング: モデルは Google 検索をツールとして使用して、事実を確認し、リアルタイム データ(現在の天気図、株価チャート、最近のイベントなど)に基づいて画像生成できます。

- 思考モード: モデルは「思考」プロセスを使用して複雑なプロンプトを推論します。最終的な高品質の出力を生成する前に、構成を調整するための中間的な「思考画像」(バックエンドで表示されるが、課金されない)を生成します。

- 最大 14 枚の参照画像: 最大 14 枚の参照画像を組み合わせて最終的な画像を生成できるようになりました。

最大 14 枚の参照画像を使用する

Gemini 3 Pro Preview では、最大 14 枚の参照画像を組み合わせることができます。これらの 14 枚の画像には、次のものを含めることができます。

- 最終的な画像に含める高忠実度のオブジェクトの画像(最大 6 枚)

キャラクターの一貫性を維持するための人物の画像(最大 5 枚)

Python

from google import genai

from google.genai import types

from PIL import Image

prompt = "An office group photo of these people, they are making funny faces."

aspect_ratio = "5:4" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "2K" # "1K", "2K", "4K"

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents=[

prompt,

Image.open('person1.png'),

Image.open('person2.png'),

Image.open('person3.png'),

Image.open('person4.png'),

Image.open('person5.png'),

],

config=types.GenerateContentConfig(

response_modalities=['TEXT', 'IMAGE'],

image_config=types.ImageConfig(

aspect_ratio=aspect_ratio,

image_size=resolution

),

)

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif image:= part.as_image():

image.save("office.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'An office group photo of these people, they are making funny faces.';

const aspectRatio = '5:4';

const resolution = '2K';

const contents = [

{ text: prompt },

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile1,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile2,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile3,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile4,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile5,

},

}

];

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: contents,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

defer client.Close()

model := client.GenerativeModel("gemini-3-pro-image-preview")

model.GenerationConfig = &pb.GenerationConfig{

ResponseModalities: []pb.ResponseModality{genai.Text, genai.Image},

ImageConfig: &pb.ImageConfig{

AspectRatio: "5:4",

ImageSize: "2K",

},

}

img1, err := os.ReadFile("person1.png")

if err != nil { log.Fatal(err) }

img2, err := os.ReadFile("person2.png")

if err != nil { log.Fatal(err) }

img3, err := os.ReadFile("person3.png")

if err != nil { log.Fatal(err) }

img4, err := os.ReadFile("person4.png")

if err != nil { log.Fatal(err) }

img5, err := os.ReadFile("person5.png")

if err != nil { log.Fatal(err) }

parts := []genai.Part{

genai.Text("An office group photo of these people, they are making funny faces."),

genai.ImageData{MIMEType: "image/png", Data: img1},

genai.ImageData{MIMEType: "image/png", Data: img2},

genai.ImageData{MIMEType: "image/png", Data: img3},

genai.ImageData{MIMEType: "image/png", Data: img4},

genai.ImageData{MIMEType: "image/png", Data: img5},

}

resp, err := model.GenerateContent(ctx, parts...)

if err != nil {

log.Fatal(err)

}

for _, part := range resp.Candidates[0].Content.Parts {

if txt, ok := part.(genai.Text); ok {

fmt.Printf("%s", string(txt))

} else if img, ok := part.(genai.ImageData); ok {

err := os.WriteFile("office.png", img.Data, 0644)

if err != nil {

log.Fatal(err)

}

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class GroupPhoto {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("5:4")

.imageSize("2K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

Content.fromParts(

Part.fromText("An office group photo of these people, they are making funny faces."),

Part.fromBytes(Files.readAllBytes(Path.of("person1.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person2.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person3.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person4.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person5.png")), "image/png")

), config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("office.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d "{

\"contents\": [{

\"parts\":[

{\"text\": \"An office group photo of these people, they are making funny faces.\"},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_1>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_2>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_3>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_4>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_5>\"}}

]

}],

\"generationConfig\": {

\"responseModalities\": [\"TEXT\", \"IMAGE\"],

\"imageConfig\": {

\"aspectRatio\": \"5:4\",

\"imageSize\": \"2K\"

}

}

}"

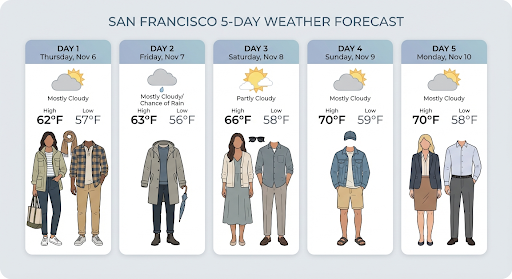

Google 検索によるグラウンディング

Google 検索ツールを使用して、天気予報、株価チャート、最近の出来事などのリアルタイム情報に基づいて画像を生成します。

画像生成で Google 検索によるグラウンディングを使用する場合、画像ベースの検索結果は生成モデルに渡されず、レスポンスから除外されます。

Python

from google import genai

prompt = "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"

aspect_ratio = "16:9" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents=prompt,

config=types.GenerateContentConfig(

response_modalities=['Text', 'Image'],

image_config=types.ImageConfig(

aspect_ratio=aspect_ratio,

),

tools=[{"google_search": {}}]

)

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif image:= part.as_image():

image.save("weather.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt = 'Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day';

const aspectRatio = '16:9';

const resolution = '2K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

tools: [{ googleSearch: {} }]

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.Tool;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class SearchGrounding {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.build())

.tools(Tool.builder()

.googleSearch(GoogleSearch.builder().build())

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Visualize the current weather forecast for the next 5 days

in San Francisco as a clean, modern weather chart.

Add a visual on what I should wear each day

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("weather.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "16:9"}

}

}'

レスポンスには、次の必須フィールドを含む groundingMetadata が含まれます。

searchEntryPoint: 必要な検索候補をレンダリングするための HTML と CSS が含まれています。groundingChunks: 生成された画像のグラウンディングに使用された上位 3 つのウェブソースを返します

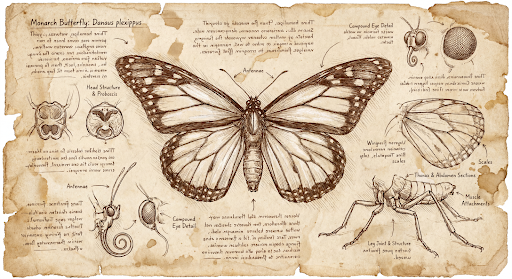

最大 4K 解像度の画像を生成する

Gemini 3 Pro Image はデフォルトで 1K 画像を生成しますが、2K 画像と 4K 画像を出力することもできます。高解像度のアセットを生成するには、generation_config で image_size を指定します。

大文字の「K」を使用する必要があります(例: 1K、2K、4K)。小文字のパラメータ(例: 1k)は拒否されます。

Python

from google import genai

from google.genai import types

prompt = "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

aspect_ratio = "1:1" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "1K" # "1K", "2K", "4K"

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents=prompt,

config=types.GenerateContentConfig(

response_modalities=['TEXT', 'IMAGE'],

image_config=types.ImageConfig(

aspect_ratio=aspect_ratio,

image_size=resolution

),

)

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif image:= part.as_image():

image.save("butterfly.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English.';

const aspectRatio = '1:1';

const resolution = '1K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

defer client.Close()

model := client.GenerativeModel("gemini-3-pro-image-preview")

model.GenerationConfig = &pb.GenerationConfig{

ResponseModalities: []pb.ResponseModality{genai.Text, genai.Image},

ImageConfig: &pb.ImageConfig{

AspectRatio: "1:1",

ImageSize: "1K",

},

}

prompt := "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

resp, err := model.GenerateContent(ctx, genai.Text(prompt))

if err != nil {

log.Fatal(err)

}

for _, part := range resp.Candidates[0].Content.Parts {

if txt, ok := part.(genai.Text); ok {

fmt.Printf("%s", string(txt))

} else if img, ok := part.(genai.ImageData); ok {

err := os.WriteFile("butterfly.png", img.Data, 0644)

if err != nil {

log.Fatal(err)

}

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.Tool;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class HiRes {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.imageSize("4K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Da Vinci style anatomical sketch of a dissected Monarch butterfly.

Detailed drawings of the head, wings, and legs on textured

parchment with notes in English.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("butterfly.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "1:1", "imageSize": "1K"}

}

}'

このプロンプトから生成された画像の例を次に示します。

思考プロセス

Gemini 3 Pro Image プレビュー版モデルは思考モデルであり、複雑なプロンプトに対して推論プロセス(「思考」)を使用します。この機能はデフォルトで有効になっており、API で無効にすることはできません。思考プロセスの詳細については、Gemini の思考ガイドをご覧ください。

このモデルは、構図とロジックをテストするために最大 2 つの中間画像を生成します。Thinking 内の最後の画像は、最終的にレンダリングされた画像でもあります。

最終的な画像が生成されるまでの思考を確認できます。

Python

for part in response.parts:

if part.thought:

if part.text:

print(part.text)

elif image:= part.as_image():

image.show()

JavaScript

for (const part of response.candidates[0].content.parts) {

if (part.thought) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, 'base64');

fs.writeFileSync('image.png', buffer);

console.log('Image saved as image.png');

}

}

}

思考シグネチャ

思考シグネチャは、モデルの内部的な思考プロセスを暗号化したもので、マルチターンのやり取りで推論コンテキストを保持するために使用されます。すべてのレスポンスに thought_signature フィールドが含まれます。原則として、モデルのレスポンスで思考シグネチャを受け取った場合は、次のターンで会話履歴を送信するときに、受け取ったとおりに渡す必要があります。思考シグネチャを循環させないと、レスポンスが失敗する可能性があります。署名全般について詳しくは、思考署名のドキュメントをご覧ください。

思考シグネチャの仕組みは次のとおりです。

- レスポンスの一部である画像

mimetypeを含むすべてのinline_data部分には署名が必要です。 - 考えの直後に(画像の前に)テキスト部分がある場合は、最初のテキスト部分にも署名が必要です。

- 画像

mimetypeを含むinline_data部分が思考の一部である場合、署名は含まれません。

次のコードは、思考シグネチャが含まれる場所の例を示しています。

[

{

"inline_data": {

"data": "<base64_image_data_0>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_1>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_2>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"text": "Here is a step-by-step guide to baking macarons, presented in three separate images.\n\n### Step 1: Piping the Batter\n\nThe first step after making your macaron batter is to pipe it onto a baking sheet. This requires a steady hand to create uniform circles.\n\n",

"thought_signature": "<Signature_A>" // The first non-thought part always has a signature

},

{

"inline_data": {

"data": "<base64_image_data_3>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_B>" // All image parts have a signatures

},

{

"text": "\n\n### Step 2: Baking and Developing Feet\n\nOnce piped, the macarons are baked in the oven. A key sign of a successful bake is the development of \"feet\"—the ruffled edge at the base of each macaron shell.\n\n"

// Follow-up text parts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_4>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_C>" // All image parts have a signatures

},

{

"text": "\n\n### Step 3: Assembling the Macaron\n\nThe final step is to pair the cooled macaron shells by size and sandwich them together with your desired filling, creating the classic macaron dessert.\n\n"

},

{

"inline_data": {

"data": "<base64_image_data_5>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_D>" // All image parts have a signatures

}

]

その他の画像生成モード

Gemini は、プロンプトの構造とコンテキストに基づいて、次のような他の画像操作モードをサポートしています。

- テキスト画像変換とテキスト(インターリーブ): 関連するテキストを含む画像を出力します。

- プロンプトの例: 「パエリアのレシピをイラスト付きで生成してください。」

- 画像とテキスト画像変換とテキスト(インターリーブ): 入力画像とテキストを使用して、関連する新しい画像とテキストを作成します。

- プロンプトの例:(家具付きの部屋の画像を提示して)「この部屋に合いそうなソファの色には他にどんなものがありますか?画像を更新してください」。

画像をバッチで生成する

大量の画像を生成する必要がある場合は、Batch API を使用できます。最大 24 時間のターンアラウンドと引き換えに、レートの上限が引き上げられます。

Batch API の画像例とコードについては、Batch API の画像生成に関するドキュメントとクックブックをご覧ください。

プロンプトのガイドと戦略

画像生成をマスターするには、次の基本原則から始めます。

キーワードを列挙するだけでなく、シーンを説明します。このモデルの強みは、言語を深く理解していることです。物語や説明の段落は、関連性のない単語のリストよりも、一貫性のある優れた画像を生成する可能性がほぼ常に高くなります。

画像を生成するためのプロンプト

次の戦略は、効果的なプロンプトを作成して、思いどおりの画像を生成するのに役立ちます。

1. フォトリアリスティックなシーン

リアルな画像を作成するには、写真用語を使用します。カメラアングル、レンズの種類、照明、細部について言及し、モデルを写真のようにリアルな結果に導きます。

テンプレート

A photorealistic [shot type] of [subject], [action or expression], set in

[environment]. The scene is illuminated by [lighting description], creating

a [mood] atmosphere. Captured with a [camera/lens details], emphasizing

[key textures and details]. The image should be in a [aspect ratio] format.

プロンプト

A photorealistic close-up portrait of an elderly Japanese ceramicist with

deep, sun-etched wrinkles and a warm, knowing smile. He is carefully

inspecting a freshly glazed tea bowl. The setting is his rustic,

sun-drenched workshop. The scene is illuminated by soft, golden hour light

streaming through a window, highlighting the fine texture of the clay.

Captured with an 85mm portrait lens, resulting in a soft, blurred background

(bokeh). The overall mood is serene and masterful. Vertical portrait

orientation.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("photorealistic_example.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class PhotorealisticScene {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A photorealistic close-up portrait of an elderly Japanese ceramicist

with deep, sun-etched wrinkles and a warm, knowing smile. He is

carefully inspecting a freshly glazed tea bowl. The setting is his

rustic, sun-drenched workshop with pottery wheels and shelves of

clay pots in the background. The scene is illuminated by soft,

golden hour light streaming through a window, highlighting the

fine texture of the clay and the fabric of his apron. Captured

with an 85mm portrait lens, resulting in a soft, blurred

background (bokeh). The overall mood is serene and masterful.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photorealistic_example.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photorealistic_example.png", buffer);

console.log("Image saved as photorealistic_example.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "photorealistic_example.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."}

]

}]

}'

2. スタイルを適用したイラストとステッカー

ステッカー、アイコン、アセットを作成する場合は、スタイルを明確に指定し、背景を透明にするようリクエストします。

テンプレート

A [style] sticker of a [subject], featuring [key characteristics] and a

[color palette]. The design should have [line style] and [shading style].

The background must be transparent.

プロンプト

A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's

munching on a green bamboo leaf. The design features bold, clean outlines,

simple cel-shading, and a vibrant color palette. The background must be white.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("red_panda_sticker.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class StylizedIllustration {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A kawaii-style sticker of a happy red panda wearing a tiny bamboo

hat. It's munching on a green bamboo leaf. The design features

bold, clean outlines, simple cel-shading, and a vibrant color

palette. The background must be white.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("red_panda_sticker.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("red_panda_sticker.png", buffer);

console.log("Image saved as red_panda_sticker.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "red_panda_sticker.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It is munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."}

]

}]

}'

3. 画像内の正確なテキスト

Gemini はテキストのレンダリングに優れています。テキスト、フォント スタイル(説明)、全体的なデザインを明確にします。プロフェッショナルなアセット制作には、Gemini 3 Pro Image Preview を使用します。

テンプレート

Create a [image type] for [brand/concept] with the text "[text to render]"

in a [font style]. The design should be [style description], with a

[color scheme].

プロンプト

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents="Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.",

config=types.GenerateContentConfig(

image_config=types.ImageConfig(

aspect_ratio="1:1",

)

)

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("logo_example.jpg")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import com.google.genai.types.ImageConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class AccurateTextInImages {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("1:1")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

"""

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("logo_example.jpg"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.";

const response = await ai.models.generateContent({

model: "gemini-3-pro-image-preview",

contents: prompt,

config: {

imageConfig: {

aspectRatio: "1:1",

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("logo_example.jpg", buffer);

console.log("Image saved as logo_example.jpg");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-3-pro-image-preview",

genai.Text("Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."),

&genai.GenerateContentConfig{

ImageConfig: &genai.ImageConfig{

AspectRatio: "1:1",

},

},

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "logo_example.jpg"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a modern, minimalist logo for a coffee shop called The Daily Grind. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."}

]

}],

"generationConfig": {

"imageConfig": {

"aspectRatio": "1:1"

}

}

}'

「The Daily Grind」というコーヒー ショップのモダンでミニマルなロゴを作成して...

「The Daily Grind」というコーヒー ショップのモダンでミニマルなロゴを作成して...4. 商品のモックアップと広告写真

e コマース、広告、ブランディング用のクリーンでプロフェッショナルな商品画像の作成に最適です。

テンプレート

A high-resolution, studio-lit product photograph of a [product description]

on a [background surface/description]. The lighting is a [lighting setup,

e.g., three-point softbox setup] to [lighting purpose]. The camera angle is

a [angle type] to showcase [specific feature]. Ultra-realistic, with sharp

focus on [key detail]. [Aspect ratio].

プロンプト

A high-resolution, studio-lit product photograph of a minimalist ceramic

coffee mug in matte black, presented on a polished concrete surface. The

lighting is a three-point softbox setup designed to create soft, diffused

highlights and eliminate harsh shadows. The camera angle is a slightly

elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with

sharp focus on the steam rising from the coffee. Square image.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("product_mockup.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class ProductMockup {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A high-resolution, studio-lit product photograph of a minimalist

ceramic coffee mug in matte black, presented on a polished

concrete surface. The lighting is a three-point softbox setup

designed to create soft, diffused highlights and eliminate harsh

shadows. The camera angle is a slightly elevated 45-degree shot

to showcase its clean lines. Ultra-realistic, with sharp focus

on the steam rising from the coffee. Square image.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("product_mockup.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("product_mockup.png", buffer);

console.log("Image saved as product_mockup.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "product_mockup.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."}

]

}]

}'

5. ミニマルでネガティブ スペースを活かしたデザイン

テキストを重ねて表示するウェブサイト、プレゼンテーション、マーケティング資料の背景の作成に最適です。

テンプレート

A minimalist composition featuring a single [subject] positioned in the

[bottom-right/top-left/etc.] of the frame. The background is a vast, empty

[color] canvas, creating significant negative space. Soft, subtle lighting.

[Aspect ratio].

プロンプト

A minimalist composition featuring a single, delicate red maple leaf

positioned in the bottom-right of the frame. The background is a vast, empty

off-white canvas, creating significant negative space for text. Soft,

diffused lighting from the top left. Square image.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("minimalist_design.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class MinimalistDesign {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A minimalist composition featuring a single, delicate red maple

leaf positioned in the bottom-right of the frame. The background

is a vast, empty off-white canvas, creating significant negative

space for text. Soft, diffused lighting from the top left.

Square image.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("minimalist_design.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("minimalist_design.png", buffer);

console.log("Image saved as minimalist_design.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image."),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "minimalist_design.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image."}

]

}]

}'

6. 連続したアート(コミック パネル / ストーリーボード)

キャラクターの整合性とシーンの説明に基づいて、ビジュアル ストーリーテリング用のパネルを作成します。テキストの正確性とストーリーテリングの能力については、これらのプロンプトは Gemini 3 Pro Image Preview で最適に機能します。

テンプレート

Make a 3 panel comic in a [style]. Put the character in a [type of scene].

プロンプト

Make a 3 panel comic in a gritty, noir art style with high-contrast black and white inks. Put the character in a humurous scene.

Python

from google import genai

from google.genai import types

from PIL import Image

client = genai.Client()

image_input = Image.open('/path/to/your/man_in_white_glasses.jpg')

text_input = "Make a 3 panel comic in a gritty, noir art style with high-contrast black and white inks. Put the character in a humurous scene."

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents=[text_input, image_input],

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("comic_panel.jpg")

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class ComicPanel {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

Content.fromParts(

Part.fromText("""

Make a 3 panel comic in a gritty, noir art style with high-contrast black and white inks. Put the character in a humurous scene.

"""),

Part.fromBytes(

Files.readAllBytes(

Path.of("/path/to/your/man_in_white_glasses.jpg")),

"image/jpeg")),

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("comic_panel.jpg"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const imagePath = "/path/to/your/man_in_white_glasses.jpg";

const imageData = fs.readFileSync(imagePath);

const base64Image = imageData.toString("base64");

const prompt = [

{text: "Make a 3 panel comic in a gritty, noir art style with high-contrast black and white inks. Put the character in a humurous scene."},

{

inlineData: {

mimeType: "image/jpeg",

data: base64Image,

},

},

];

const response = await ai.models.generateContent({

model: "gemini-3-pro-image-preview",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("comic_panel.jpg", buffer);

console.log("Image saved as comic_panel.jpg");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

imagePath := "/path/to/your/man_in_white_glasses.jpg"

imgData, _ := os.ReadFile(imagePath)

parts := []*genai.Part{

genai.NewPartFromText("Make a 3 panel comic in a gritty, noir art style with high-contrast black and white inks. Put the character in a humurous scene."),

&genai.Part{

InlineData: &genai.Blob{

MIMEType: "image/jpeg",

Data: imgData,

},

},

}

contents := []*genai.Content{

genai.NewContentFromParts(parts, genai.RoleUser),

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-3-pro-image-preview",

contents,

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "comic_panel.jpg"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Make a 3 panel comic in a gritty, noir art style with high-contrast black and white inks. Put the character in a humurous scene."},

{"inline_data": {"mime_type": "image/jpeg", "data": "<BASE64_IMAGE_DATA>"}}

]

}]

}'

入力 |

出力 |

|

|

7. Google 検索によるグラウンディング

Google 検索を使用して、最近の情報やリアルタイムの情報に基づいて画像を生成します。これは、ニュースや天気など、時間的制約のあるトピックに役立ちます。

プロンプト