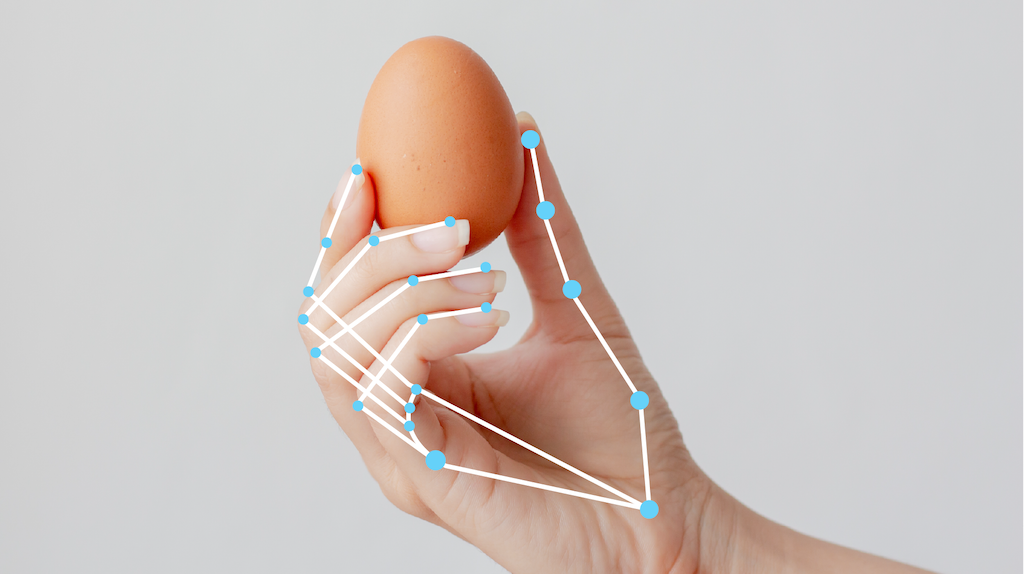

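The MediaPipe Hand Landmarker task lets you detect the landmarks of the hands in an image. You can use this task to locate key points of hands and render visual effects on them. The task runs a machine learning (ML) model on image data, supplied either as static data or as a continuous stream, and outputs hand landmarks in image coordinates, hand landmarks in world coordinates, and the handedness (left or right hand) of each of the detected hands.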

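Get started

To start using this task, follow one of the implementation guides for your target platform. These platform-specific guides walk you through a basic implementation of the task, including a recommended model and a code example with recommended configuration options.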

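Task details

This section describes the capabilities, inputs, outputs, and configuration options of this task.

Features

- Input image processing - Processing includes image rotation, resizing, normalization, and color space conversion.

- Score threshold - Filter results based on prediction scores.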

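| Task inputs | Task outputs |
|---|---|
| The Hand Landmarker accepts an input of one of the following data types: still images, decoded video frames, live video feed | The Hand Landmarker outputs the following results: handedness of detected hands, landmarks of detected hands in image coordinates, landmarks of detected hands in world coordinates |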
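The image-coordinate landmarks are what you would typically draw on top of the input image. The following is a minimal sketch of that mapping, assuming that each landmark's x and y are normalized to [0, 1] by the image width and height. The `Landmark` class and the `to_pixel` helper are hypothetical stand-ins for illustration, not MediaPipe types.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Landmark:
    """Hypothetical stand-in for one hand landmark in image coordinates."""
    x: float  # assumed normalized to [0, 1] by image width
    y: float  # assumed normalized to [0, 1] by image height


def to_pixel(landmark: Landmark, width: int, height: int) -> Tuple[int, int]:
    """Map a normalized landmark to integer pixel coordinates, clamped to the image."""
    px = min(max(int(landmark.x * width), 0), width - 1)
    py = min(max(int(landmark.y * height), 0), height - 1)
    return px, py


# Example: a landmark at the horizontal center, a quarter of the way down.
wrist = Landmark(x=0.5, y=0.25)
print(to_pixel(wrist, width=640, height=480))  # prints (320, 120)
```

Clamping keeps slightly out-of-frame predictions drawable; whether to clamp or discard such points is a design choice for the rendering code.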

Configuration options

This task has the following configuration options:

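| Option name | Description | Value range | Default value |
|---|---|---|---|
| running_mode | Sets the running mode for the task. There are three modes. IMAGE: the mode for single image inputs. VIDEO: the mode for decoded frames of a video. LIVE_STREAM: the mode for a livestream of input data, such as from a camera. In this mode, resultListener must be called to set up a listener that receives results asynchronously. | {IMAGE, VIDEO, LIVE_STREAM} | IMAGE |
| num_hands | The maximum number of hands detected by the Hand Landmarker. | Any integer > 0 | 1 |
| min_hand_detection_confidence | The minimum confidence score of the palm detection model for the hand detection to be considered successful. | 0.0 - 1.0 | 0.5 |
| min_hand_presence_confidence | The minimum confidence score for the hand presence score in the hand landmark detection model. In video mode and live stream mode, if the hand presence confidence score from the hand landmark model falls below this threshold, the Hand Landmarker triggers the palm detection model. Otherwise, a lightweight hand tracking algorithm determines the location of the hands for subsequent landmark detections. | 0.0 - 1.0 | 0.5 |
| min_tracking_confidence | The minimum confidence score for the hand tracking to be considered successful. This is the bounding box IoU threshold between hands in the current frame and the last frame. In video mode and live stream mode, if the tracking fails, the Hand Landmarker triggers hand detection. Otherwise, it skips hand detection. | 0.0 - 1.0 | 0.5 |
| result_callback | Sets the result listener to receive the detection results asynchronously when the Hand Landmarker is in live stream mode. Only applicable when the running mode is set to LIVE_STREAM. | N/A | N/A |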
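The value ranges and defaults listed for these options can be captured in a small validation helper. The sketch below is illustrative only: `HandLandmarkerConfig` and `RunningMode` are hypothetical names defined here, not the MediaPipe classes, and rejecting a callback outside LIVE_STREAM mode is a choice made for this sketch.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class RunningMode(Enum):
    IMAGE = "IMAGE"
    VIDEO = "VIDEO"
    LIVE_STREAM = "LIVE_STREAM"


@dataclass
class HandLandmarkerConfig:
    """Hypothetical container mirroring the documented options and defaults."""
    running_mode: RunningMode = RunningMode.IMAGE
    num_hands: int = 1
    min_hand_detection_confidence: float = 0.5
    min_hand_presence_confidence: float = 0.5
    min_tracking_confidence: float = 0.5
    result_callback: Optional[Callable[..., None]] = None

    def validate(self) -> None:
        """Raise ValueError if any option is outside its documented range."""
        if not isinstance(self.num_hands, int) or self.num_hands <= 0:
            raise ValueError("num_hands must be an integer > 0")
        for name in (
            "min_hand_detection_confidence",
            "min_hand_presence_confidence",
            "min_tracking_confidence",
        ):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0.0, 1.0], got {value}")
        live = self.running_mode is RunningMode.LIVE_STREAM
        if live and self.result_callback is None:
            raise ValueError("LIVE_STREAM mode requires a result_callback")
        if not live and self.result_callback is not None:
            raise ValueError("result_callback only applies in LIVE_STREAM mode")


# The defaults are valid; a live stream configuration needs a callback.
HandLandmarkerConfig().validate()
HandLandmarkerConfig(
    running_mode=RunningMode.LIVE_STREAM,
    num_hands=2,
    result_callback=lambda *args: None,
).validate()
```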

Models

The Hand Landmarker uses a model bundle with two packaged models: a palm detection model and a hand landmarks detection model. You need a model bundle that contains both of these models to run this task.

| Model name | Input shape | Quantization type | Model card | Versions |
|---|---|---|---|---|
| HandLandmarker (full) | 192 x 192, 224 x 224 | float 16 | info | Latest |

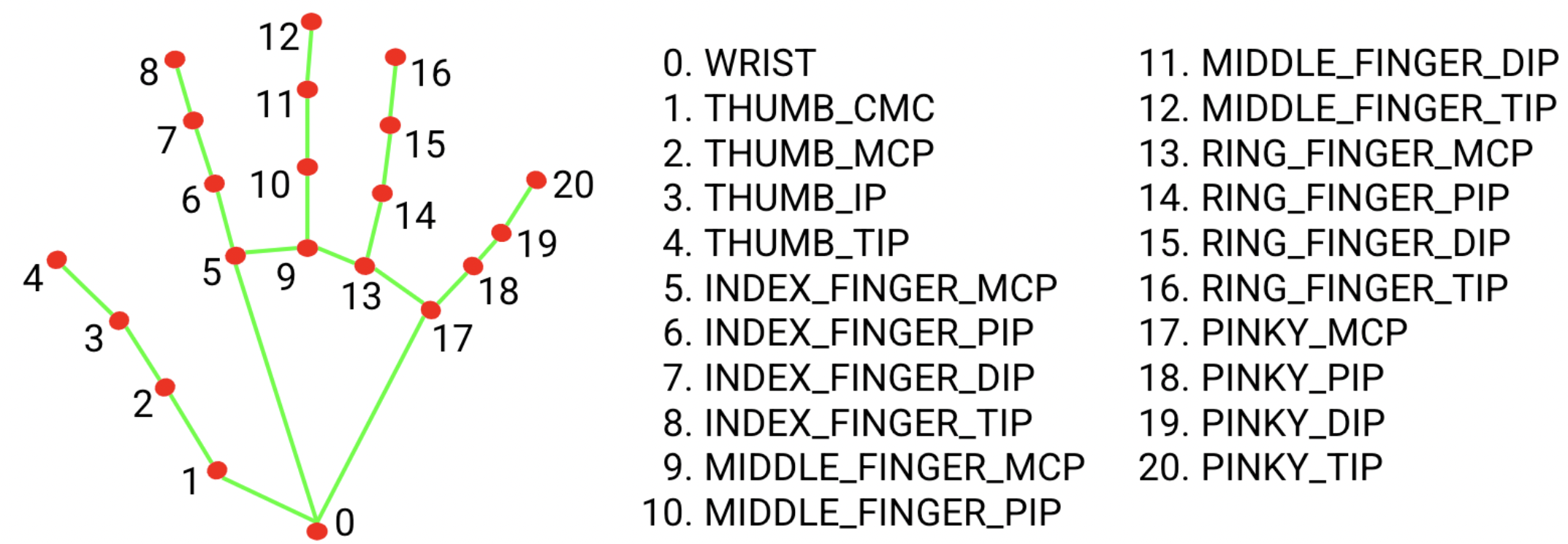

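The hand landmark model bundle detects the keypoint locations of 21 hand-knuckle coordinates within the detected hand regions. The model was trained on approximately 30K real-world images, as well as rendered synthetic hand models imposed over various backgrounds.

The hand landmark model bundle contains a palm detection model and a hand landmarks detection model. The palm detection model locates hands within the input image, and the hand landmarks detection model identifies specific hand landmarks on the cropped hand image defined by the palm detection model.

Because running the palm detection model is time consuming, in the video or live stream running modes the Hand Landmarker uses the bounding box defined by the hand landmarks model in one frame to localize the region of hands in subsequent frames. The Hand Landmarker re-triggers the palm detection model only if the hand landmarks model no longer identifies the presence of hands or fails to track the hands within the frame. This reduces the number of times the Hand Landmarker triggers the palm detection model.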
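The re-trigger rule for palm detection can be sketched as a simple predicate. This is an illustration under stated assumptions, not the MediaPipe implementation: `iou` and `needs_palm_detection` are hypothetical helpers, boxes are assumed to be `(x_min, y_min, x_max, y_max)` tuples, and the thresholds stand in for min_hand_presence_confidence and min_tracking_confidence.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def needs_palm_detection(
    prev_box: Optional[Box],
    curr_box: Optional[Box],
    presence_score: float,
    min_presence: float = 0.5,
    min_tracking: float = 0.5,
) -> bool:
    """Return True when the (expensive) palm detector should run again."""
    if prev_box is None or curr_box is None:
        return True  # nothing to track yet
    if presence_score < min_presence:
        return True  # landmark model no longer sees a hand
    return iou(prev_box, curr_box) < min_tracking  # tracking lost


# A hand that barely moved keeps being tracked without re-detection.
print(needs_palm_detection((0, 0, 2, 2), (0.1, 0, 2.1, 2), presence_score=0.9))
```

Under this rule the palm detector runs on the first frame and then only when tracking degrades, which is where the latency saving in video and live stream modes would come from.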

Task benchmarks

Here are the task benchmarks for the whole pipeline based on the above pre-trained models. The latency result is the average latency on a Google Pixel 6 using CPU / GPU.

| Model name | CPU latency | GPU latency |
|---|---|---|
| HandLandmarker (full) | 17.12 ms | 12.27 ms |
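To put these latencies in perspective, they can be converted into a rough upper bound on frames per second. The sketch below assumes frames are processed one after another and ignores capture, rendering, and other per-frame overhead; `max_fps` is a hypothetical helper.

```python
def max_fps(latency_ms: float) -> float:
    """Upper bound on throughput if each frame takes latency_ms to process."""
    return 1000.0 / latency_ms


# Average latencies reported for a Google Pixel 6.
for backend, latency_ms in {"CPU": 17.12, "GPU": 12.27}.items():
    print(f"{backend}: {latency_ms} ms/frame -> about {max_fps(latency_ms):.1f} frames/s")
```

By this estimate the CPU path allows roughly 58 frames per second and the GPU path roughly 81, before any other per-frame work is counted.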