Example prompts (all images generated by Nano Banana Pro):

- "Present a clear, 45° top-down isometric miniature 3D cartoon scene of London, featuring its most iconic landmarks and architectural elements. Use soft, refined textures with realistic PBR materials and gentle, lifelike lighting and shadows. Integrate the current weather conditions directly into the city environment to create an immersive atmospheric mood. Use a clean, minimalistic composition with a soft, solid-colored background. At the top center, place the title 'London' in large bold text, a prominent weather icon beneath it, then the date (small text) and the temperature (medium text). All text must be centered with consistent spacing and may subtly overlap the tops of the buildings."

- "Put this logo on a high-end ad for a banana-scented perfume. The logo is perfectly integrated into the bottle."

- "A photo of a glossy magazine cover with the large bold words 'Nano Banana Pro'. The text is in a serif font and fills the view. No other text. In front of the text there is a portrait of a person in a beautiful outfit. Put the issue number and the current date in the corner along with a barcode and a price. The magazine is on a shelf against a brick wall in a designer store. A mannequin is wearing the same outfit."

- "Search for information on how the launch of Gemini 3 Flash was received. Use that information to write a short article (with headings). Return a photo of the article as it would appear in a design-focused glossy magazine. It is a photo of a single folded-over page showing the article about Gemini 3 Flash. One hero photo. Headline in serif."

- "A photo of an everyday scene in a busy café serving breakfast. In the foreground is an anime man with blue hair. One of the people is a pencil sketch, another is a claymation figure."

- "An icon representing a cute dog. The background is white. Make the icons in a colorful and tactile 3D style. No text."

- "Make a photo that is perfectly isometric. It is not a miniature, it is a captured photo that just happens to be perfectly isometric. It is a photo of a beautiful modern office interior."

Nano Banana is the name for Gemini's native image generation capabilities. Gemini can generate and process images from text, images, or a combination of both, so you can create, edit, and iterate on visuals with unprecedented control.

Nano Banana refers to two distinct models available in the Gemini API:

- Nano Banana: The Gemini 2.5 Flash Image model (gemini-2.5-flash-image). This model is designed for speed and efficiency and is optimized for high-volume, low-latency tasks.

- Nano Banana Pro: The Gemini 3 Pro Image preview model (gemini-3-pro-image-preview). This model is designed for professional asset production. It uses advanced reasoning ("Thinking") to follow complex instructions and render high-fidelity text.

All generated images include a SynthID watermark.

Image generation (text-to-image)

Python

from google import genai
from google.genai import types
from PIL import Image

client = genai.Client()

prompt = "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt],
)

# The response can contain both text parts and image parts.
for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("gemini-native-image.png", buffer);

console.log("Image saved as gemini-native-image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, err := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("Create a picture of a nano banana dish in a " +

"fancy restaurant with a Gemini theme"),

nil, // no GenerateContentConfig overrides

)

if err != nil {

log.Fatal(err)

}

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "gemini_generated_image.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class TextToImage {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("_01_generated_image.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"}

]

}]

}'
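The REST call returns JSON rather than an image file, so the image bytes have to be decoded before they can be saved. The following is a minimal sketch of that step in Python. It assumes the response mirrors the structure the SDK examples iterate over (`candidates[0].content.parts`, with image parts carrying base64 data under `inlineData`); the helper name `save_inline_images` and the output file naming are illustrative, not part of the API.

```python
import base64
from pathlib import Path


def save_inline_images(response: dict, prefix: str = "generated_image") -> list[str]:
    """Write every inline image found in a generateContent JSON response to disk."""
    saved = []
    for candidate in response.get("candidates", []):
        parts = candidate.get("content", {}).get("parts", [])
        for part in parts:
            # Assumption: image parts look like
            # {"inlineData": {"mimeType": "image/png", "data": "<base64>"}}.
            blob = part.get("inlineData")
            if blob is None:
                if "text" in part:
                    print(part["text"])
                continue
            extension = blob.get("mimeType", "image/png").split("/")[-1]
            filename = f"{prefix}_{len(saved)}.{extension}"
            Path(filename).write_bytes(base64.b64decode(blob["data"]))
            saved.append(filename)
    return saved
```

To use it, pass the parsed JSON body of the response, for example `save_inline_images(json.loads(raw_body))`.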

Image editing (text-and-image-to-image)

Reminder: Make sure you have the necessary rights to any images you upload. Do not generate content that infringes on others' rights, including videos or images that deceive, harass, or harm. Your use of this generative AI service is subject to our Prohibited Use Policy.

You can provide an image and use text prompts to add, remove, or modify elements, change the style, or adjust the color grading.

The following example demonstrates uploading base64-encoded images.

For multiple images, larger payloads, and supported MIME types, see the Image understanding page.
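When calling the REST endpoint directly, the image has to be base64-encoded and embedded in the JSON body yourself. The sketch below shows one way to build such a body with the Python standard library. The field names (`contents`, `parts`, `inline_data`, `mime_type`, `data`) follow the REST example in this section; the function name `build_edit_request` is a hypothetical helper, not an SDK call.

```python
import base64
import json


def build_edit_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Return a JSON request body containing a text prompt and one inline image."""
    # base64 output is ASCII, so it can be embedded directly in a JSON string.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    body = {
        "contents": [{
            "parts": [
                {"text": prompt},
                {"inline_data": {"mime_type": mime_type, "data": encoded}},
            ]
        }]
    }
    return json.dumps(body)
```

The returned string can be sent as the request body, for example by writing it to a file and passing it to curl with `-d @body.json`.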

Python

from google import genai
from google.genai import types
from PIL import Image

client = genai.Client()

# Adjacent string literals are concatenated; no trailing comma, so this stays a str.
prompt = (
    "Create a picture of my cat eating a nano-banana in a "
    "fancy restaurant under the Gemini constellation"
)

image = Image.open("/path/to/cat_image.png")

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt, image],
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        generated = part.as_image()
        generated.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const imagePath = "path/to/cat_image.png";

const imageData = fs.readFileSync(imagePath);

const base64Image = imageData.toString("base64");

const prompt = [

{ text: "Create a picture of my cat eating a nano-banana in a " +

"fancy restaurant under the Gemini constellation" },

{

inlineData: {

mimeType: "image/png",

data: base64Image,

},

},

];

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("gemini-native-image.png", buffer);

console.log("Image saved as gemini-native-image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

imagePath := "/path/to/cat_image.png"

imgData, _ := os.ReadFile(imagePath)

parts := []*genai.Part{

genai.NewPartFromText("Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation"),

&genai.Part{

InlineData: &genai.Blob{

MIMEType: "image/png",

Data: imgData,

},

},

}

contents := []*genai.Content{

genai.NewContentFromParts(parts, genai.RoleUser),

}

result, err := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

contents,

nil, // no GenerateContentConfig overrides

)

if err != nil {

log.Fatal(err)

}

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "gemini_generated_image.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class TextAndImageToImage {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

Content.fromParts(

Part.fromText("""

Create a picture of my cat eating a nano-banana in

a fancy restaurant under the Gemini constellation

"""),

Part.fromBytes(

Files.readAllBytes(

Path.of("src/main/resources/cat.jpg")),

"image/jpeg")),

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("gemini_generated_image.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d "{

\"contents\": [{

\"parts\":[

{\"text\": \"Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation\"},

{

\"inline_data\": {

\"mime_type\":\"image/jpeg\",

\"data\": \"<BASE64_IMAGE_DATA>\"

}

}

]

}]

}"

Multi-Turn-Bildbearbeitung

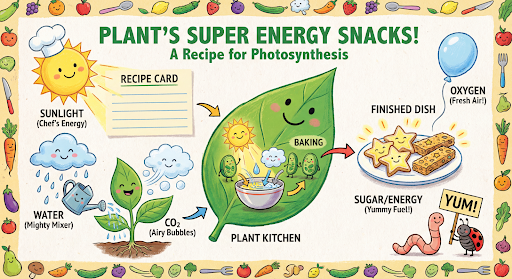

Bilder weiterhin per Prompt generieren und bearbeiten Wir empfehlen, Bilder in einem Chat oder einer Multi-Turn Conversation zu optimieren. Im folgenden Beispiel wird ein Prompt zum Generieren einer Infografik zur Fotosynthese gezeigt.

Python

from google import genai
from google.genai import types

client = genai.Client()

# A chat session keeps the conversation history, so later turns can refine the image.
chat = client.chats.create(
    model="gemini-3-pro-image-preview",
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        tools=[{"google_search": {}}]
    )
)

message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

response = chat.send_message(message)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("photosynthesis.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

const ai = new GoogleGenAI({});

async function main() {
  // A chat session keeps the conversation history for follow-up edits.
  const chat = ai.chats.create({
    model: "gemini-3-pro-image-preview",
    config: {
      responseModalities: ['TEXT', 'IMAGE'],
      tools: [{googleSearch: {}}],
    },
  });

  const message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader.";

  let response = await chat.sendMessage({message});

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("photosynthesis.png", buffer);
      console.log("Image saved as photosynthesis.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// Request both text and image output for every turn of the chat.
	config := &genai.GenerateContentConfig{
		ResponseModalities: []string{"TEXT", "IMAGE"},
	}

	// The chat keeps the conversation history; nil means no prior history.
	chat, err := client.Chats.Create(ctx, "gemini-3-pro-image-preview", config, nil)
	if err != nil {
		log.Fatal(err)
	}

	message := "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

	resp, err := chat.SendMessage(ctx, genai.Part{Text: message})
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range resp.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			if err := os.WriteFile("photosynthesis.png", part.InlineData.Data, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Chat;

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.RetrievalConfig;

import com.google.genai.types.Tool;

import com.google.genai.types.ToolConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class MultiturnImageEditing {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.tools(Tool.builder()

.googleSearch(GoogleSearch.builder().build())

.build())

.build();

Chat chat = client.chats.create("gemini-3-pro-image-preview", config);

GenerateContentResponse response = chat.sendMessage("""

Create a vibrant infographic that explains photosynthesis

as if it were a recipe for a plant's favorite food.

Show the "ingredients" (sunlight, water, CO2)

and the "finished dish" (sugar/energy).

The style should be like a page from a colorful

kids' cookbook, suitable for a 4th grader.

""");

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photosynthesis.png"), blob.data().get());

}

}

}

// ...

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"role": "user",

"parts": [

{"text": "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plants favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids cookbook, suitable for a 4th grader."}

]

}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"]

}

}'

You can then use the same chat to change the language of the graphic to Spanish.

Python

message = "Update this infographic to be in Spanish. Do not change any other elements of the image."
aspect_ratio = "16:9"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution = "2K"  # "1K", "2K", "4K"

# Reuses the `chat` and `types` names from the previous snippet.
response = chat.send_message(
    message,
    config=types.GenerateContentConfig(
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    ),
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("photosynthesis_spanish.png")

JavaScript

const message = 'Update this infographic to be in Spanish. Do not change any other elements of the image.';

const aspectRatio = '16:9';

const resolution = '2K';

let response = await chat.sendMessage({

message,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

tools: [{googleSearch: {}}],

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photosynthesis2.png", buffer);

console.log("Image saved as photosynthesis2.png");

}

}

Go

message = "Update this infographic to be in Spanish. Do not change any other elements of the image."

aspect_ratio = "16:9" // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "2K" // "1K", "2K", "4K"

model.GenerationConfig.ImageConfig = &pb.ImageConfig{

AspectRatio: aspect_ratio,

ImageSize: resolution,

}

resp, err = chat.SendMessage(ctx, genai.Text(message))

if err != nil {

log.Fatal(err)

}

for _, part := range resp.Candidates[0].Content.Parts {

if txt, ok := part.(genai.Text); ok {

fmt.Printf("%s", string(txt))

} else if img, ok := part.(genai.ImageData); ok {

err := os.WriteFile("photosynthesis_spanish.png", img.Data, 0644)

if err != nil {

log.Fatal(err)

}

}

}

Java

String aspectRatio = "16:9"; // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

String resolution = "2K"; // "1K", "2K", "4K"

config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio(aspectRatio)

.imageSize(resolution)

.build())

.build();

response = chat.sendMessage(

"Update this infographic to be in Spanish. " +

"Do not change any other elements of the image.",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photosynthesis_spanish.png"), blob.data().get());

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"contents": [

{

"role": "user",

"parts": [{"text": "Create a vibrant infographic that explains photosynthesis..."}]

},

{

"role": "model",

"parts": [{"inline_data": {"mime_type": "image/png", "data": "<PREVIOUS_IMAGE_DATA>"}}]

},

{

"role": "user",

"parts": [{"text": "Update this infographic to be in Spanish. Do not change any other elements of the image."}]

}

],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {

"aspectRatio": "16:9",

"imageSize": "2K"

}

}

}'
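Because the REST endpoint is stateless, every follow-up request has to replay the earlier turns, including the previously generated image as a `model` turn. The sketch below builds such a `contents` list in Python. The roles and field names follow the REST request in this section; the helper names (`user_turn`, `model_image_turn`, `append_followup`) are hypothetical and only illustrate the bookkeeping.

```python
def user_turn(text: str) -> dict:
    """A user message containing a single text part."""
    return {"role": "user", "parts": [{"text": text}]}


def model_image_turn(image_base64: str, mime_type: str = "image/png") -> dict:
    """A model message that carries a previously generated image."""
    return {
        "role": "model",
        "parts": [{"inline_data": {"mime_type": mime_type, "data": image_base64}}],
    }


def append_followup(history: list[dict], prompt: str) -> list[dict]:
    """Return a new contents list with the follow-up prompt appended."""
    # The original history is left untouched so it can be reused or logged.
    return [*history, user_turn(prompt)]


history = [
    user_turn("Create a vibrant infographic that explains photosynthesis..."),
    model_image_turn("<PREVIOUS_IMAGE_DATA>"),
]
contents = append_followup(
    history,
    "Update this infographic to be in Spanish. Do not change any other elements of the image.",
)
```

The resulting `contents` list takes the place of the `"contents"` array in the request body.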

New with Gemini 3 Pro Image

Gemini 3 Pro Image (gemini-3-pro-image-preview) is a state-of-the-art image generation and editing model optimized for professional asset production.

It is designed to handle the most demanding workflows through advanced reasoning and excels at complex, multi-step tasks.

- High-resolution output: Built-in generation capabilities for 1K, 2K, and 4K images.

- Advanced text rendering: The model can generate legible, stylized text for infographics, menus, diagrams, and marketing assets.

- Grounding with Google Search: The model can use Google Search as a tool to verify facts and generate images based on real-time data (for example, current weather maps, stock charts, or recent events).

- Thinking mode: The model uses a "thinking" process to reason through complex prompts. It generates interim "thought images" (visible in the backend but not charged) to refine the composition before producing the final high-quality output.

- Up to 14 reference images: You can now mix up to 14 reference images to produce the final image.

Use up to 14 reference images

The Gemini 3 Pro Image preview model lets you mix up to 14 reference images. These 14 images can include the following:

- Up to 6 images of objects with high fidelity to include in the final image

- Up to 5 images of humans to maintain character consistency
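The limits above are easy to exceed when reference images are collected from several places, so it can help to check them before sending a request. The following is a minimal client-side sketch, not an API feature: the function name `check_reference_budget` and the `other_images` category are assumptions made for illustration, and the numeric limits are the ones stated in this section.

```python
MAX_TOTAL_IMAGES = 14   # overall limit stated above
MAX_OBJECT_IMAGES = 6   # high-fidelity object images
MAX_PERSON_IMAGES = 5   # images of humans for character consistency


def check_reference_budget(object_images: int, person_images: int,
                           other_images: int = 0) -> list[str]:
    """Return a list of human-readable problems; an empty list means the mix fits."""
    problems = []
    if object_images > MAX_OBJECT_IMAGES:
        problems.append(f"{object_images} object images exceeds {MAX_OBJECT_IMAGES}")
    if person_images > MAX_PERSON_IMAGES:
        problems.append(f"{person_images} person images exceeds {MAX_PERSON_IMAGES}")
    total = object_images + person_images + other_images
    if total > MAX_TOTAL_IMAGES:
        problems.append(f"{total} images in total exceeds {MAX_TOTAL_IMAGES}")
    return problems
```

For example, `check_reference_budget(0, 5)` corresponds to the group photo request in this section and reports no problems.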

Python

from google import genai
from google.genai import types
from PIL import Image

prompt = "An office group photo of these people, they are making funny faces."
aspect_ratio = "5:4"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution = "2K"  # "1K", "2K", "4K"

client = genai.Client()

# The prompt is followed by one reference image per person.
response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=[
        prompt,
        Image.open('person1.png'),
        Image.open('person2.png'),
        Image.open('person3.png'),
        Image.open('person4.png'),
        Image.open('person5.png'),
    ],
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("office.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'An office group photo of these people, they are making funny faces.';

const aspectRatio = '5:4';

const resolution = '2K';

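// Read and base64-encode each reference image (the file names are placeholders).

const [base64ImageFile1, base64ImageFile2, base64ImageFile3, base64ImageFile4, base64ImageFile5] =

["person1.jpg", "person2.jpg", "person3.jpg", "person4.jpg", "person5.jpg"].map(

(path) => fs.readFileSync(path).toString("base64"));

const contents = [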

{ text: prompt },

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile1,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile2,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile3,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile4,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile5,

},

}

];

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: contents,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// Start with the text prompt, then append one inline image per person.
	parts := []*genai.Part{
		genai.NewPartFromText("An office group photo of these people, they are making funny faces."),
	}
	for _, path := range []string{"person1.png", "person2.png", "person3.png", "person4.png", "person5.png"} {
		data, err := os.ReadFile(path)
		if err != nil {
			log.Fatal(err)
		}
		parts = append(parts, &genai.Part{
			InlineData: &genai.Blob{MIMEType: "image/png", Data: data},
		})
	}

	contents := []*genai.Content{
		genai.NewContentFromParts(parts, genai.RoleUser),
	}

	config := &genai.GenerateContentConfig{
		ResponseModalities: []string{"TEXT", "IMAGE"},
		ImageConfig: &genai.ImageConfig{
			AspectRatio: "5:4",
			ImageSize:   "2K",
		},
	}

	resp, err := client.Models.GenerateContent(ctx, "gemini-3-pro-image-preview", contents, config)
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range resp.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			if err := os.WriteFile("office.png", part.InlineData.Data, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class GroupPhoto {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("5:4")

.imageSize("2K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

Content.fromParts(

Part.fromText("An office group photo of these people, they are making funny faces."),

Part.fromBytes(Files.readAllBytes(Path.of("person1.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person2.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person3.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person4.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person5.png")), "image/png")

), config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("office.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d "{

\"contents\": [{

\"parts\":[

{\"text\": \"An office group photo of these people, they are making funny faces.\"},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_1>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_2>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_3>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_4>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_5>\"}}

]

}],

\"generationConfig\": {

\"responseModalities\": [\"TEXT\", \"IMAGE\"],

\"imageConfig\": {

\"aspectRatio\": \"5:4\",

\"imageSize\": \"2K\"

}

}

}"

Fundierung mit der Google Suche

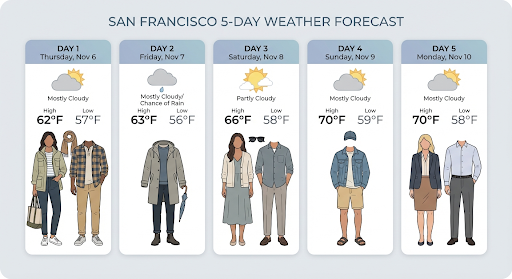

Mit dem Google Suche-Tool können Sie Bilder auf Grundlage von Echtzeitinformationen wie Wettervorhersagen, Aktiencharts oder aktuellen Ereignissen generieren.

Wenn Sie die Fundierung mit der Google Suche für die Bildgenerierung verwenden, werden bildbasierte Suchergebnisse nicht an das Generierungsmodell übergeben und sind nicht in der Antwort enthalten.

Python

from google import genai
from google.genai import types

prompt = "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"
aspect_ratio = "16:9"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

client = genai.Client()

response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=prompt,
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
        ),
        # Enables Grounding with Google Search for this request.
        tools=[{"google_search": {}}]
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("weather.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt = 'Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day';

const aspectRatio = '16:9';

const resolution = '2K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

tools: [{ googleSearch: {} }]

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.Tool;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class SearchGrounding {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.build())

.tools(Tool.builder()

.googleSearch(GoogleSearch.builder().build())

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Visualize the current weather forecast for the next 5 days

in San Francisco as a clean, modern weather chart.

Add a visual on what I should wear each day

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("weather.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "16:9"}

}

}'

The response includes groundingMetadata, which contains the following required fields:

- searchEntryPoint: Contains the HTML and CSS needed to render the required search suggestions.

- groundingChunks: Returns the top three web sources used to ground the generated image.
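As a minimal sketch of reading these fields, the helper below works on a REST response that has already been parsed into a Python dict. The nested key names (`candidates`, `renderedContent`, `web`, `uri`, `title`) are assumptions about the JSON layout and are not confirmed on this page; `summarize_grounding` is a hypothetical helper name, and the sample data is hand-written rather than real API output.

```python
from typing import Any


def summarize_grounding(response: dict[str, Any]) -> dict[str, Any]:
    """Collect the search-suggestion HTML and the cited web sources.

    Assumed layout: candidates[0].groundingMetadata with
    searchEntryPoint.renderedContent and groundingChunks[].web.{uri,title}.
    """
    candidates = response.get("candidates") or [{}]
    metadata = candidates[0].get("groundingMetadata", {})

    sources = []
    for chunk in metadata.get("groundingChunks", []):
        web = chunk.get("web")
        if web:  # skip chunks that are not web sources
            sources.append((web.get("title", ""), web.get("uri", "")))

    entry_point = metadata.get("searchEntryPoint", {})
    return {
        "suggestions_html": entry_point.get("renderedContent", ""),
        "sources": sources,
    }


# Hand-written sample data for illustration only.
sample = {
    "candidates": [
        {
            "groundingMetadata": {
                "searchEntryPoint": {"renderedContent": "<div>suggestions</div>"},
                "groundingChunks": [
                    {"web": {"uri": "https://example.com/a", "title": "Forecast A"}},
                    {"web": {"uri": "https://example.com/b", "title": "Forecast B"}},
                ],
            }
        }
    ]
}

summary = summarize_grounding(sample)
for title, uri in summary["sources"]:
    print(f"{title}: {uri}")
```

Returning plain tuples keeps the helper independent of any SDK type, so the same function can be reused for logging or for rendering a source list next to the generated image.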

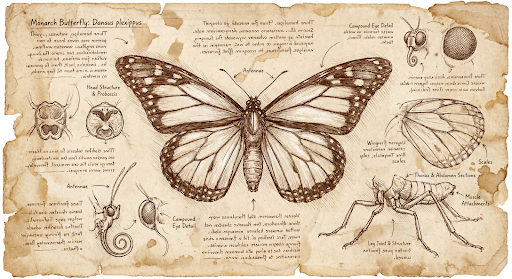

Generate images up to 4K resolution

Gemini 3 Pro Image generates 1K images by default, but it can also output 2K and 4K images. To generate higher-resolution assets, specify the image_size in the generation_config.

You must use an uppercase "K" (for example, 1K, 2K, 4K). Lowercase values (for example, 1k) are rejected.
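Because a lowercase value is rejected, it can help to normalize user input before building the request. The following is a small sketch of such a guard; `normalize_image_size` and `ALLOWED_IMAGE_SIZES` are hypothetical names introduced here for illustration, and the allowed set is taken from the three sizes named above.

```python
ALLOWED_IMAGE_SIZES = ("1K", "2K", "4K")


def normalize_image_size(value: str) -> str:
    """Return the size with an uppercase "K", or raise ValueError."""
    candidate = value.strip().upper()
    if candidate not in ALLOWED_IMAGE_SIZES:
        raise ValueError(
            f"Unsupported image size {value!r}; expected one of {ALLOWED_IMAGE_SIZES}"
        )
    return candidate


print(normalize_image_size("2k"))  # prints 2K
```

The returned string can then be passed as the image_size value in the examples that follow.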

Python

from google import genai

from google.genai import types

prompt = "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

aspect_ratio = "1:1" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "1K" # "1K", "2K", "4K"

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents=prompt,

config=types.GenerateContentConfig(

response_modalities=['TEXT', 'IMAGE'],

image_config=types.ImageConfig(

aspect_ratio=aspect_ratio,

image_size=resolution

),

)

)

for part in response.parts:

    if part.text is not None:

        print(part.text)

    elif image := part.as_image():

        image.save("butterfly.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English.';

const aspectRatio = '1:1';

const resolution = '1K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

prompt := "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

// Request text and image output at 1K resolution (uppercase "K" is required).

config := &genai.GenerateContentConfig{

ResponseModalities: []string{"TEXT", "IMAGE"},

ImageConfig: &genai.ImageConfig{

AspectRatio: "1:1",

ImageSize: "1K",

},

}

result, err := client.Models.GenerateContent(ctx, "gemini-3-pro-image-preview", genai.Text(prompt), config)

if err != nil {

log.Fatal(err)

}

// Print text parts and write the image part to disk.

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

if err := os.WriteFile("butterfly.png", part.InlineData.Data, 0644); err != nil {

log.Fatal(err)

}

}

}

}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.Tool;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class HiRes {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.imageSize("4K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Da Vinci style anatomical sketch of a dissected Monarch butterfly.

Detailed drawings of the head, wings, and legs on textured

parchment with notes in English.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("butterfly.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "1:1", "imageSize": "1K"}

}

}'

The following is an example image generated from this prompt:

Thinking process

The Gemini 3 Pro Image Preview model is a thinking model and uses a reasoning process ("Thinking") for complex prompts. This feature is enabled by default and cannot be disabled in the API. To learn more about the thinking process, see the Gemini Thinking guide.

The model generates up to two interim images to test composition and logic. The last image within Thinking is also the final rendered image.

You can inspect the thoughts that led to the final image.

Python

for part in response.parts:

    if part.thought:

        if part.text:

            print(part.text)

        elif image := part.as_image():

            image.show()

JavaScript

for (const part of response.candidates[0].content.parts) {

if (part.thought) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, 'base64');

fs.writeFileSync('image.png', buffer);

console.log('Image saved as image.png');

}

}

}

Thought Signatures

Thought signatures are encrypted representations of the model's internal thought process and are used to preserve reasoning context across multi-turn interactions. All responses include a thought_signature field. As a general rule, if you receive a thought signature in a model response, pass it back exactly as received when you send the conversation history in the next turn. Failing to circulate thought signatures may cause the response to fail. For more on signatures in general, see the thought signature documentation.

Here is how thought signatures work:

- All inline_data parts with an image mimetype that are part of the response must have a signature.

- If there is text at the start (before any image) right after the thoughts, the first text part should also have a signature.

- If inline_data parts with an image mimetype are part of the thoughts, they do not have signatures.

The following code shows an example of where thought signatures are included:

[

{

"inline_data": {

"data": "<base64_image_data_0>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_1>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_2>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"text": "Here is a step-by-step guide to baking macarons, presented in three separate images.\n\n### Step 1: Piping the Batter\n\nThe first step after making your macaron batter is to pipe it onto a baking sheet. This requires a steady hand to create uniform circles.\n\n",

"thought_signature": "<Signature_A>" // The first non-thought part always has a signature

},

{

"inline_data": {

"data": "<base64_image_data_3>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_B>" // All image parts have a signature

},

{

"text": "\n\n### Step 2: Baking and Developing Feet\n\nOnce piped, the macarons are baked in the oven. A key sign of a successful bake is the development of \"feet\"—the ruffled edge at the base of each macaron shell.\n\n"

// Follow-up text parts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_4>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_C>" // All image parts have a signature

},

{

"text": "\n\n### Step 3: Assembling the Macaron\n\nThe final step is to pair the cooled macaron shells by size and sandwich them together with your desired filling, creating the classic macaron dessert.\n\n"

},

{

"inline_data": {

"data": "<base64_image_data_5>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_D>" // All image parts have a signature

}

]
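The signature rules above can be sketched as a small check that you might run on a conversation history before sending it back. This is an illustrative helper, not part of any SDK: `parts_missing_signature` is a hypothetical name, and it assumes parts are plain dicts shaped like the example above (with `inline_data`, `text`, `thought`, and `thought_signature` keys).

```python
from typing import Any


def parts_missing_signature(parts: list[dict[str, Any]]) -> list[int]:
    """Return indexes of parts that should carry a thought_signature but don't."""
    missing = []
    seen_non_thought = False
    for index, part in enumerate(parts):
        if part.get("thought"):
            continue  # thought parts do not carry signatures
        mime_type = str(part.get("inline_data", {}).get("mime_type", ""))
        is_image = mime_type.startswith("image/")
        # Only the first non-thought part needs a signature when it is text.
        is_first_text = not seen_non_thought and "text" in part
        seen_non_thought = True
        if (is_image or is_first_text) and not part.get("thought_signature"):
            missing.append(index)
    return missing


# Hand-written history in which the last image lost its signature.
history = [
    {"inline_data": {"data": "<img0>", "mime_type": "image/png"}, "thought": True},
    {"text": "Step 1", "thought_signature": "<Signature_A>"},
    {"inline_data": {"data": "<img1>", "mime_type": "image/png"},
     "thought_signature": "<Signature_B>"},
    {"text": "Step 2"},
    {"inline_data": {"data": "<img2>", "mime_type": "image/png"}},
]

print(parts_missing_signature(history))  # prints [4]
```

A non-empty result suggests the history was altered somewhere between receiving the response and resending it, which is worth catching locally before the next request.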

Other image generation modes

Gemini also supports other image interaction modes, depending on prompt structure and context:

- Text to image(s) and text (interleaved): Outputs images with related text.

  - Example prompt: "Generate an illustrated recipe for a paella."

- Image(s) and text to image(s) and text (interleaved): Uses input images and text to create new related images and text.

  - Example prompt: (With an image of a furnished room) "What other sofa colors would work in my space? Can you update the image?"
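With interleaved output, a single response can contain several text and image parts in sequence. The sketch below shows one way to write each image to its own numbered file while keeping the text in order. It assumes parts are dicts shaped like the thought-signature example (`text`, `inline_data` with base64 `data`, optional `thought`) and that images are PNGs; `save_interleaved` and the `step_NN.png` naming are hypothetical choices made for illustration.

```python
import base64
from pathlib import Path
from typing import Any


def save_interleaved(parts: list[dict[str, Any]], out_dir: Path) -> list[str]:
    """Write image parts to numbered PNG files and return a readable outline."""
    out_dir.mkdir(parents=True, exist_ok=True)
    outline = []
    image_count = 0
    for part in parts:
        if part.get("thought"):
            continue  # skip interim thinking output
        if "text" in part:
            outline.append(part["text"].strip())
        elif "inline_data" in part:
            image_count += 1
            path = out_dir / f"step_{image_count:02d}.png"
            # Image bytes arrive base64-encoded in the JSON payload.
            path.write_bytes(base64.b64decode(part["inline_data"]["data"]))
            outline.append(f"[image: {path.name}]")
    return outline
```

Calling `save_interleaved(parts, Path("paella_recipe"))` on the parts of an illustrated-recipe response would produce one file per step plus an outline that shows where each image belongs in the text.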

Generate images in batch

If you need to generate a large number of images, you can use the Batch API. You get higher rate limits in exchange for a turnaround time of up to 24 hours.

See the Batch API image generation documentation and the cookbook for Batch API image generation examples and code.
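As a rough sketch of preparing a batch input, the snippet below builds one JSON line per prompt. The request body mirrors the REST examples on this page; the `key`/`request` wrapper and the JSONL layout are assumptions about the Batch API input format and should be checked against the Batch API documentation. `build_batch_lines` is a hypothetical helper name.

```python
import json


def build_batch_lines(prompts: dict[str, str]) -> list[str]:
    """Return one JSON line per prompt, keyed so results can be matched later."""
    lines = []
    for key, prompt in prompts.items():
        request = {
            "contents": [{"parts": [{"text": prompt}]}],
            "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]},
        }
        # Assumed wrapper: a caller-chosen key next to the request body.
        lines.append(json.dumps({"key": key, "request": request}))
    return lines


batch_lines = build_batch_lines(
    {
        "paella": "Generate an illustrated recipe for a paella.",
        "sticker": "A kawaii-style sticker of a happy red panda.",
    }
)
print(len(batch_lines))  # prints 2
```

Writing `"\n".join(batch_lines)` to a file yields a JSONL document; the keys make it possible to pair each result with its prompt once the batch job completes.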

Prompting guide and strategies

Image generation rests on one fundamental principle:

Describe the scene, don't just list keywords. The model's core strength is its deep language understanding. A narrative, descriptive paragraph almost always produces a better, more coherent image than a list of disconnected words.

Prompts for generating images

The following strategies will help you create effective prompts that generate exactly the images you're looking for.

1. Photorealistic scenes

For realistic images, use photography terms. Mention camera angles, lens types, lighting, and fine details to guide the model toward a photorealistic result.

Template

A photorealistic [shot type] of [subject], [action or expression], set in

[environment]. The scene is illuminated by [lighting description], creating

a [mood] atmosphere. Captured with a [camera/lens details], emphasizing

[key textures and details]. The image should be in a [aspect ratio] format.
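If you build prompts programmatically, the template above can be filled from named fields. The following is a minimal sketch; `PHOTO_TEMPLATE`, `build_photo_prompt`, and the field names are hypothetical and simply mirror the bracketed placeholders in the template.

```python
PHOTO_TEMPLATE = (
    "A photorealistic {shot_type} of {subject}, {action}, set in {environment}. "
    "The scene is illuminated by {lighting}, creating a {mood} atmosphere. "
    "Captured with a {camera}, emphasizing {details}. "
    "The image should be in a {aspect_ratio} format."
)


def build_photo_prompt(**fields: str) -> str:
    """Fill the template; raise ValueError if a placeholder is left unfilled."""
    try:
        return PHOTO_TEMPLATE.format(**fields)
    except KeyError as missing:
        raise ValueError(f"Missing template field: {missing}") from None


prompt = build_photo_prompt(
    shot_type="close-up portrait",
    subject="an elderly ceramicist",
    action="inspecting a freshly glazed tea bowl",
    environment="a rustic, sun-drenched workshop",
    lighting="soft, golden hour light",
    mood="serene",
    camera="50mm portrait lens",
    details="the fine texture of the clay",
    aspect_ratio="vertical portrait",
)
print(prompt)
```

Failing loudly on a missing field keeps half-filled templates, with literal brackets still in them, from reaching the model.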

Prompt

A photorealistic close-up portrait of an elderly Japanese ceramicist with

deep, sun-etched wrinkles and a warm, knowing smile. He is carefully

inspecting a freshly glazed tea bowl. The setting is his rustic,

sun-drenched workshop. The scene is illuminated by soft, golden hour light

streaming through a window, highlighting the fine texture of the clay.

Captured with an 85mm portrait lens, resulting in a soft, blurred background

(bokeh). The overall mood is serene and masterful. Vertical portrait

orientation.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.",

)

for part in response.parts:

    if part.text is not None:

        print(part.text)

    elif part.inline_data is not None:

        image = part.as_image()

        image.save("photorealistic_example.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class PhotorealisticScene {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A photorealistic close-up portrait of an elderly Japanese ceramicist

with deep, sun-etched wrinkles and a warm, knowing smile. He is

carefully inspecting a freshly glazed tea bowl. The setting is his

rustic, sun-drenched workshop with pottery wheels and shelves of

clay pots in the background. The scene is illuminated by soft,

golden hour light streaming through a window, highlighting the

fine texture of the clay and the fabric of his apron. Captured

with an 85mm portrait lens, resulting in a soft, blurred

background (bokeh). The overall mood is serene and masterful.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photorealistic_example.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photorealistic_example.png", buffer);

console.log("Image saved as photorealistic_example.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, err := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."),

nil, // no extra generation config

)

// Stop early instead of dereferencing a nil result.

if err != nil {

log.Fatal(err)

}

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "photorealistic_example.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."}

]

}]

}'

2. Stylized illustrations and stickers

To create stickers, icons, or assets, be explicit about the style and request a transparent background.

Template

A [style] sticker of a [subject], featuring [key characteristics] and a

[color palette]. The design should have [line style] and [shading style].

The background must be transparent.

Prompt

A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's

munching on a green bamboo leaf. The design features bold, clean outlines,

simple cel-shading, and a vibrant color palette. The background must be white.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.",

)

for part in response.parts:

    if part.text is not None:

        print(part.text)

    elif part.inline_data is not None:

        image = part.as_image()

        image.save("red_panda_sticker.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class StylizedIllustration {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A kawaii-style sticker of a happy red panda wearing a tiny bamboo

hat. It's munching on a green bamboo leaf. The design features

bold, clean outlines, simple cel-shading, and a vibrant color

palette. The background must be white.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("red_panda_sticker.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("red_panda_sticker.png", buffer);

console.log("Image saved as red_panda_sticker.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, err := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."),

nil, // no extra generation config

)

// Stop early instead of dereferencing a nil result.

if err != nil {

log.Fatal(err)

}

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "red_panda_sticker.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It is munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."}

]

}]

}'

3. Accurate text in images

Gemini excels at rendering text. Be clear about the text, the font style (described in words), and the overall design. Use Gemini 3 Pro Image Preview for professional asset production.

Template

Create a [image type] for [brand/concept] with the text "[text to render]"

in a [font style]. The design should be [style description], with a

[color scheme].

Prompt

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents="Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.",

config=types.GenerateContentConfig(

image_config=types.ImageConfig(

aspect_ratio="1:1",

)

)

)

for part in response.parts:

    if part.text is not None:

        print(part.text)

    elif part.inline_data is not None:

        image = part.as_image()

        image.save("logo_example.jpg")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import com.google.genai.types.ImageConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class AccurateTextInImages {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("1:1")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

"""

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("logo_example.jpg"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.";

const response = await ai.models.generateContent({

model: "gemini-3-pro-image-preview",

contents: prompt,

config: {

imageConfig: {

aspectRatio: "1:1",

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("logo_example.jpg", buffer);

console.log("Image saved as logo_example.jpg");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, err := client.Models.GenerateContent(

ctx,

"gemini-3-pro-image-preview",

genai.Text("Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."),

&genai.GenerateContentConfig{

ImageConfig: &genai.ImageConfig{

AspectRatio: "1:1",

},

},

)

// Stop early instead of dereferencing a nil result.

if err != nil {

log.Fatal(err)

}

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "logo_example.jpg"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a modern, minimalist logo for a coffee shop called The Daily Grind. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."}

]

}],

"generationConfig": {

"imageConfig": {

"aspectRatio": "1:1"

}

}

}'

4. Product mockups and commercial photography

Ideal for creating clean, professional product shots for e-commerce, advertising, or branding.

Template

A high-resolution, studio-lit product photograph of a [product description]

on a [background surface/description]. The lighting is a [lighting setup,

e.g., three-point softbox setup] to [lighting purpose]. The camera angle is

a [angle type] to showcase [specific feature]. Ultra-realistic, with sharp

focus on [key detail]. [Aspect ratio].

Prompt

A high-resolution, studio-lit product photograph of a minimalist ceramic

coffee mug in matte black, presented on a polished concrete surface. The

lighting is a three-point softbox setup designed to create soft, diffused

highlights and eliminate harsh shadows. The camera angle is a slightly

elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with

sharp focus on the steam rising from the coffee. Square image.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.",

)

for part in response.parts:

    if part.text is not None:

        print(part.text)

    elif part.inline_data is not None:

        image = part.as_image()

        image.save("product_mockup.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class ProductMockup {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A high-resolution, studio-lit product photograph of a minimalist

ceramic coffee mug in matte black, presented on a polished

concrete surface. The lighting is a three-point softbox setup

designed to create soft, diffused highlights and eliminate harsh

shadows. The camera angle is a slightly elevated 45-degree shot

to showcase its clean lines. Ultra-realistic, with sharp focus

on the steam rising from the coffee. Square image.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("product_mockup.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("product_mockup.png", buffer);
      console.log("Image saved as product_mockup.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// The last argument is the optional generation config; nil uses the defaults.
	result, err := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",
		genai.Text("A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."),
		nil,
	)
	if err != nil {
		log.Fatal(err)
	}

	// Print any text parts and write the first image part to disk.
	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			imageBytes := part.InlineData.Data
			outputFilename := "product_mockup.png"
			if err := os.WriteFile(outputFilename, imageBytes, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "contents": [{
      "parts": [
        {"text": "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."}
      ]
    }]
  }'
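
Unlike the SDK samples, the REST call returns raw JSON instead of writing an image file. The following is a minimal sketch of how the image could be pulled out of a saved response. It assumes the response uses the same field names as the JavaScript sample (`candidates`, `content`, `parts`, `inlineData`, `data`) and that the image data is base64-encoded. The helper names `extract_images` and `save_first_image` are hypothetical and not part of any SDK.

```python
import base64
import json
from pathlib import Path


def extract_images(response_json: str) -> list[bytes]:
    """Return the decoded bytes of every inline image found in the response.

    Assumes the JSON layout candidates -> content -> parts -> inlineData.data,
    mirroring the fields read by the JavaScript sample.
    """
    response = json.loads(response_json)
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            inline = part.get("inlineData")
            if inline and "data" in inline:
                # The image payload is transported as base64 text.
                images.append(base64.b64decode(inline["data"]))
    return images


def save_first_image(response_json: str, filename: str) -> bool:
    """Write the first image to `filename`; return False if none was found."""
    images = extract_images(response_json)
    if not images:
        return False
    Path(filename).write_bytes(images[0])
    return True
```

For example, after redirecting the curl output to a file such as `response.json` (a hypothetical name), calling `save_first_image(Path("response.json").read_text(), "product_mockup.png")` would write the image next to the script.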

5. Minimalist design and negative space

Well suited to creating backgrounds for websites, presentations, or marketing materials on which text will be overlaid.

Template

A minimalist composition featuring a single [subject] positioned in the
[bottom-right/top-left/etc.] of the frame. The background is a vast, empty
[color] canvas, creating significant negative space. Soft, subtle lighting.
[Aspect ratio].

Prompt

A minimalist composition featuring a single, delicate red maple leaf
positioned in the bottom-right of the frame. The background is a vast, empty
off-white canvas, creating significant negative space for text. Soft,
diffused lighting from the top left. Square image.
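
If several backgrounds are needed, the bracketed placeholders in the template can be filled programmatically. The sketch below is one possible way to do that with plain string formatting. The function name `build_minimalist_prompt` and its parameter names are hypothetical. The result follows the template wording, so it differs slightly from the hand-tuned example prompt.

```python
# Template text from this section, with the bracketed slots turned into
# named format fields.
TEMPLATE = (
    "A minimalist composition featuring a single {subject} positioned in the "
    "{position} of the frame. The background is a vast, empty {color} canvas, "
    "creating significant negative space. Soft, subtle lighting. {aspect_ratio}."
)


def build_minimalist_prompt(
    subject: str, position: str, color: str, aspect_ratio: str
) -> str:
    """Fill every slot of the minimalist-design template."""
    return TEMPLATE.format(
        subject=subject,
        position=position,
        color=color,
        aspect_ratio=aspect_ratio,
    )


prompt = build_minimalist_prompt(
    subject="delicate red maple leaf",
    position="bottom-right",
    color="off-white",
    aspect_ratio="Square image",
)
print(prompt)
```

The resulting string can be passed as the `contents` argument in any of the samples in this section.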

Python

from google import genai
from google.genai import types

client = genai.Client()

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents="A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.",
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("minimalist_design.png")

Java

import com.google.genai.Client;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.Part;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class MinimalistDesign {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .build();

      GenerateContentResponse response = client.models.generateContent(
          "gemini-2.5-flash-image",
          """
          A minimalist composition featuring a single, delicate red maple
          leaf positioned in the bottom-right of the frame. The background
          is a vast, empty off-white canvas, creating significant negative
          space for text. Soft, diffused lighting from the top left.
          Square image.
          """,
          config);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("minimalist_design.png"), blob.data().get());
          }
        }
      }
    }
  }
}

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("minimalist_design.png", buffer);
      console.log("Image saved as minimalist_design.png");
    }
  }
}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",