
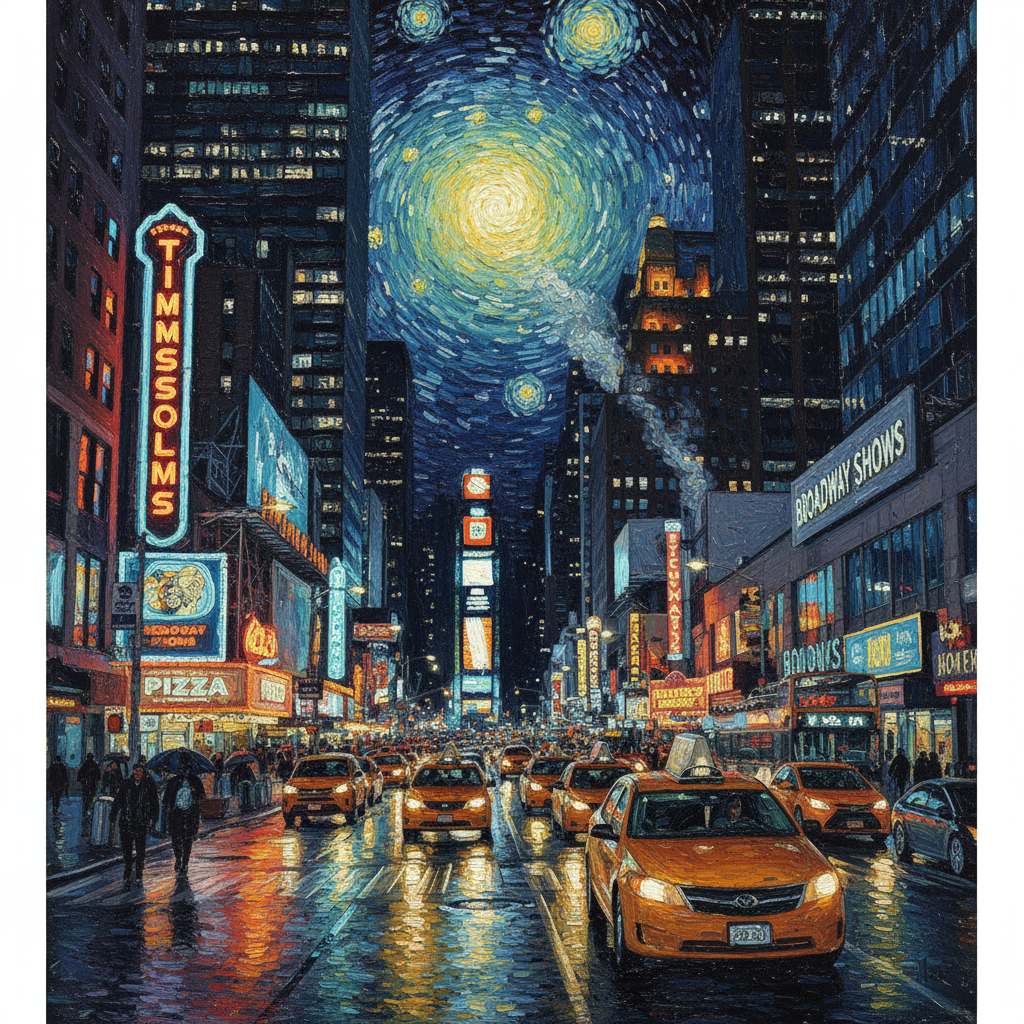

Generated by Nano Banana Pro. Prompt: "Present a clear, 45° top-down isometric miniature 3D cartoon scene of London, featuring its most iconic landmarks and architectural elements. Use soft, refined textures with realistic PBR materials and gentle, lifelike lighting and shadows. Integrate the current weather conditions directly into the city environment to create an immersive atmosphere. Use a clean, minimalistic composition with a soft, solid-colored background. At the top center, place the title "London" in large bold text, a prominent weather icon beneath it, then the date (small text) and temperature (medium text). All text must be centered with consistent spacing, and may subtly overlap the tops of the buildings." Learn more about grounding with Search and try it in AI Studio.

Generated by Nano Banana Pro. Prompt: "Put this logo on a high-end ad for a banana-scented perfume. The logo is perfectly integrated into the bottle." Try Nano Banana's high-fidelity detail preservation in AI Studio.

Generated by Nano Banana Pro. Prompt: "Restore this image with high fidelity to the quality of a modern color photograph, and upscale it to 4K." Generate images at up to 4K resolution. Try Nano Banana in AI Studio.

Generated by Nano Banana Pro. Prompt: "A photo of a glossy magazine cover with the large bold words "Nano Banana Pro". The text is in a serif font and fills the view. No other text. In front of the text there is a portrait of a person in a beautiful outfit. Put the issue number and today's date in the corner, along with a barcode and a price. The magazine is on a shelf against a brick wall, inside a design store. A mannequin is wearing the same outfit." Create professional product photos in AI Studio.

Generated by Nano Banana Pro. Prompt: "Use search to find out how the Gemini 3 Flash launch was received. Use that information to write a short article about it (with headings). Return a photo of the article as it appeared in a glossy, design-focused magazine. It is a photo of a single folded page, showing the article about Gemini 3 Flash. One hero photo. Headline in serif."

Generated by Nano Banana Pro. Prompt: "A photo of an everyday scene at a busy cafe serving breakfast. In the foreground is an anime man with blue hair, one of the people is a pencil sketch, another is a claymation person." Try different art styles with Nano Banana in AI Studio.

Generated by Nano Banana Pro. Prompt: "An icon representing a cute dog. The background is white. Make the icons in a colorful and tactile 3D style. No text." Create icons, stickers, and assets with Nano Banana in AI Studio.

Generated by Nano Banana Pro. Prompt: "Make a photo that is perfectly isometric. It is not a miniature, it is a captured photo that just happened to be perfectly isometric. It is a photo of a beautiful modern office interior." Try photorealistic image generation in AI Studio.

Nano Banana is the name for Gemini's native image generation capabilities. Gemini can generate and process images conversationally from text, images, or a combination of both, so you can create, edit, and iterate on visuals within a single conversation.

Nano Banana refers to two distinct models available in the Gemini API:

- Nano Banana: the Gemini 2.5 Flash Image model (gemini-2.5-flash-image). It is designed for speed and efficiency, and optimized for high-volume, low-latency tasks.
- Nano Banana Pro: the Gemini 3 Pro Image Preview model (gemini-3-pro-image-preview). It is designed for professional asset production, and uses advanced reasoning ("Thinking") to follow complex instructions and render high-fidelity text.

All generated images include a SynthID watermark.

Image generation (text-to-image)

Python

from google import genai
from google.genai import types
from PIL import Image

client = genai.Client()

prompt = "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt],
)

# The response can mix text parts and image parts.
for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("gemini-native-image.png", buffer);

console.log("Image saved as gemini-native-image.png");

}

}

}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// The last argument is the optional generation config; nil uses the defaults.
	result, err := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",
		genai.Text("Create a picture of a nano banana dish in a "+
			"fancy restaurant with a Gemini theme"),
		nil,
	)
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			imageBytes := part.InlineData.Data
			outputFilename := "gemini_generated_image.png"
			if err := os.WriteFile(outputFilename, imageBytes, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class TextToImage {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("_01_generated_image.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"}

]

}]

}'
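The curl call returns JSON rather than an image file, so the image still has to be decoded. The following is a minimal sketch, assuming the REST response mirrors the SDK examples above (`candidates[0].content.parts`, with image bytes base64-encoded under `inlineData.data`). The helper name `extract_parts` is hypothetical and not part of any SDK.

```python
import base64


def extract_parts(response: dict) -> tuple[list[str], list[bytes]]:
    """Split a generateContent JSON response into text strings and raw image bytes.

    Assumes the shape candidates[0].content.parts, where each part carries
    either "text" or "inlineData" (a base64 string under "data").
    """
    texts: list[str] = []
    images: list[bytes] = []
    candidates = response.get("candidates") or []
    if not candidates:
        return texts, images
    for part in candidates[0].get("content", {}).get("parts", []):
        if "text" in part:
            texts.append(part["text"])
        elif "inlineData" in part:
            # Image data arrives base64-encoded; decode it to raw bytes.
            images.append(base64.b64decode(part["inlineData"]["data"]))
    return texts, images
```

To use it, load the curl output with `json.load`, pass the resulting dict to `extract_parts`, and write each returned `bytes` object to a `.png` file opened in binary mode.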

Image editing (text-and-image-to-image)

Reminder: Make sure you have the necessary rights to any images you upload. Do not generate content that infringes on others' rights, including videos or images that deceive, harass, or harm. Your use of this generative AI service is subject to our Prohibited Use Policy.

Provide an image and use text prompts to add, remove, or modify elements, change the style, or adjust the color grading.

The following example demonstrates uploading base64-encoded images.

For multiple images, larger payloads, and supported MIME types, see the Image understanding page.
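For the REST variant, the image has to be base64-encoded before it is placed in the request body. The following is a minimal sketch of that step using only the standard library. The helper name `build_edit_request` is hypothetical, and the field names (`inline_data`, `mime_type`, `data`) are taken from the REST example in this section.

```python
import base64
import json


def build_edit_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Return a JSON request body with one text part and one inline image part."""
    body = {
        "contents": [{
            "parts": [
                {"text": prompt},
                {
                    "inline_data": {
                        "mime_type": mime_type,
                        # JSON cannot carry raw bytes, so the image is sent as base64 text.
                        "data": base64.b64encode(image_bytes).decode("ascii"),
                    }
                },
            ]
        }]
    }
    return json.dumps(body)
```

The returned string can be written to a file and passed to curl with `-d @body.json`, which avoids shell quoting problems with large payloads.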

Python

from google import genai
from google.genai import types
from PIL import Image

client = genai.Client()

# Adjacent string literals are joined into a single prompt string.
prompt = (
    "Create a picture of my cat eating a nano-banana in a "
    "fancy restaurant under the Gemini constellation"
)

image = Image.open("/path/to/cat_image.png")

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt, image],
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const imagePath = "path/to/cat_image.png";

const imageData = fs.readFileSync(imagePath);

const base64Image = imageData.toString("base64");

const prompt = [

{ text: "Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation" },

{

inlineData: {

mimeType: "image/png",

data: base64Image,

},

},

];

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("gemini-native-image.png", buffer);

console.log("Image saved as gemini-native-image.png");

}

}

}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	imagePath := "/path/to/cat_image.png"
	imgData, err := os.ReadFile(imagePath)
	if err != nil {
		log.Fatal(err)
	}

	// One text part with the instruction, one inline part with the source image.
	parts := []*genai.Part{
		genai.NewPartFromText("Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation"),
		{
			InlineData: &genai.Blob{
				MIMEType: "image/png",
				Data:     imgData,
			},
		},
	}

	contents := []*genai.Content{
		genai.NewContentFromParts(parts, genai.RoleUser),
	}

	// The last argument is the optional generation config; nil uses the defaults.
	result, err := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",
		contents,
		nil,
	)
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			imageBytes := part.InlineData.Data
			outputFilename := "gemini_generated_image.png"
			if err := os.WriteFile(outputFilename, imageBytes, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class TextAndImageToImage {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

Content.fromParts(

Part.fromText("""

Create a picture of my cat eating a nano-banana in

a fancy restaurant under the Gemini constellation

"""),

Part.fromBytes(

Files.readAllBytes(

Path.of("src/main/resources/cat.jpg")),

"image/jpeg")),

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("gemini_generated_image.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d "{

\"contents\": [{

\"parts\":[

{\"text\": \"Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation\"},

{

\"inline_data\": {

\"mime_type\":\"image/jpeg\",

\"data\": \"<BASE64_IMAGE_DATA>\"

}

}

]

}]

}"

Edição de imagens em várias etapas

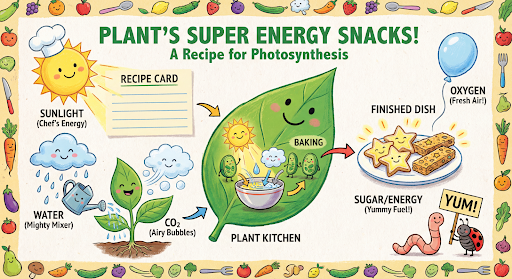

Continue gerando e editando imagens por conversa. O chat ou a conversa em vários turnos é a maneira recomendada de iterar imagens. O exemplo a seguir mostra um comando para gerar um infográfico sobre a fotossíntese.

Python

from google import genai
from google.genai import types

client = genai.Client()

chat = client.chats.create(
    model="gemini-3-pro-image-preview",
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        tools=[{"google_search": {}}]
    )
)

message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

response = chat.send_message(message)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("photosynthesis.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

const ai = new GoogleGenAI({});

async function main() {
  const chat = ai.chats.create({
    model: "gemini-3-pro-image-preview",
    config: {
      responseModalities: ['TEXT', 'IMAGE'],
      tools: [{googleSearch: {}}],
    },
  });

  const message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

  let response = await chat.sendMessage({message});

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("photosynthesis.png", buffer);
      console.log("Image saved as photosynthesis.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// Request both text and image parts for every turn of the chat.
	config := &genai.GenerateContentConfig{
		ResponseModalities: []string{"TEXT", "IMAGE"},
	}

	chat, err := client.Chats.Create(ctx, "gemini-3-pro-image-preview", config, nil)
	if err != nil {
		log.Fatal(err)
	}

	message := "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

	resp, err := chat.SendMessage(ctx, genai.Part{Text: message})
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range resp.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			err := os.WriteFile("photosynthesis.png", part.InlineData.Data, 0644)
			if err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Chat;

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.RetrievalConfig;

import com.google.genai.types.Tool;

import com.google.genai.types.ToolConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class MultiturnImageEditing {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.tools(Tool.builder()

.googleSearch(GoogleSearch.builder().build())

.build())

.build();

Chat chat = client.chats.create("gemini-3-pro-image-preview", config);

GenerateContentResponse response = chat.sendMessage("""

Create a vibrant infographic that explains photosynthesis

as if it were a recipe for a plant's favorite food.

Show the "ingredients" (sunlight, water, CO2)

and the "finished dish" (sugar/energy).

The style should be like a page from a colorful

kids' cookbook, suitable for a 4th grader.

""");

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photosynthesis.png"), blob.data().get());

}

}

}

// ...

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"role": "user",

"parts": [

{"text": "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plants favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids cookbook, suitable for a 4th grader."}

]

}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"]

}

}'

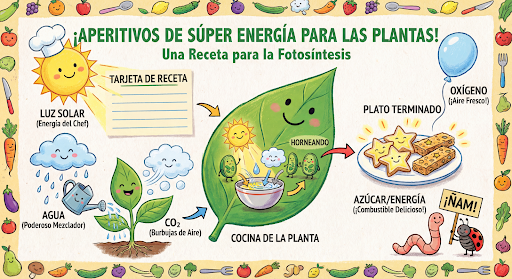

Then use the same chat to change the language of the graphic to Spanish.

Python

message = "Update this infographic to be in Spanish. Do not change any other elements of the image."
aspect_ratio = "16:9"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution = "2K"  # "1K", "2K", "4K"

# Reuses the chat object created in the previous snippet.
response = chat.send_message(
    message,
    config=types.GenerateContentConfig(
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("photosynthesis_spanish.png")

JavaScript

// Continues inside main() from the previous snippet, reusing chat and response.
const followUp = 'Update this infographic to be in Spanish. Do not change any other elements of the image.';
const aspectRatio = '16:9';
const resolution = '2K';

response = await chat.sendMessage({
  message: followUp,
  config: {
    responseModalities: ['TEXT', 'IMAGE'],
    imageConfig: {
      aspectRatio: aspectRatio,
      imageSize: resolution,
    },
    tools: [{googleSearch: {}}],
  },
});

for (const part of response.candidates[0].content.parts) {
  if (part.text) {
    console.log(part.text);
  } else if (part.inlineData) {
    const imageData = part.inlineData.data;
    const buffer = Buffer.from(imageData, "base64");
    fs.writeFileSync("photosynthesis2.png", buffer);
    console.log("Image saved as photosynthesis2.png");
  }
}

Go

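// Continues inside main() from the previous snippet.
message = "Update this infographic to be in Spanish. Do not change any other elements of the image."
aspectRatio := "16:9" // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution := "2K"    // "1K", "2K", "4K"

// Assumption: the chat config is fixed at creation, so this sketch starts a new
// chat that carries over the existing history and adds the image settings.
chat, err = client.Chats.Create(ctx, "gemini-3-pro-image-preview", &genai.GenerateContentConfig{
	ResponseModalities: []string{"TEXT", "IMAGE"},
	ImageConfig: &genai.ImageConfig{
		AspectRatio: aspectRatio,
		ImageSize:   resolution,
	},
}, chat.History(false))
if err != nil {
	log.Fatal(err)
}

resp, err = chat.SendMessage(ctx, genai.Part{Text: message})
if err != nil {
	log.Fatal(err)
}

for _, part := range resp.Candidates[0].Content.Parts {
	if part.Text != "" {
		fmt.Println(part.Text)
	} else if part.InlineData != nil {
		err := os.WriteFile("photosynthesis_spanish.png", part.InlineData.Data, 0644)
		if err != nil {
			log.Fatal(err)
		}
	}
}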

Java

String aspectRatio = "16:9"; // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

String resolution = "2K"; // "1K", "2K", "4K"

config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio(aspectRatio)

.imageSize(resolution)

.build())

.build();

response = chat.sendMessage(

"Update this infographic to be in Spanish. " +

"Do not change any other elements of the image.",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photosynthesis_spanish.png"), blob.data().get());

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d '{

"contents": [

{

"role": "user",

"parts": [{"text": "Create a vibrant infographic that explains photosynthesis..."}]

},

{

"role": "model",

"parts": [{"inline_data": {"mime_type": "image/png", "data": "<PREVIOUS_IMAGE_DATA>"}}]

},

{

"role": "user",

"parts": [{"text": "Update this infographic to be in Spanish. Do not change any other elements of the image."}]

}

],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {

"aspectRatio": "16:9",

"imageSize": "2K"

}

}

}'
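The REST example above resends the whole conversation in `contents` on each request, alternating `user` and `model` roles. The following is a minimal sketch of assembling that body, assuming the field names shown in the curl example (`role`, `parts`, `generationConfig`, `imageConfig`). The helper name `build_multiturn_body` is hypothetical.

```python
import json


def build_multiturn_body(turns, aspect_ratio=None, image_size=None) -> str:
    """Build a generateContent request body from a list of (role, parts) turns.

    Roles follow the REST example: "user" for prompts, "model" for earlier outputs.
    """
    contents = []
    for role, parts in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unexpected role: {role!r}")
        contents.append({"role": role, "parts": parts})

    generation_config = {"responseModalities": ["TEXT", "IMAGE"]}
    image_config = {}
    if aspect_ratio:
        image_config["aspectRatio"] = aspect_ratio
    if image_size:
        image_config["imageSize"] = image_size
    if image_config:
        # Only send imageConfig when at least one setting was requested.
        generation_config["imageConfig"] = image_config

    return json.dumps({"contents": contents, "generationConfig": generation_config})
```

A follow-up edit then becomes three turns: the original prompt, the previous image as a `model` turn, and the new instruction as the final `user` turn.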

New with Gemini 3 Pro Image

Gemini 3 Pro Image (gemini-3-pro-image-preview) is a state-of-the-art image generation and editing model optimized for professional asset production.

Designed to handle the most challenging workflows through advanced reasoning, it excels at complex, multi-turn creation and modification tasks.

- High-resolution output: built-in generation capabilities for 1K, 2K, and 4K visuals.
- Advanced text rendering: capable of generating legible, stylized text for infographics, menus, diagrams, and marketing assets.
- Grounding with Google Search: the model can use Google Search as a tool to verify facts and generate imagery based on real-time data (for example, current weather maps, stock charts, recent events).
- Thinking mode: the model uses a "thinking" process to reason through complex prompts. It generates interim "thought images" (visible in the backend but not charged) to refine the composition before producing the final high-quality output.
- Up to 14 reference images: you can now mix up to 14 reference images to produce the final image.

Use up to 14 reference images

The Gemini 3 Pro preview lets you combine up to 14 reference images. These 14 images can include the following:

- Up to six images of objects with high fidelity to include in the final image
- Up to five images of humans to maintain character consistency
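The limits above can be checked on the client before a request is sent. The following is a minimal sketch under the stated limits (14 images in total, at most six object images, at most five human images). The function name and the `num_other` category are assumptions made for illustration, and the check is not something the API itself provides.

```python
MAX_REFERENCE_IMAGES = 14
MAX_OBJECT_IMAGES = 6
MAX_HUMAN_IMAGES = 5


def check_reference_images(num_objects: int, num_humans: int, num_other: int = 0) -> list[str]:
    """Return a list of limit violations; an empty list means the mix is within limits."""
    problems = []
    if num_objects > MAX_OBJECT_IMAGES:
        problems.append(f"{num_objects} object images exceeds the limit of {MAX_OBJECT_IMAGES}")
    if num_humans > MAX_HUMAN_IMAGES:
        problems.append(f"{num_humans} human images exceeds the limit of {MAX_HUMAN_IMAGES}")
    total = num_objects + num_humans + num_other
    if total > MAX_REFERENCE_IMAGES:
        problems.append(f"{total} reference images exceeds the limit of {MAX_REFERENCE_IMAGES}")
    return problems
```

Returning a list of messages rather than raising on the first violation lets a caller report every problem at once.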

Python

from google import genai
from google.genai import types
from PIL import Image

prompt = "An office group photo of these people, they are making funny faces."
aspect_ratio = "5:4"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution = "2K"  # "1K", "2K", "4K"

client = genai.Client()

response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=[
        prompt,
        Image.open('person1.png'),
        Image.open('person2.png'),
        Image.open('person3.png'),
        Image.open('person4.png'),
        Image.open('person5.png'),
    ],
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("office.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'An office group photo of these people, they are making funny faces.';

const aspectRatio = '5:4';

const resolution = '2K';

const contents = [

{ text: prompt },

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile1,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile2,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile3,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile4,

},

},

{

inlineData: {

mimeType: "image/jpeg",

data: base64ImageFile5,

},

}

];

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: contents,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	config := &genai.GenerateContentConfig{
		ResponseModalities: []string{"TEXT", "IMAGE"},
		ImageConfig: &genai.ImageConfig{
			AspectRatio: "5:4",
			ImageSize:   "2K",
		},
	}

	// Start with the text instruction, then append one inline part per reference image.
	parts := []*genai.Part{
		genai.NewPartFromText("An office group photo of these people, they are making funny faces."),
	}
	paths := []string{"person1.png", "person2.png", "person3.png", "person4.png", "person5.png"}
	for _, path := range paths {
		data, err := os.ReadFile(path)
		if err != nil {
			log.Fatal(err)
		}
		parts = append(parts, &genai.Part{
			InlineData: &genai.Blob{MIMEType: "image/png", Data: data},
		})
	}

	contents := []*genai.Content{
		genai.NewContentFromParts(parts, genai.RoleUser),
	}

	resp, err := client.Models.GenerateContent(ctx, "gemini-3-pro-image-preview", contents, config)
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range resp.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			err := os.WriteFile("office.png", part.InlineData.Data, 0644)
			if err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Client;

import com.google.genai.types.Content;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

public class GroupPhoto {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("5:4")

.imageSize("2K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

Content.fromParts(

Part.fromText("An office group photo of these people, they are making funny faces."),

Part.fromBytes(Files.readAllBytes(Path.of("person1.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person2.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person3.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person4.png")), "image/png"),

Part.fromBytes(Files.readAllBytes(Path.of("person5.png")), "image/png")

), config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("office.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H 'Content-Type: application/json' \

-d "{

\"contents\": [{

\"parts\":[

{\"text\": \"An office group photo of these people, they are making funny faces.\"},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_1>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_2>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_3>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_4>\"}},

{\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_5>\"}}

]

}],

\"generationConfig\": {

\"responseModalities\": [\"TEXT\", \"IMAGE\"],

\"imageConfig\": {

\"aspectRatio\": \"5:4\",

\"imageSize\": \"2K\"

}

}

}"

Embasamento com a Pesquisa Google

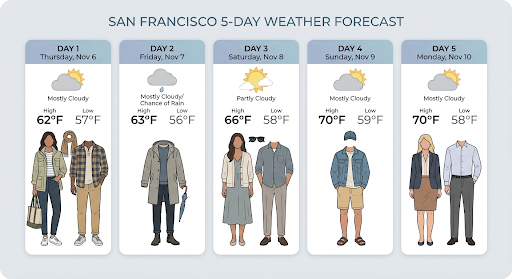

Use a ferramenta da Pesquisa Google para gerar imagens com base em informações em tempo real, como previsões do tempo, gráficos de ações ou eventos recentes.

Ao usar o Embasamento com a Pesquisa Google para gerar imagens, os resultados da pesquisa baseados em imagens não são transmitidos ao modelo de geração e são excluídos da resposta.

Python

from google import genai
from google.genai import types

prompt = "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"
aspect_ratio = "16:9"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

client = genai.Client()

response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=prompt,
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
        ),
        tools=[{"google_search": {}}]
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("weather.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt = 'Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day';

const aspectRatio = '16:9';

const resolution = '2K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

tools: [{ googleSearch: {} }]

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.Tool;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class SearchGrounding {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.build())

.tools(Tool.builder()

.googleSearch(GoogleSearch.builder().build())

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Visualize the current weather forecast for the next 5 days

in San Francisco as a clean, modern weather chart.

Add a visual on what I should wear each day

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("weather.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "16:9"}

}

}'

The response includes groundingMetadata, which contains the following required fields:

- searchEntryPoint: contains the HTML and CSS to render the required search suggestions.

- groundingChunks: returns the top three web sources used to ground the generated image.
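To make the two fields concrete, here is a minimal sketch that pulls them out of a REST-style response that has already been parsed into a dictionary. The helper name `summarize_grounding` and the sample payload are hypothetical, and the nested keys (`renderedContent`, `web`, `uri`, `title`) are assumptions about the JSON layout that should be checked against a real response.

```python
from typing import Any


def summarize_grounding(response_json: dict[str, Any]) -> dict[str, Any]:
    """Collect the search-suggestion markup and web sources from a response dict."""
    candidates = response_json.get("candidates") or [{}]
    metadata = candidates[0].get("groundingMetadata", {})

    # Markup that renders the required search suggestions (None if absent).
    rendered = metadata.get("searchEntryPoint", {}).get("renderedContent")

    # Assumption: each grounding chunk wraps a web source with a URI and a title.
    sources = [
        {"title": chunk["web"].get("title"), "uri": chunk["web"].get("uri")}
        for chunk in metadata.get("groundingChunks", [])
        if "web" in chunk
    ]
    return {"search_suggestions_html": rendered, "sources": sources}


# Hypothetical payload, shaped like the fields described above.
sample_response = {
    "candidates": [
        {
            "groundingMetadata": {
                "searchEntryPoint": {"renderedContent": "<div>suggestions</div>"},
                "groundingChunks": [
                    {"web": {"uri": "https://example.com/forecast", "title": "Forecast"}}
                ],
            }
        }
    ]
}

summary = summarize_grounding(sample_response)
for source in summary["sources"]:
    print(source["title"], source["uri"])
```

Working on the plain dictionary keeps the sketch independent of any SDK version; with an SDK client, the same information would be read from the corresponding response attributes.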

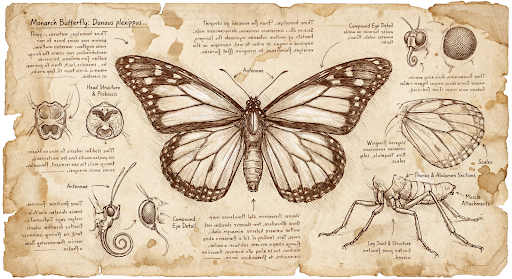

Generate images up to 4K resolution

Gemini 3 Pro Image generates 1K images by default, but it can also output 2K and 4K images. To generate higher-resolution assets, specify image_size in generation_config.

Use an uppercase "K" (for example, 1K, 2K, 4K). Lowercase values (for example, 1k) are rejected.
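Because a lowercase size is rejected by the API, it can help to catch the mistake before sending a request. The following is a minimal client-side sketch, not part of any SDK: the helper `build_image_config` is hypothetical, and the list of aspect ratios is taken from the comment in the Python sample in this section.

```python
ALLOWED_SIZES = ("1K", "2K", "4K")
ALLOWED_ASPECT_RATIOS = (
    "1:1", "2:3", "3:2", "3:4", "4:3", "4:5", "5:4", "9:16", "16:9", "21:9",
)


def build_image_config(aspect_ratio: str = "1:1", image_size: str = "1K") -> dict[str, str]:
    """Validate the values locally and return a REST-style imageConfig dict."""
    if image_size not in ALLOWED_SIZES:
        hint = ""
        if image_size.upper() in ALLOWED_SIZES:
            # Most likely cause: a lowercase "k".
            hint = f" (did you mean {image_size.upper()!r}?)"
        raise ValueError(f"Unsupported image size {image_size!r}{hint}")
    if aspect_ratio not in ALLOWED_ASPECT_RATIOS:
        raise ValueError(f"Unsupported aspect ratio {aspect_ratio!r}")
    return {"aspectRatio": aspect_ratio, "imageSize": image_size}


print(build_image_config("16:9", "4K"))

try:
    build_image_config("16:9", "4k")
except ValueError as error:
    print(error)
```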

Python

from google import genai

from google.genai import types

prompt = "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

aspect_ratio = "1:1" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "1K" # "1K", "2K", "4K"

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents=prompt,

config=types.GenerateContentConfig(

response_modalities=['TEXT', 'IMAGE'],

image_config=types.ImageConfig(

aspect_ratio=aspect_ratio,

image_size=resolution

),

)

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif image:= part.as_image():

image.save("butterfly.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English.';

const aspectRatio = '1:1';

const resolution = '1K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	prompt := "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

	// Request text and image output at 1K resolution with a square aspect ratio.
	config := &genai.GenerateContentConfig{
		ResponseModalities: []string{"TEXT", "IMAGE"},
		ImageConfig: &genai.ImageConfig{
			AspectRatio: "1:1",
			ImageSize:   "1K",
		},
	}

	result, err := client.Models.GenerateContent(ctx, "gemini-3-pro-image-preview", genai.Text(prompt), config)
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			// Image bytes arrive inline; write them to disk.
			if err := os.WriteFile("butterfly.png", part.InlineData.Data, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class HiRes {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.imageSize("4K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Da Vinci style anatomical sketch of a dissected Monarch butterfly.

Detailed drawings of the head, wings, and legs on textured

parchment with notes in English.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("butterfly.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "1:1", "imageSize": "1K"}

}

}'

The following is an example image generated from this prompt:

Thinking process

The Gemini 3 Pro Image Preview model is a thinking model and uses a reasoning process ("Thinking") for complex prompts. This feature is enabled by default and cannot be disabled in the API. To learn more about the thinking process, see the Gemini Thinking guide.

The model generates up to two interim images to test composition and logic. The last image within Thinking is also the final rendered image.

You can inspect the thoughts that led to the final image.

Python

for part in response.parts:

if part.thought:

if part.text:

print(part.text)

elif image:= part.as_image():

image.show()

JavaScript

for (const part of response.candidates[0].content.parts) {

if (part.thought) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, 'base64');

fs.writeFileSync('image.png', buffer);

console.log('Image saved as image.png');

}

}

}

Thought Signatures

Thought signatures are encrypted representations of the model's internal reasoning process and are used to preserve reasoning context across multi-turn interactions. All responses include a thought_signature field. As a general rule, if you receive a thought signature in a model response, pass it back exactly as received when you send the conversation history in the next turn. If you don't, the response may fail. See the thought signature documentation for more on signatures in general.

Here is how they work:

- All inline_data parts with an image mimetype that are part of the response should have a signature.

- If there are text parts at the beginning (before any image) right after the thoughts, the first text part should also have a signature.

- If inline_data parts with an image mimetype are part of thoughts, they won't have signatures.

The following code shows an example of where thought signatures are included:

[

{

"inline_data": {

"data": "<base64_image_data_0>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_1>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_2>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"text": "Here is a step-by-step guide to baking macarons, presented in three separate images.\n\n### Step 1: Piping the Batter\n\nThe first step after making your macaron batter is to pipe it onto a baking sheet. This requires a steady hand to create uniform circles.\n\n",

"thought_signature": "<Signature_A>" // The first non-thought part always has a signature

},

{

"inline_data": {

"data": "<base64_image_data_3>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_B>" // All image parts have signatures

},

{

"text": "\n\n### Step 2: Baking and Developing Feet\n\nOnce piped, the macarons are baked in the oven. A key sign of a successful bake is the development of \"feet\"—the ruffled edge at the base of each macaron shell.\n\n"

// Follow-up text parts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_4>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_C>" // All image parts have signatures

},

{

"text": "\n\n### Step 3: Assembling the Macaron\n\nThe final step is to pair the cooled macaron shells by size and sandwich them together with your desired filling, creating the classic macaron dessert.\n\n"

},

{

"inline_data": {

"data": "<base64_image_data_5>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_D>" // All image parts have signatures

}

]
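The rules illustrated above can be sketched as a small check over the parts of a model turn, plus a helper that appends the turn to the conversation history without touching any part. This is a minimal sketch on plain dictionaries shaped like the JSON example; the helper names `parts_missing_signature` and `append_model_turn` are hypothetical and not part of any SDK.

```python
from typing import Any

Part = dict[str, Any]


def parts_missing_signature(parts: list[Part]) -> list[int]:
    """Return indexes of non-thought parts that should be signed but are not."""
    missing = []
    seen_first_non_thought = False
    for index, part in enumerate(parts):
        if part.get("thought"):
            continue  # thought parts are not expected to carry signatures
        is_image = "inline_data" in part
        is_first = not seen_first_non_thought
        seen_first_non_thought = True
        # Signed: every image part, and the first part after the thoughts.
        if (is_image or is_first) and "thought_signature" not in part:
            missing.append(index)
    return missing


def append_model_turn(history: list[dict[str, Any]], parts: list[Part]) -> list[dict[str, Any]]:
    """Return a new history with the model turn appended, parts passed back as received."""
    return history + [{"role": "model", "parts": parts}]


model_parts = [
    {"inline_data": {"data": "<base64_image_data_0>", "mime_type": "image/png"}, "thought": True},
    {"text": "Step 1", "thought_signature": "<Signature_A>"},
    {"inline_data": {"data": "<base64_image_data_1>", "mime_type": "image/png"},
     "thought_signature": "<Signature_B>"},
    {"text": "Step 2"},  # follow-up text parts are unsigned
]

print(parts_missing_signature(model_parts))
history = append_model_turn([{"role": "user", "parts": [{"text": "Show the steps."}]}], model_parts)
```

Running the check before sending the next turn is a cheap way to notice that some intermediate layer stripped a signature from the stored history.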

Other image generation modes

Gemini supports other image interaction modes based on prompt structure and context, including:

- Text to images and text (interleaved): outputs images with related text.

- Example prompt: "Generate an illustrated recipe for a paella."

- Images and text to images and text (interleaved): uses input images and text to create new related images and text.

- Example prompt: (with an image of a furnished room) "What other color sofas would work in my space? Can you update the image?"

Generate images in batch

If you need to generate many images, use the Batch API. You get higher rate limits in exchange for a turnaround time of up to 24 hours.

See the Batch API image generation documentation and the cookbook for Batch API image examples and code.
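As a rough illustration of preparing many prompts at once, the sketch below serializes one request per line into a JSONL string. The record layout (`key`, `request`, `generation_config`) and the helper name `build_batch_jsonl` are assumptions made for this example; confirm the exact input format in the Batch API documentation before relying on it.

```python
import json


def build_batch_jsonl(prompts: list[str]) -> str:
    """Serialize one image-generation request per line (assumed JSONL layout)."""
    lines = []
    for index, prompt in enumerate(prompts, start=1):
        record = {
            # Hypothetical key used to match each result to its request.
            "key": f"image-request-{index}",
            "request": {
                "contents": [{"parts": [{"text": prompt}]}],
                "generation_config": {"responseModalities": ["TEXT", "IMAGE"]},
            },
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


jsonl = build_batch_jsonl([
    "A kawaii-style sticker of a happy red panda.",
    "A minimalist logo for a coffee shop.",
])
print(jsonl)
```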

Prompting guide and strategies

Mastering image generation starts with one fundamental principle:

Describe the scene, don't just list keywords. The model's core strength is its deep language understanding. A narrative, descriptive paragraph almost always produces a better, more coherent image than a list of disconnected words.

Prompts for generating images

The following strategies will help you create effective prompts to generate exactly the images you're looking for.

1. Photorealistic scenes

For realistic images, use photography terms. Mention camera angles, lens types, lighting, and fine details to guide the model toward a photorealistic result.

Template

A photorealistic [shot type] of [subject], [action or expression], set in

[environment]. The scene is illuminated by [lighting description], creating

a [mood] atmosphere. Captured with a [camera/lens details], emphasizing

[key textures and details]. The image should be in a [aspect ratio] format.

Prompt

A photorealistic close-up portrait of an elderly Japanese ceramicist with

deep, sun-etched wrinkles and a warm, knowing smile. He is carefully

inspecting a freshly glazed tea bowl. The setting is his rustic,

sun-drenched workshop. The scene is illuminated by soft, golden hour light

streaming through a window, highlighting the fine texture of the clay.

Captured with an 85mm portrait lens, resulting in a soft, blurred background

(bokeh). The overall mood is serene and masterful. Vertical portrait

orientation.
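One way to reuse the template above is to fill its slots programmatically, so every prompt stays a full descriptive sentence rather than a keyword list. The following is a minimal sketch; the placeholder names and the helper `build_photo_prompt` are hypothetical choices for this example.

```python
import string

PHOTO_TEMPLATE = (
    "A photorealistic {shot_type} of {subject}, {action}, set in {environment}. "
    "The scene is illuminated by {lighting}, creating a {mood} atmosphere. "
    "Captured with a {camera}, emphasizing {details}. "
    "The image should be in a {aspect_ratio} format."
)

# Derive the slot names from the template itself so the two cannot drift apart.
REQUIRED_FIELDS = {
    name for _, name, _, _ in string.Formatter().parse(PHOTO_TEMPLATE) if name
}


def build_photo_prompt(**fields: str) -> str:
    """Fill the photorealistic template, refusing to leave any slot empty."""
    missing = sorted(REQUIRED_FIELDS - fields.keys())
    if missing:
        raise ValueError(f"Missing template fields: {', '.join(missing)}")
    return PHOTO_TEMPLATE.format(**fields)


prompt = build_photo_prompt(
    shot_type="close-up portrait",
    subject="an elderly Japanese ceramicist",
    action="carefully inspecting a freshly glazed tea bowl",
    environment="his rustic, sun-drenched workshop",
    lighting="soft, golden hour light streaming through a window",
    mood="serene and masterful",
    camera="full-frame camera and 85mm portrait lens",
    details="the fine texture of the clay",
    aspect_ratio="vertical portrait",
)
print(prompt)
```

The resulting string can be passed as the contents of any of the requests shown in this section.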

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("photorealistic_example.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class PhotorealisticScene {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A photorealistic close-up portrait of an elderly Japanese ceramicist

with deep, sun-etched wrinkles and a warm, knowing smile. He is

carefully inspecting a freshly glazed tea bowl. The setting is his

rustic, sun-drenched workshop with pottery wheels and shelves of

clay pots in the background. The scene is illuminated by soft,

golden hour light streaming through a window, highlighting the

fine texture of the clay and the fabric of his apron. Captured

with an 85mm portrait lens, resulting in a soft, blurred

background (bokeh). The overall mood is serene and masterful.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photorealistic_example.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photorealistic_example.png", buffer);

console.log("Image saved as photorealistic_example.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."),

nil, // no GenerateContentConfig; rely on the model defaults

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "photorealistic_example.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."}

]

}]

}'

2. Stylized illustrations and stickers

To create stickers, icons, or assets, be explicit about the style and request a transparent background.

Template

A [style] sticker of a [subject], featuring [key characteristics] and a

[color palette]. The design should have [line style] and [shading style].

The background must be transparent.

Prompt

A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's

munching on a green bamboo leaf. The design features bold, clean outlines,

simple cel-shading, and a vibrant color palette. The background must be white.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("red_panda_sticker.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class StylizedIllustration {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A kawaii-style sticker of a happy red panda wearing a tiny bamboo

hat. It's munching on a green bamboo leaf. The design features

bold, clean outlines, simple cel-shading, and a vibrant color

palette. The background must be white.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("red_panda_sticker.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("red_panda_sticker.png", buffer);

console.log("Image saved as red_panda_sticker.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."),

nil, // no GenerateContentConfig; rely on the model defaults

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "red_panda_sticker.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It is munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."}

]

}]

}'

3. Accurate text in images

Gemini excels at rendering text. Be clear about the text, the font style (described in words), and the overall design. Use Gemini 3 Pro Image Preview for professional asset production.

Template

Create a [image type] for [brand/concept] with the text "[text to render]"

in a [font style]. The design should be [style description], with a

[color scheme].

Prompt

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents="Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.",

config=types.GenerateContentConfig(

image_config=types.ImageConfig(

aspect_ratio="1:1",

)

)

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("logo_example.jpg")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import com.google.genai.types.ImageConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class AccurateTextInImages {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("1:1")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

"""

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("logo_example.jpg"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.";

const response = await ai.models.generateContent({

model: "gemini-3-pro-image-preview",

contents: prompt,

config: {

imageConfig: {

aspectRatio: "1:1",

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("logo_example.jpg", buffer);

console.log("Image saved as logo_example.jpg");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-3-pro-image-preview",

genai.Text("Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."),

&genai.GenerateContentConfig{

ImageConfig: &genai.ImageConfig{

AspectRatio: "1:1",

},

},

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "logo_example.jpg"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a modern, minimalist logo for a coffee shop called The Daily Grind. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."}

]

}],

"generationConfig": {

"imageConfig": {

"aspectRatio": "1:1"

}

}

}'

4. Product mockups and commercial photography

This approach is well suited to clean, professional product shots for e-commerce, advertising, or branding.

Template

A high-resolution, studio-lit product photograph of a [product description]

on a [background surface/description]. The lighting is a [lighting setup,

e.g., three-point softbox setup] to [lighting purpose]. The camera angle is

a [angle type] to showcase [specific feature]. Ultra-realistic, with sharp

focus on [key detail]. [Aspect ratio].

Prompt

A high-resolution, studio-lit product photograph of a minimalist ceramic

coffee mug in matte black, presented on a polished concrete surface. The

lighting is a three-point softbox setup designed to create soft, diffused

highlights and eliminate harsh shadows. The camera angle is a slightly

elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with

sharp focus on the steam rising from the coffee. Square image.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.",

)

for part in response.parts:

if part.text is not None:

print(part.text)

elif part.inline_data is not None:

image = part.as_image()

image.save("product_mockup.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class ProductMockup {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A high-resolution, studio-lit product photograph of a minimalist

ceramic coffee mug in matte black, presented on a polished

concrete surface. The lighting is a three-point softbox setup

designed to create soft, diffused highlights and eliminate harsh

shadows. The camera angle is a slightly elevated 45-degree shot

to showcase its clean lines. Ultra-realistic, with sharp focus

on the steam rising from the coffee. Square image.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("product_mockup.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("product_mockup.png", buffer);
      console.log("Image saved as product_mockup.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// The last argument is the optional generation config; nil keeps the defaults.
	result, err := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",
		genai.Text("A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."),
		nil,
	)
	if err != nil {
		log.Fatal(err)
	}
	if len(result.Candidates) == 0 || result.Candidates[0].Content == nil {
		log.Fatal("the response contains no candidates")
	}

	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			// Write the generated image bytes to disk.
			outputFilename := "product_mockup.png"
			if err := os.WriteFile(outputFilename, part.InlineData.Data, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "contents": [{
      "parts": [
        {"text": "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."}
      ]
    }]
  }'
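The curl request above prints the JSON response but does not save the image. The following is a minimal sketch of one way to pull the image bytes out of that response, assuming the REST response mirrors the SDK structure used in the other tabs (`candidates[].content.parts[]`, with images under an `inlineData` object holding `mimeType` and base64-encoded `data`). The helper name `extract_images` and the sample payload are hypothetical and only serve as illustration.

```python
import base64
import json


def extract_images(response_json: str) -> list[tuple[str, bytes]]:
    """Return (mime_type, raw_bytes) for every inline image in a response.

    Assumes the camelCase REST field names `inlineData`, `mimeType`, `data`.
    """
    response = json.loads(response_json)
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            inline = part.get("inlineData")
            if inline is None:
                continue  # text parts carry no image data
            images.append(
                (inline.get("mimeType", ""), base64.b64decode(inline["data"]))
            )
    return images


# Placeholder payload standing in for a real API response (not real image data).
sample = json.dumps({
    "candidates": [{
        "content": {
            "parts": [
                {"text": "Here is your image."},
                {"inlineData": {
                    "mimeType": "image/png",
                    "data": base64.b64encode(b"placeholder image bytes").decode("ascii"),
                }},
            ]
        }
    }]
})

images = extract_images(sample)
```

To use it with the request above, one option is to redirect the curl output to a file, pass the file contents to `extract_images`, and write each returned byte string to disk in binary mode.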

5. Minimalist and negative space design

Ideal for creating backgrounds for websites, presentations, or marketing materials where text will be overlaid.

Template

A minimalist composition featuring a single [subject] positioned in the
[bottom-right/top-left/etc.] of the frame. The background is a vast, empty
[color] canvas, creating significant negative space. Soft, subtle lighting.
[Aspect ratio].

Prompt

A minimalist composition featuring a single, delicate red maple leaf
positioned in the bottom-right of the frame. The background is a vast, empty
off-white canvas, creating significant negative space for text. Soft,
diffused lighting from the top left. Square image.
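When many backgrounds are needed, the bracketed template can be filled in programmatically rather than by hand. The sketch below is one possible approach using plain string formatting; the names `TEMPLATE` and `build_prompt` and the placeholder keys are hypothetical, not part of the Gemini SDK.

```python
# Hypothetical helper: the bracketed slots of the template become named fields.
TEMPLATE = (
    "A minimalist composition featuring a single {subject} positioned in the "
    "{position} of the frame. The background is a vast, empty {color} canvas, "
    "creating significant negative space. Soft, subtle lighting. {aspect_ratio}."
)


def build_prompt(subject: str, position: str, color: str, aspect_ratio: str) -> str:
    """Return the negative-space template with every slot filled in."""
    return TEMPLATE.format(
        subject=subject,
        position=position,
        color=color,
        aspect_ratio=aspect_ratio,
    )


prompt = build_prompt(
    subject="delicate red maple leaf",
    position="bottom-right",
    color="off-white",
    aspect_ratio="Square image",
)
print(prompt)
```

The resulting string can be passed as the `contents` argument in any of the language samples for this section. Hand-tuned details, such as the lighting direction in the prompt above, still need to be added manually or exposed as further fields.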

Python

from google import genai
from google.genai import types

client = genai.Client()

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents="A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.",
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("minimalist_design.png")

Java

import com.google.genai.Client;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.Part;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class MinimalistDesign {
  public static void main(String[] args) throws IOException {
    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .build();

      GenerateContentResponse response = client.models.generateContent(
          "gemini-2.5-flash-image",
          """
          A minimalist composition featuring a single, delicate red maple
          leaf positioned in the bottom-right of the frame. The background
          is a vast, empty off-white canvas, creating significant negative
          space for text. Soft, diffused lighting from the top left.
          Square image.
          """,
          config);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("minimalist_design.png"), blob.data().get());
          }
        }
      }
    }
  }
}

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("minimalist_design.png", buffer);
      console.log("Image saved as minimalist_design.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// The last argument is the optional generation config; nil keeps the defaults.
	result, err := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",
		genai.Text("A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image."),
		nil,
	)
	if err != nil {
		log.Fatal(err)
	}
	if len(result.Candidates) == 0 || result.Candidates[0].Content == nil {
		log.Fatal("the response contains no candidates")
	}

	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			// Write the generated image bytes to disk.
			outputFilename := "minimalist_design.png"
			if err := os.WriteFile(outputFilename, part.InlineData.Data, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \