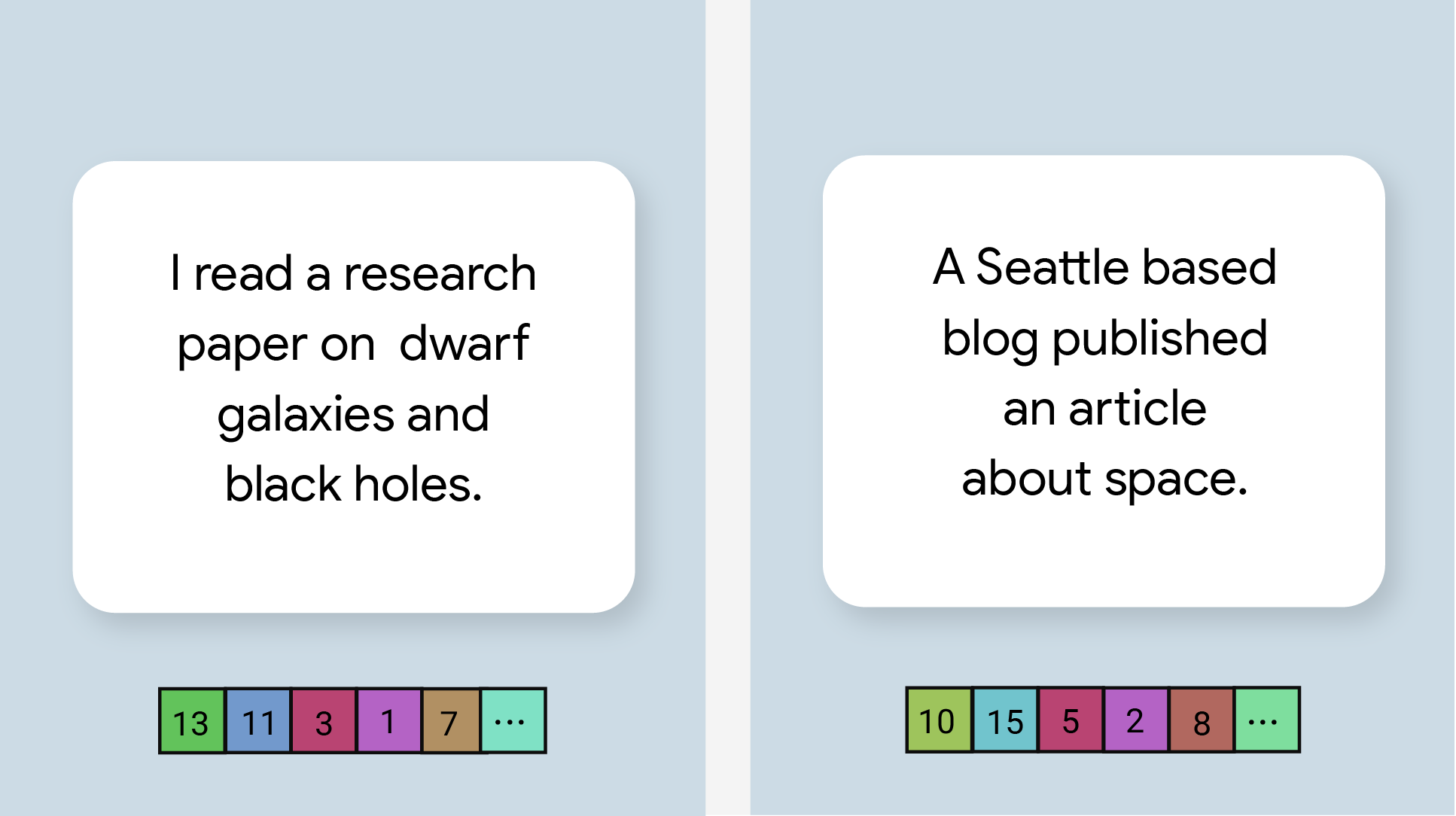

Tugas MediaPipe Text Embedder memungkinkan Anda membuat representasi numerik data teks untuk menangkap makna semantiknya. Fungsi ini sering digunakan untuk membandingkan kesamaan semantik dari dua bagian teks menggunakan teknik perbandingan matematika seperti Kesamaan Kosinus. Tugas ini beroperasi pada data teks dengan model machine learning (ML), dan menghasilkan representasi numerik data teks sebagai daftar vektor fitur berdimensi tinggi, yang juga dikenal sebagai vektor penyematan, dalam bentuk floating point atau kuantisasi.

Mulai

Mulai gunakan tugas ini dengan mengikuti salah satu panduan penerapan ini untuk platform target Anda. Panduan khusus platform ini akan memandu Anda dalam penerapan dasar tugas ini, termasuk model yang direkomendasikan, dan contoh kode dengan opsi konfigurasi yang direkomendasikan:

- Android - Contoh kode - Panduan

- Python - Contoh kode - Panduan

- Web - Contoh kode - Panduan

Detail tugas

Bagian ini menjelaskan kemampuan, input, output, dan opsi konfigurasi tugas ini.

Fitur

- Pemrosesan teks input - Mendukung tokenisasi di luar grafik untuk model tanpa tokenisasi dalam grafik.

- Komputasi kemiripan penyematan - Fungsi utilitas bawaan untuk menghitung kemiripan kosinus antara dua vektor fitur.

- Kuantisasi - Mendukung kuantisasi skalar untuk vektor fitur.

| Input tugas | Output tugas |

|---|---|

Penyematan Teks menerima jenis data input berikut:

|

Text Embedder menghasilkan daftar penyematan yang terdiri dari:

|

Opsi konfigurasi

Tugas ini memiliki opsi konfigurasi berikut:

| Nama Opsi | Deskripsi | Rentang Nilai | Nilai Default |

|---|---|---|---|

l2_normalize |

Apakah akan menormalisasi vektor fitur yang ditampilkan dengan norma L2. Gunakan opsi ini hanya jika model belum berisi Opsi TFLite L2_NORMALIZATION native. Pada umumnya, hal ini sudah terjadi dan normalisasi L2 akan dicapai melalui inferensi TFLite tanpa memerlukan opsi ini. | Boolean |

False |

quantize |

Apakah penyematan yang ditampilkan harus dikuantifikasi ke byte melalui kuantisasi skalar. Secara implisit, penyematan diasumsikan sebagai unit-norm dan oleh karena itu, dimensi apa pun dijamin memiliki nilai dalam [-1,0, 1,0]. Gunakan opsi l2_normalize jika tidak demikian. | Boolean |

False |

Model

Kami menawarkan model default yang direkomendasikan saat Anda mulai mengembangkan dengan tugas ini.

Model Universal Sentence Encoder (direkomendasikan)

Model ini menggunakan arsitektur encoder ganda dan dilatih pada berbagai set data pertanyaan-jawaban.

Pertimbangkan pasangan kalimat berikut:

- ("it's a charming and often affecting journey", "what a great and fantastic trip")

- ("Saya suka ponsel saya", "Saya benci ponsel saya")

- ("Restoran ini memiliki gimmick yang bagus", "Kita perlu memeriksa ulang detail rencana kita")

Penyematan teks dalam dua pasangan pertama akan memiliki kesamaan kosinus yang lebih tinggi daripada penyematan dalam pasangan ketiga karena dua pasangan kalimat pertama berbagi topik umum "sentimen perjalanan" dan "pendapat ponsel", sedangkan pasangan kalimat ketiga tidak memiliki topik yang sama.

Perhatikan bahwa meskipun dua kalimat dalam pasangan kedua memiliki sentimen yang berlawanan, keduanya memiliki skor kesamaan yang tinggi karena memiliki topik yang sama.

| Nama model | Bentuk input | Jenis kuantisasi | Versi |

|---|---|---|---|

| Encoder Kalimat Universal | string, string, string | Tidak ada (float32) | Terbaru |

Tolok ukur tugas

Berikut adalah tolok ukur tugas untuk seluruh pipeline berdasarkan model terlatih di atas. Hasil latensi adalah latensi rata-rata di Pixel 6 yang menggunakan CPU / GPU.

| Nama Model | Latensi CPU | Latensi GPU |

|---|---|---|

| Encoder Kalimat Universal | 18,21 md | - |