The MediaPipe Text Embedder task lets you create a numeric representation of text data to capture its semantic meaning. This functionality is frequently used to compare the semantic similarity of two pieces of text using mathematical comparison techniques such as Cosine Similarity. This task operates on text data with a machine learning (ML) model, and outputs a numeric representation of the text data as a list of high-dimensional feature vectors, also known as embedding vectors, in either floating-point or quantized form.

Get Started

Start using this task by following one of these implementation guides for your target platform. These platform-specific guides walk you through a basic implementation of this task, including a recommended model, and code example with recommended configuration options:

- Android - Code example - Guide

- Python - Code example - Guide

- Web - Code example - Guide

Task details

This section describes the capabilities, inputs, outputs, and configuration options of this task.

Features

- Input text processing - Supports out-of-graph tokenization for models without in-graph tokenization.

- Embedding similarity computation - Built-in utility function to compute the cosine similarity between two feature vectors.

- Quantization - Supports scalar quantization for the feature vectors.

| Task inputs | Task outputs |

|---|---|

Text Embedder accepts the following input data type:

|

Text Embedder outputs a list of embeddings consisting of:

|

Configurations options

This task has the following configuration options:

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

l2_normalize |

Whether to normalize the returned feature vector with L2 norm. Use this option only if the model does not already contain a native L2_NORMALIZATION TFLite Op. In most cases, this is already the case and L2 normalization is thus achieved through TFLite inference with no need for this option. | Boolean |

False |

quantize |

Whether the returned embedding should be quantized to bytes via scalar quantization. Embeddings are implicitly assumed to be unit-norm and therefore any dimension is guaranteed to have a value in [-1.0, 1.0]. Use the l2_normalize option if this is not the case. | Boolean |

False |

Models

We offer a default, recommended model when you start developing with this task.

Universal Sentence Encoder model (recommended)

This model uses a dual encoder architecture and was trained on various question-answer datasets.

Consider the following pairs of sentences:

- ("it's a charming and often affecting journey", "what a great and fantastic trip")

- ("I like my phone", "I hate my phone")

- ("This restaurant has a great gimmick", "We need to double-check the details of our plan")

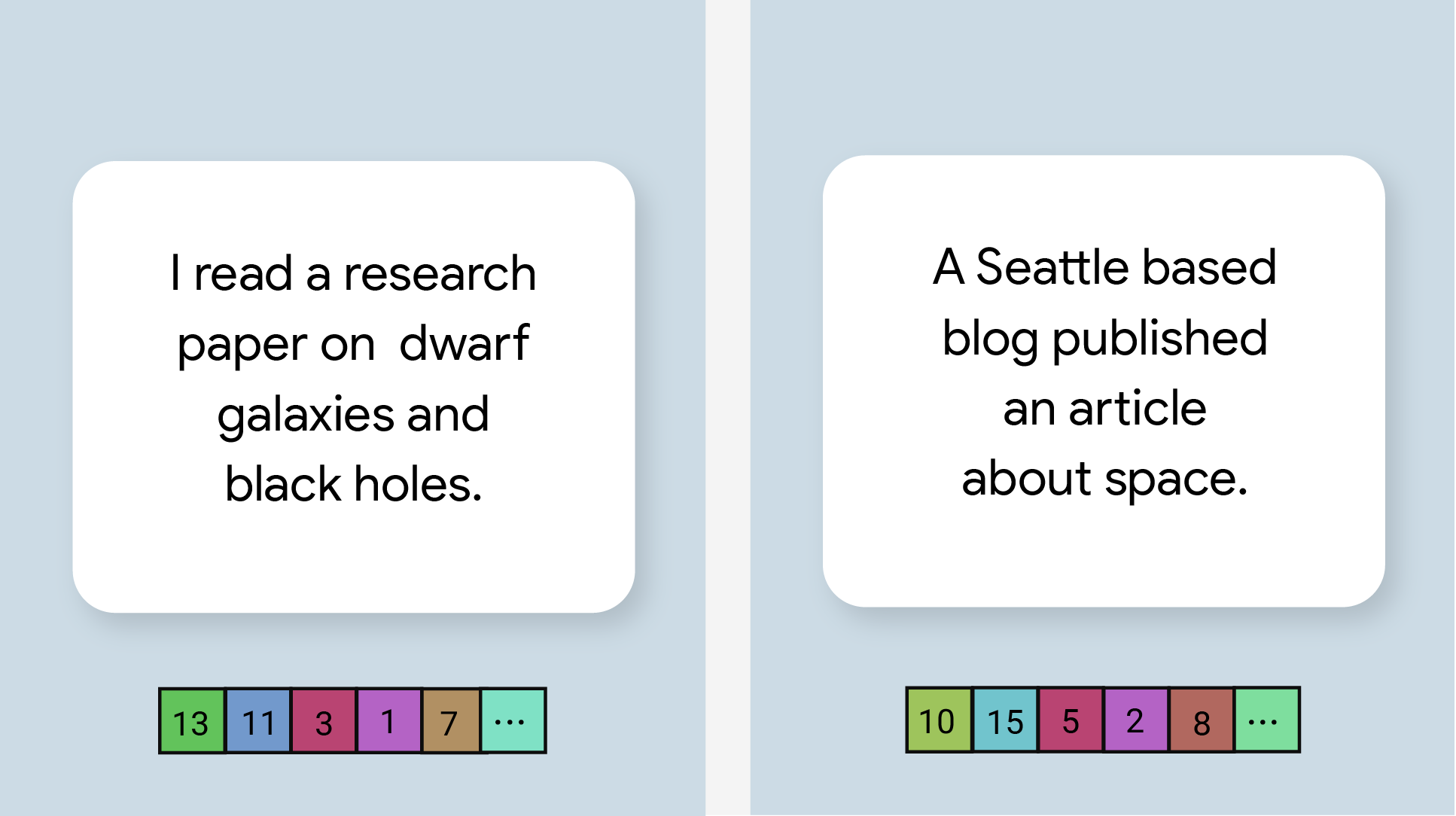

The text embeddings in the first two pairs will have a higher cosine similarity than the embeddings in the third pair because the first two pairs of sentences share a common topic of "trip sentiment" and "phone opinion" respectively while the third pair of sentences do not share a common topic.

Note that although the two sentences in the second pair have opposing sentiments, they have a high similarity score because they share a common topic.

| Model name | Input shape | Quantization type | Versions |

|---|---|---|---|

| Universal Sentence Encoder | string, string, string | None (float32) | Latest |

Task benchmarks

Here's the task benchmarks for the whole pipeline based on the above pre-trained models. The latency result is the average latency on Pixel 6 using CPU / GPU.

| Model Name | CPU Latency | GPU Latency |

|---|---|---|

| Universal Sentence Encoder | 18.21ms | - |