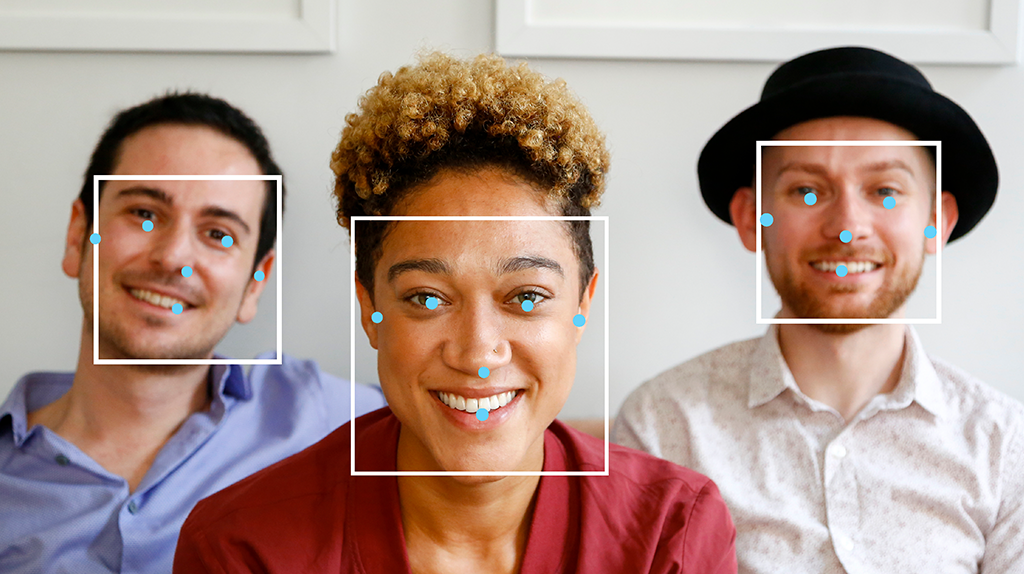

The MediaPipe Face Detector task lets you detect faces in an image or video. You can use this task to locate faces and facial features within a frame. This task uses a machine learning (ML) model that works with single images or a continuous stream of images. The task outputs face locations, along with the following facial key points: left eye, right eye, nose tip, mouth, left eye tragion, and right eye tragion.

Get Started

Start using this task by following one of these implementation guides for your target platform. These platform-specific guides walk you through a basic implementation of this task, including a recommended model, and code example with recommended configuration options:

- Android - Code example - Guide

- Python - Code example- Guide

- Web - Code example - Guide

- iOS - Code example - Guide

Task details

This section describes the capabilities, inputs, outputs, and configuration options of this task.

Features

- Input image processing - Processing includes image rotation, resizing, normalization, and color space conversion.

- Score threshold - Filter results based on prediction scores.

| Task inputs | Task outputs |

|---|---|

The Face Detector accepts an input of one of the following data types:

|

The Face Detector outputs the following results:

|

Configurations options

This task has the following configuration options:

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

running_mode |

Sets the running mode for the task. There are three

modes: IMAGE: The mode for single image inputs. VIDEO: The mode for decoded frames of a video. LIVE_STREAM: The mode for a livestream of input data, such as from a camera. In this mode, resultListener must be called to set up a listener to receive results asynchronously. |

{IMAGE, VIDEO, LIVE_STREAM} |

IMAGE |

min_detection_confidence |

The minimum confidence score for the face detection to be considered successful. | Float [0,1] |

0.5 |

min_suppression_threshold |

The minimum non-maximum-suppression threshold for face detection to be considered overlapped. | Float [0,1] |

0.3 |

result_callback |

Sets the result listener to receive the detection results

asynchronously when the Face Detector is in the live stream

mode. Can only be used when running mode is set to LIVE_STREAM. |

N/A |

Not set |

Models

Face detection models can vary depending on their intended use cases, such as short-range and long-range detection. Models also typically make trade-offs between performance, accuracy, resolution, and resource requirements, and in some cases, include additional features.

The models listed in this section are variants of BlazeFace, a lightweight and accurate face detector optimized for mobile GPU inference. BlazeFace models are suitable for applications like 3D facial keypoint estimation, expression classification, and face region segmentation. BlazeFace uses a lightweight feature extraction network similar to MobileNetV1/V2.

BlazeFace (short-range)

A lightweight model for detecting single or multiple faces within selfie-like images from a smartphone camera or webcam. The model is optimized for front-facing phone camera images at short range. The model architecture uses a Single Shot Detector (SSD) convolutional network technique with a custom encoder. For more information, see the research paper on Single Shot MultiBox Detector.

| Model name | Input shape | Quantization type | Model Card | Versions |

|---|---|---|---|---|

| BlazeFace (short-range) | 128 x 128 | float 16 | info | Latest |

BlazeFace (full-range)

A relatively lightweight model for detecting single or multiple faces within images from a smartphone camera or webcam. The model is optimized for full-range images, like those taken with a back-facing phone camera images. The model architecture uses a technique similar to a CenterNet convolutional network with a custom encoder.

| Model name | Input shape | Quantization type | Model Card | Versions |

|---|---|---|---|---|

| BlazeFace (full-range) | 128 x 128 | float 16 | info | Coming soon |

BlazeFace Sparse (full-range)

A lighter version of the regular full-range BlazeFace model, roughly 60% smaller in size. The model is optimized for full-range images, like those taken with a back-facing phone camera images. The model architecture uses a technique similar to a CenterNet convolutional network with a custom encoder.

| Model name | Input shape | Quantization type | Model Card | Versions |

|---|---|---|---|---|

| BlazeFace Sparse (full-range) | 128 x 128 | float 16 | info | Coming soon |

Task benchmarks

Here's the task benchmarks for the whole pipeline based on the above pre-trained models. The latency result is the average latency on Pixel 6 using CPU / GPU.

| Model Name | CPU Latency | GPU Latency |

|---|---|---|

| BlazeFace (short-range) | 2.94ms | 7.41ms |