The MediaPipe Image Generator task lets you generate images based on a text prompt. This task uses a text-to-image model to generate images using diffusion techniques.

The task accepts a text prompt as input, along with an optional condition image that the model can augment and use as a reference for generation. For more on conditioned text-to-image generation, see On-device diffusion plugins for conditioned text-to-image generation.

Image Generator can also generate images based on specific concepts provided to the model during training or retraining. For more information, see customize with LoRA.

Get Started

Start using this task by following one of these implementation guides for your target platform. These platform-specific guides walk you through a basic implementation of this task, with code examples that use a default model and the recommended configuration options:

- Android - Code example - Guide

- Customize with LoRA - Code example - Colab

Task details

This section describes the capabilities, inputs, outputs, and configuration options of this task.

Features

You can use the Image Generator to implement the following:

- Text-to-image generation - Generate images with a text prompt.

- Image generation with condition images - Generate images with a text prompt and a reference image. Image Generator uses condition images in ways similar to ControlNet.

- Image generation with LoRA weights - Generate images of specific people, objects, and styles with a text prompt using customized model weights.

| Task inputs | Task outputs |

|---|---|

The Image Generator accepts the following inputs:

|

The Image Generator outputs the following results:

|

Configurations options

This task has the following configuration options:

| Option Name | Description | Value Range |

|---|---|---|

imageGeneratorModelDirectory |

The image generator model directory storing the model weights. | PATH |

loraWeightsFilePath |

Sets the path to LoRA weights file. Optional and only applicable if the model was customized with LoRA. | PATH |

errorListener |

Sets an optional error listener. | N/A |

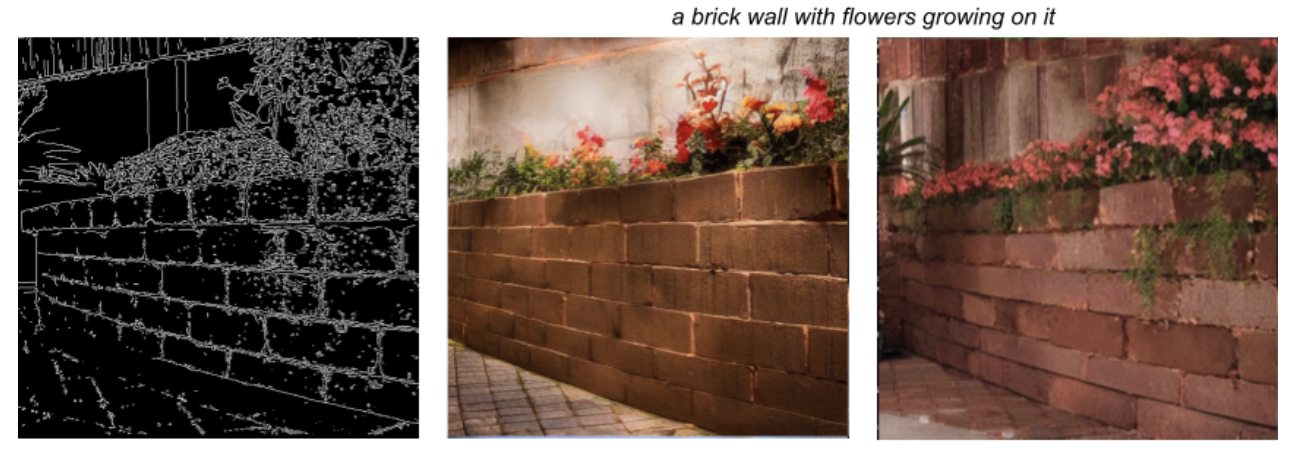

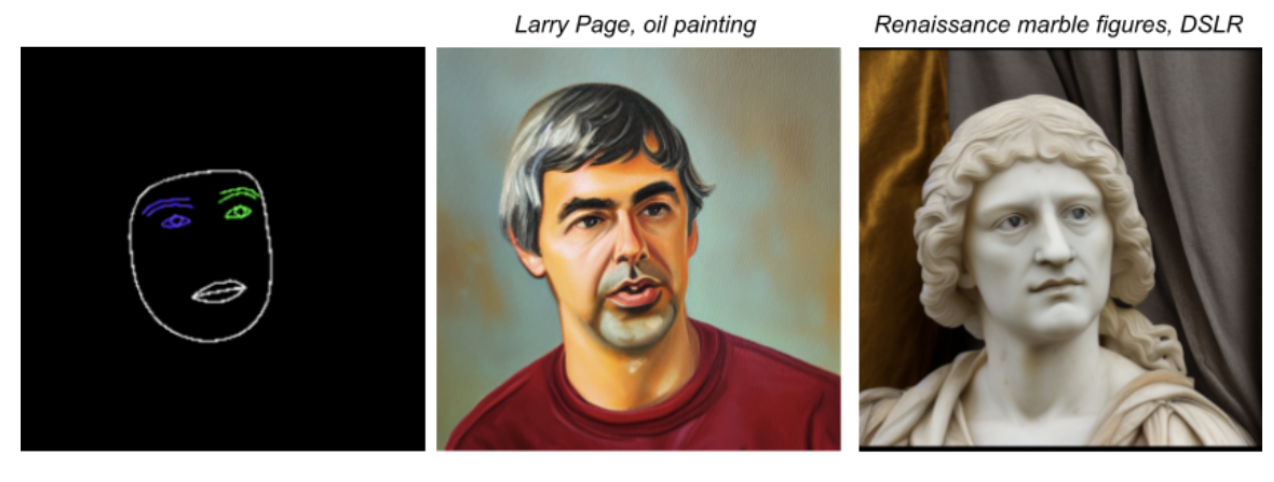

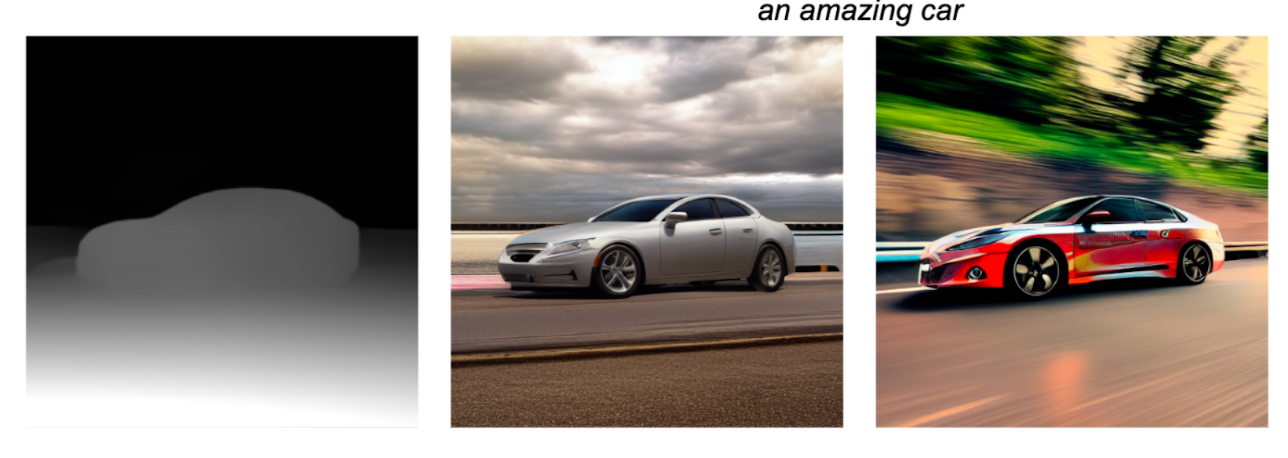

The task also supports plugin models, which lets users include condition images in the task input, which the foundation model can augment and use as a reference for generation. These condition images can be face landmarks, edge outlines, and depth estimates, which the model uses as additional context and information to generate images.

When adding a plugin model to the foundation model, also configure the plugin

options. The Face landmark plugin uses faceConditionOptions, the Canny edge

plugin uses edgeConditionOptions, and the Depth plugin uses

depthConditionOptions.

Canny edge options

Configure the following options in edgeConditionOptions.

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

threshold1 |

First threshold for the hysteresis procedure. | Float |

100 |

threshold2 |

Second threshold for the hysteresis procedure. | Float |

200 |

apertureSize |

Aperture size for the Sobel operator. Typical range is between 3-7. | Integer |

3 |

l2Gradient |

Whether the L2 norm is used to calculate the image gradient magnitude, instead of the default L1 norm. | BOOLEAN |

False |

EdgePluginModelBaseOptions |

The BaseOptions object that sets the path

for the plugin model. |

BaseOptions object |

N/A |

For more information on how these configuration options work, see Canny edge detector.

Face landmark options

Configure the following options in faceConditionOptions.

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

minFaceDetectionConfidence |

The minimum confidence score for the face detection to be considered successful. | Float [0.0,1.0] |

0.5 |

minFacePresenceConfidence |

The minimum confidence score of face presence score in the face landmark detection. | Float [0.0,1.0] |

0.5 |

faceModelBaseOptions |

The BaseOptions object that sets the path

for the model that creates the condition image. |

BaseOptions object |

N/A |

FacePluginModelBaseOptions |

The BaseOptions object that sets the path

for the plugin model. |

BaseOptions object |

N/A |

For more information on how these configuration options work, see the Face Landmarker task.

Depth options

Configure the following options in depthConditionOptions.

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

depthModelBaseOptions |

The BaseOptions object that sets the path

for the model that creates the condition image. |

BaseOptions object |

N/A |

depthPluginModelBaseOptions |

The BaseOptions object that sets the path

for the plugin model. |

BaseOptions object |

N/A |

Models

The Image Generator requires a foundation model, which is a text-to-image AI model that uses diffusion techniques to generate new images. The foundation models listed in this section are lightweight models optimized to run on high-end smartphones.

Plugin models are optional and complement the foundational models, enabling users to provide an additional condition image along with a text prompt, for more specific image generation. Customizing the foundation models using LoRA weights is an option that teach the foundation model about a specific concept, such as an object, person, or style, and inject them into generated images.

Foundation models

The foundation models are latent text-to-image diffusion models that generate

images from a text prompt. The Image Generator requires that the foundation model

match the stable-diffusion-v1-5/stable-diffusion-v1-5 EMA-only model format, based on the

following model:

The following foundation models are also compatible with the Image Generator:

After downloading a foundation model, use the image_generator_converter to convert the model into the appropriate on-device format for the Image Generator.

Install the necessary dependencies:

$ pip install torch typing_extensions numpy Pillow requests pytorch_lightning absl-py

Run the

convert.py

script:

$ python3 convert.py --ckpt_path <ckpt_path> --output_path <output_path>

Plugin models

The plugin models in this section are developed by Google and must be used in combination with a foundation model. Plugin models enable Image Generator to accept a condition image along with a text prompt as input, which lets you control the structure of generated images. The plugin models provide capabilities similar to ControlNet, with a novel architecture specifically for on-device diffusion.

The plugin models must be specified in the base options and may require you to download additional model files. Each plugin has unique requirements for the condition image, which can be generated by the Image Generator.

Canny Edge plugin

The Canny Edge plugin accepts a condition image that outlines the intended edges of the generated image. The foundation model uses the edges implied by the condition image, and generates a new image based on the text prompt. The Image Generator contains built-in capabilities to create condition images, and only requires downloading the plugin model.

The Canny Edge plugin contains the following configuration options:

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

threshold1 |

First threshold for the hysteresis procedure. | Float |

100 |

threshold2 |

Second threshold for the hysteresis procedure. | Float |

200 |

apertureSize |

Aperture size for the Sobel operator. Typical range is between 3-7. | Integer |

3 |

l2Gradient |

Whether the L2 norm is used to calculate the image gradient magnitude, instead of the default L1 norm. | BOOLEAN |

False |

EdgePluginModelBaseOptions |

The BaseOptions object that sets the path

for the plugin model. |

BaseOptions object |

N/A |

For more information on how these configuration options work, see Canny edge detector.

Face Landmark plugin

The Face Landmark plugin accepts the output from the MediaPipe Face Landmarker as the condition image. The Face Landmarker provides a detailed face mesh of a single face, which maps the presence and location of facial features. The foundation model uses the facial mapping implied by the condition image, and generates a new face over the mesh.

The Face landmark plugin also requires the Face Landmarker model bundle to create the condition image. This model bundle is the same bundle used by the Face Landmarker task.

Download Face landmark model bundle

The Face Landmark plugin contains the following configuration options:

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

minFaceDetectionConfidence |

The minimum confidence score for the face detection to be considered successful. | Float [0.0,1.0] |

0.5 |

minFacePresenceConfidence |

The minimum confidence score of face presence score in the face landmark detection. | Float [0.0,1.0] |

0.5 |

faceModelBaseOptions |

The BaseOptions object that sets the path

for the model that creates the condition image. |

BaseOptions object |

N/A |

FacePluginModelBaseOptions |

The BaseOptions object that sets the path

for the plugin model. |

BaseOptions object |

N/A |

For more information on how these configuration options work, see the Face Landmarker task.

Depth plugin

The Depth plugin accepts a condition image that specifies the monocular depth of an object. The foundation model uses the condition image to infer the size and depth of the object to be generated, and generates a new image based on the text prompt.

The Depth plugin also requires a Depth estimation model to create the condition image.

Download Depth estimation model

The Depth plugin contains the following configuration options:

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

depthModelBaseOptions |

The BaseOptions object that sets the path

for the model that creates the condition image. |

BaseOptions object |

N/A |

depthPluginModelBaseOptions |

The BaseOptions object that sets the path

for the plugin model. |

BaseOptions object |

N/A |

Customization with LoRA

Customizing a model with LoRA can enable the Image Generator to generate images based on specific concepts, which are identified by unique tokens during training. With the new LoRA weights after training, the model is able to generate images of the new concept when the token is specified in the text prompt.

Creating LoRA weights requires training a foundation model on images of a specific object, person, or style, which enables the model to recognize the new concept and apply it when generating images. If you are creating LoRa weights to generate images of specific people and faces, only use this solution on your face or faces of people who have given you permission to do so.

Below is the output from a customized model trained on images of teapots from the DreamBooth dataset, using the token "monadikos teapot":

Prompt: a monadikos teapot beside a mirror

The customized model received the token in the prompt and injected a teapot that it learned to depict from the LoRA weights, and places it the image beside a mirror as requested in the prompt.

For more information, see the customization guide, which uses Model Garden on Vertex AI to customize a model by applying LoRA weights to a foundation model.