
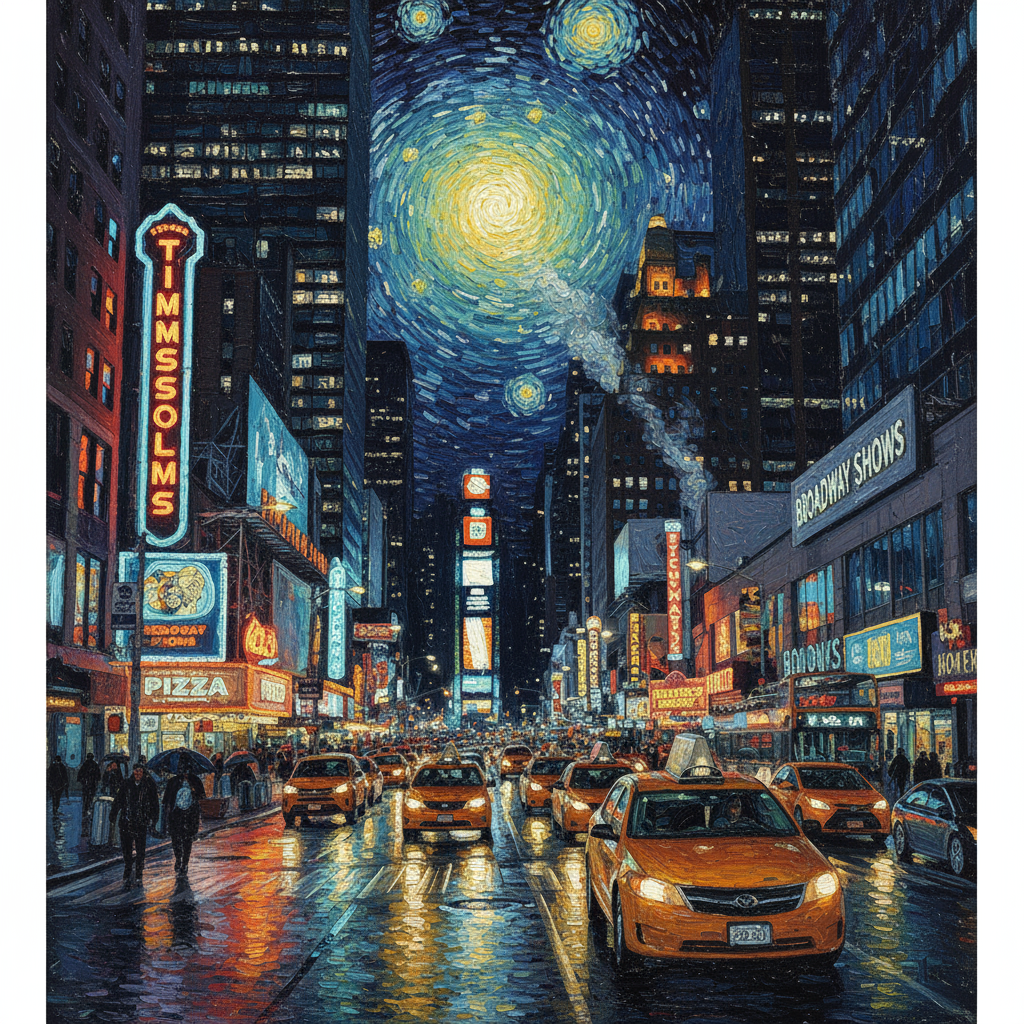

Generated by Nano Banana Pro. Prompt: "Present a clear, 45° top-down isometric miniature 3D scene of London, featuring its most iconic landmarks and architectural elements. Use soft, refined textures with realistic PBR materials and gentle, lifelike lighting and shadows. Integrate the current weather conditions directly into the city environment to create an immersive atmosphere. Use a clean, minimalist composition with a soft, solid-colored background. At the top center, place the title "London" in large bold text, a prominent weather icon beneath it, then the date (small text) and temperature (medium text). All text must be centered with consistent spacing and may slightly overlap the tops of the buildings." Learn more about search grounding and try it in AI Studio.

Generated by Nano Banana Pro. Prompt: "Put this logo on a luxury ad for a banana-scented perfume. The logo is perfectly integrated into the bottle." Try Nano Banana's high-fidelity detail preservation in AI Studio.

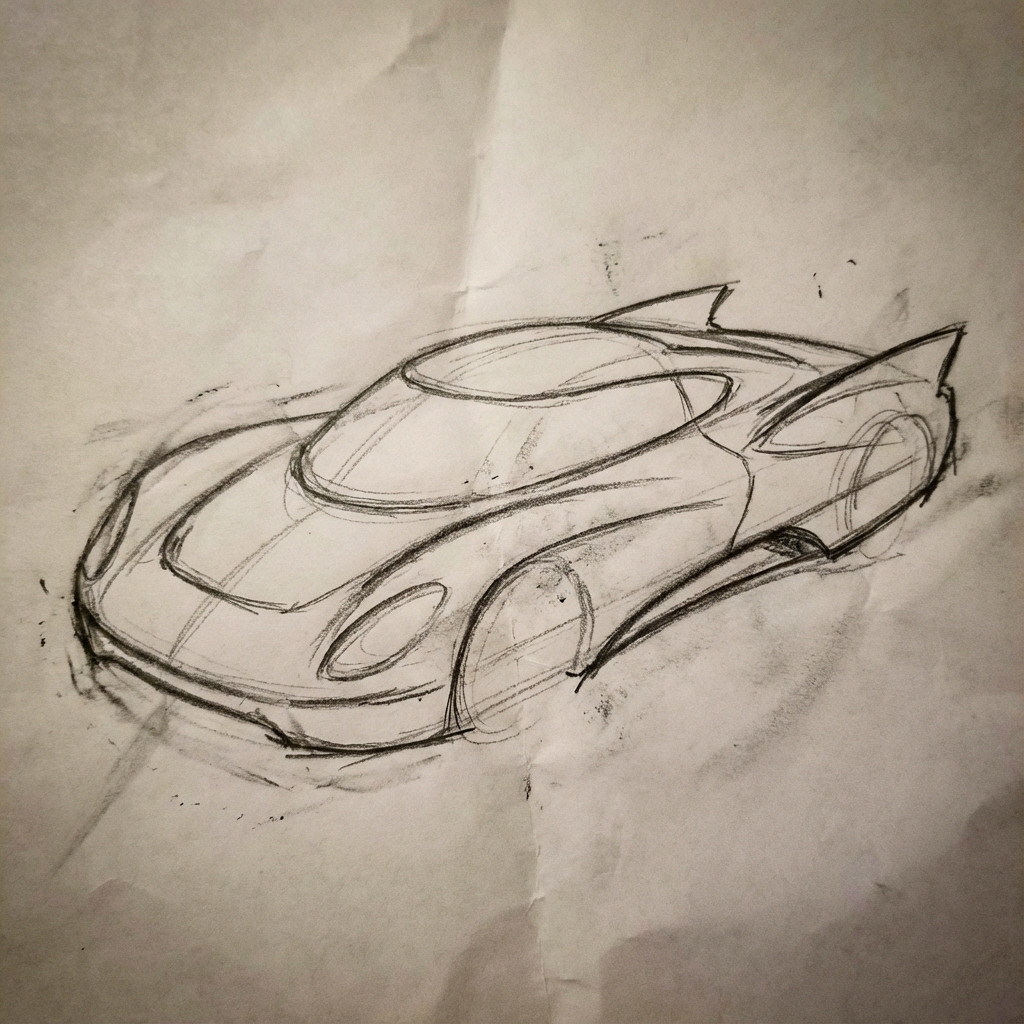

Generated by Nano Banana Pro. Prompt: "Faithfully restore this image with high fidelity to modern photograph quality, in full color, and upscale it to 4K." Generate images at up to 4K resolution. Try Nano Banana in AI Studio.

Generated by Nano Banana Pro. Prompt: "A photo of a glossy magazine cover, with the words "Nano Banana Pro" in large bold type. The text is in a serif font and fills the view. No other text. In front of the text there is a portrait of a person in a beautiful outfit. Put the issue number and today's date in the corner, along with a barcode and a price. The magazine is on a shelf against a brick wall, inside a designer store. A mannequin wears the same outfit."

Generated by Nano Banana Pro. Prompt: "Use search to find out how the Gemini 3 Flash launch has been received. Use this information to write a short article about it (with headings). Return a photo of the article as it appeared in a design-focused glossy magazine. It is a photo of a single folded page, showing the article about Gemini 3 Flash. One hero photo. Headline in serif."

Generated by Nano Banana Pro. Prompt: "A photo of an everyday scene at a busy cafe serving breakfast. In the foreground is an anime man with blue hair, one of the people is a pencil sketch, another is a claymation person." Experiment with different art styles with Nano Banana in AI Studio.

Generated by Nano Banana Pro. Prompt: "An icon representing a cute dog. The background is white. Make the icons in a colorful and tactile 3D style. No text." Create icons, stickers, and assets with Nano Banana in AI Studio.

Generated by Nano Banana Pro. Prompt: "Make a photo that is perfectly isometric. It is not a miniature, it is a captured photo that just happened to be perfectly isometric. It is a photo of a beautiful modern office interior." Try photorealistic image generation in AI Studio.

Nano Banana is the name for Gemini's native image generation capabilities. Gemini can generate and process images conversationally from text, images, or a combination of both, so you can create, edit, and iterate on visuals with unprecedented control.

Nano Banana refers to two distinct models available in the Gemini API:

- Nano Banana: the Gemini 2.5 Flash Image model (gemini-2.5-flash-image). This model is designed for speed and efficiency, and is optimized for high-volume, low-latency tasks.
- Nano Banana Pro: the Gemini 3 Pro Image Preview model (gemini-3-pro-image-preview). This model is designed for professional asset production. It uses advanced reasoning ("Thinking") to follow complex instructions and render text with high fidelity.

All generated images include a SynthID watermark.
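As a minimal sketch of how an application might choose between the two models listed above, the helper below returns one of the two model IDs from this guide. The function name `pick_image_model` and its selection rule are illustrative assumptions, not part of the Gemini API.

```python
# Model IDs as given in this guide.
NANO_BANANA = "gemini-2.5-flash-image"
NANO_BANANA_PRO = "gemini-3-pro-image-preview"


def pick_image_model(needs_text_rendering: bool = False,
                     needs_high_resolution: bool = False) -> str:
    """Return a model ID for an image task.

    Hypothetical rule of thumb: use the Pro model when the task needs
    legible rendered text or 2K/4K output, otherwise use the faster model.
    """
    if needs_text_rendering or needs_high_resolution:
        return NANO_BANANA_PRO
    return NANO_BANANA


print(pick_image_model())
print(pick_image_model(needs_text_rendering=True))
```

The returned string can be passed as the `model` argument in the examples that follow.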

Image generation (text-to-image)

Python

from google import genai
from google.genai import types
from PIL import Image

client = genai.Client()

prompt = "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt],
)

# The response can mix text parts and image parts.
for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  // The response can mix text parts and image parts.
  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("gemini-native-image.png", buffer);
      console.log("Image saved as gemini-native-image.png");
    }
  }
}

main();

Go

package main

import (
    "context"
    "fmt"
    "log"
    "os"

    "google.golang.org/genai"
)

func main() {
    ctx := context.Background()
    client, err := genai.NewClient(ctx, nil)
    if err != nil {
        log.Fatal(err)
    }

    // The last argument is the optional generation config (nil uses defaults).
    result, err := client.Models.GenerateContent(
        ctx,
        "gemini-2.5-flash-image",
        genai.Text("Create a picture of a nano banana dish in a "+
            "fancy restaurant with a Gemini theme"),
        nil,
    )
    if err != nil {
        log.Fatal(err)
    }

    for _, part := range result.Candidates[0].Content.Parts {
        if part.Text != "" {
            fmt.Println(part.Text)
        } else if part.InlineData != nil {
            imageBytes := part.InlineData.Data
            outputFilename := "gemini_generated_image.png"
            if err := os.WriteFile(outputFilename, imageBytes, 0644); err != nil {
                log.Fatal(err)
            }
        }
    }
}

Java

import com.google.genai.Client;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.Part;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class TextToImage {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .build();

      GenerateContentResponse response = client.models.generateContent(
          "gemini-2.5-flash-image",
          "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme",
          config);

      // The response can mix text parts and image parts.
      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("_01_generated_image.png"), blob.data().get());
          }
        }
      }
    }
  }
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "contents": [{
      "parts": [
        {"text": "Create a picture of a nano banana dish in a fancy restaurant with a Gemini theme"}
      ]
    }]
  }'
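The REST call above returns JSON rather than SDK objects, so the image has to be decoded by hand. The sketch below separates text parts from image parts in a parsed response. It assumes the response uses the camelCase `inlineData` key with base64-encoded `data`, mirroring the parts handled in the SDK examples; the function name `extract_parts` and the sample payload are made up for illustration.

```python
import base64
from typing import Any, Dict, List, Tuple


def extract_parts(response_json: Dict[str, Any]) -> Tuple[List[str], List[bytes]]:
    """Split a parsed generateContent response into text strings and raw image bytes."""
    texts: List[str] = []
    images: List[bytes] = []
    for candidate in response_json.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            if "text" in part:
                texts.append(part["text"])
            elif "inlineData" in part:
                # Assumption: image bytes arrive base64-encoded under inlineData.data.
                images.append(base64.b64decode(part["inlineData"]["data"]))
    return texts, images


# Hand-made sample shaped like a response; not real API output.
sample = {
    "candidates": [{
        "content": {
            "parts": [
                {"text": "Here is your image."},
                {"inlineData": {
                    "mimeType": "image/png",
                    "data": base64.b64encode(b"fake-png-bytes").decode("ascii"),
                }},
            ]
        }
    }]
}

texts, images = extract_parts(sample)
print(texts, len(images))
```

Each entry in `images` can then be written to disk with `open(path, "wb")`.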

Image editing (text-and-image-to-image)

Reminder: Make sure you have the necessary rights to any images you upload. Do not generate content that infringes on others' rights, including videos or images that deceive, harass, or harm. Your use of this generative AI service is subject to our Prohibited Use Policy.

Provide an image and use text prompts to add, remove, or modify elements, change the style, or adjust the color grading.

The following example demonstrates uploading base64-encoded images. For multiple images, larger payloads, and supported MIME types, see the Image understanding page.

Python

from google import genai
from google.genai import types
from PIL import Image

client = genai.Client()

# No trailing comma inside the parentheses: the prompt must be a string, not a tuple.
prompt = (
    "Create a picture of my cat eating a nano-banana in a "
    "fancy restaurant under the Gemini constellation"
)

image = Image.open("/path/to/cat_image.png")

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt, image],
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("generated_image.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  // Read the source image and base64-encode it for inline upload.
  const imagePath = "path/to/cat_image.png";
  const imageData = fs.readFileSync(imagePath);
  const base64Image = imageData.toString("base64");

  const prompt = [
    { text: "Create a picture of my cat eating a nano-banana in a " +
            "fancy restaurant under the Gemini constellation" },
    {
      inlineData: {
        mimeType: "image/png",
        data: base64Image,
      },
    },
  ];

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("gemini-native-image.png", buffer);
      console.log("Image saved as gemini-native-image.png");
    }
  }
}

main();

Go

package main

import (
    "context"
    "fmt"
    "log"
    "os"

    "google.golang.org/genai"
)

func main() {
    ctx := context.Background()
    client, err := genai.NewClient(ctx, nil)
    if err != nil {
        log.Fatal(err)
    }

    imagePath := "/path/to/cat_image.png"
    imgData, err := os.ReadFile(imagePath)
    if err != nil {
        log.Fatal(err)
    }

    // One text part with the instruction, one inline part with the image bytes.
    parts := []*genai.Part{
        genai.NewPartFromText("Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation"),
        &genai.Part{
            InlineData: &genai.Blob{
                MIMEType: "image/png",
                Data:     imgData,
            },
        },
    }

    contents := []*genai.Content{
        genai.NewContentFromParts(parts, genai.RoleUser),
    }

    // The last argument is the optional generation config (nil uses defaults).
    result, err := client.Models.GenerateContent(
        ctx,
        "gemini-2.5-flash-image",
        contents,
        nil,
    )
    if err != nil {
        log.Fatal(err)
    }

    for _, part := range result.Candidates[0].Content.Parts {
        if part.Text != "" {
            fmt.Println(part.Text)
        } else if part.InlineData != nil {
            imageBytes := part.InlineData.Data
            outputFilename := "gemini_generated_image.png"
            if err := os.WriteFile(outputFilename, imageBytes, 0644); err != nil {
                log.Fatal(err)
            }
        }
    }
}

Java

import com.google.genai.Client;
import com.google.genai.types.Content;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.Part;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class TextAndImageToImage {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .build();

      GenerateContentResponse response = client.models.generateContent(
          "gemini-2.5-flash-image",
          Content.fromParts(
              Part.fromText("""
                  Create a picture of my cat eating a nano-banana in
                  a fancy restaurant under the Gemini constellation
                  """),
              Part.fromBytes(
                  Files.readAllBytes(
                      Path.of("src/main/resources/cat.jpg")),
                  "image/jpeg")),
          config);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("gemini_generated_image.png"), blob.data().get());
          }
        }
      }
    }
  }
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H 'Content-Type: application/json' \
  -d "{
    \"contents\": [{
      \"parts\":[
        {\"text\": \"Create a picture of my cat eating a nano-banana in a fancy restaurant under the Gemini constellation\"},
        {
          \"inline_data\": {
            \"mime_type\":\"image/jpeg\",
            \"data\": \"<BASE64_IMAGE_DATA>\"
          }
        }
      ]
    }]
  }"
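To make the base64 upload step concrete, here is a minimal sketch that builds the same JSON body as the REST example above from raw image bytes. The helper name `build_edit_payload` is hypothetical; the field names (`contents`, `parts`, `inline_data`, `mime_type`, `data`) are copied from that example.

```python
import base64
import json
from typing import Any, Dict


def build_edit_payload(prompt: str, image_bytes: bytes,
                       mime_type: str = "image/jpeg") -> Dict[str, Any]:
    """Build a generateContent request body with one text part and one inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [{
            "parts": [
                {"text": prompt},
                {"inline_data": {"mime_type": mime_type, "data": encoded}},
            ]
        }]
    }


# Placeholder bytes stand in for a real image file read with open(path, "rb").
payload = build_edit_payload("Make the sky purple.", b"\xff\xd8\xff fake jpeg")
print(json.dumps(payload)[:60])
```

The resulting dictionary can be serialized with `json.dumps` and sent as the request body.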

Multi-turn image editing

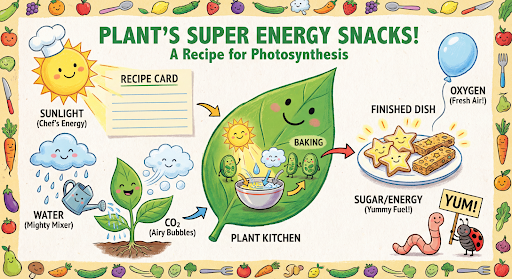

Keep generating and editing images conversationally. Chat, or multi-turn conversation, is the recommended way to iterate on images. The following example shows a prompt that generates an infographic about photosynthesis.

Python

from google import genai
from google.genai import types

client = genai.Client()

# The chat keeps the conversation history, so later turns can refer to earlier images.
chat = client.chats.create(
    model="gemini-3-pro-image-preview",
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        tools=[{"google_search": {}}]
    )
)

message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

response = chat.send_message(message)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("photosynthesis.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

const ai = new GoogleGenAI({});

async function main() {
  // The chat keeps the conversation history, so later turns can refer to earlier images.
  const chat = ai.chats.create({
    model: "gemini-3-pro-image-preview",
    config: {
      responseModalities: ['TEXT', 'IMAGE'],
      tools: [{googleSearch: {}}],
    },
  });

  const message = "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader.";

  let response = await chat.sendMessage({message});

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("photosynthesis.png", buffer);
      console.log("Image saved as photosynthesis.png");
    }
  }
}

main();

Go

package main

import (
    "context"
    "fmt"
    "log"
    "os"

    "google.golang.org/genai"
)

func main() {
    ctx := context.Background()
    client, err := genai.NewClient(ctx, nil)
    if err != nil {
        log.Fatal(err)
    }

    // Rewritten against the google.golang.org/genai chat API (Chats.Create and
    // Chat.SendMessage); verify the signatures against your SDK version.
    config := &genai.GenerateContentConfig{
        ResponseModalities: []string{"TEXT", "IMAGE"},
    }
    chat, err := client.Chats.Create(ctx, "gemini-3-pro-image-preview", config, nil)
    if err != nil {
        log.Fatal(err)
    }

    message := "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plant's favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids' cookbook, suitable for a 4th grader."

    resp, err := chat.SendMessage(ctx, genai.Part{Text: message})
    if err != nil {
        log.Fatal(err)
    }

    for _, part := range resp.Candidates[0].Content.Parts {
        if part.Text != "" {
            fmt.Println(part.Text)
        } else if part.InlineData != nil {
            if err := os.WriteFile("photosynthesis.png", part.InlineData.Data, 0644); err != nil {
                log.Fatal(err)
            }
        }
    }
}

Java

import com.google.genai.Chat;
import com.google.genai.Client;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.GoogleSearch;
import com.google.genai.types.ImageConfig;
import com.google.genai.types.Part;
import com.google.genai.types.Tool;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class MultiturnImageEditing {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {

      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .tools(Tool.builder()
              .googleSearch(GoogleSearch.builder().build())
              .build())
          .build();

      // The chat keeps the conversation history across turns.
      Chat chat = client.chats.create("gemini-3-pro-image-preview", config);

      GenerateContentResponse response = chat.sendMessage("""
          Create a vibrant infographic that explains photosynthesis
          as if it were a recipe for a plant's favorite food.
          Show the "ingredients" (sunlight, water, CO2)
          and the "finished dish" (sugar/energy).
          The style should be like a page from a colorful
          kids' cookbook, suitable for a 4th grader.
          """);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("photosynthesis.png"), blob.data().get());
          }
        }
      }
      // ...
    }
  }
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "contents": [{
      "role": "user",
      "parts": [
        {"text": "Create a vibrant infographic that explains photosynthesis as if it were a recipe for a plants favorite food. Show the \"ingredients\" (sunlight, water, CO2) and the \"finished dish\" (sugar/energy). The style should be like a page from a colorful kids cookbook, suitable for a 4th grader."}
      ]
    }],
    "generationConfig": {
      "responseModalities": ["TEXT", "IMAGE"]
    }
  }'

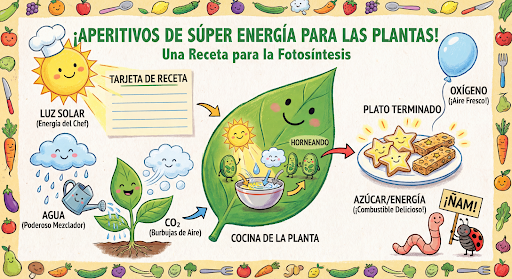

You can then use the same chat to change the language of the graphic to Spanish.

Python

# Continues the chat created in the previous example.
message = "Update this infographic to be in Spanish. Do not change any other elements of the image."
aspect_ratio = "16:9"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution = "2K"  # "1K", "2K", "4K"

response = chat.send_message(
    message,
    config=types.GenerateContentConfig(
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    ))

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("photosynthesis_spanish.png")

JavaScript

// Continues inside main() from the previous example; new names avoid
// redeclaring `message` and `response` in the same scope.
const followUpMessage = 'Update this infographic to be in Spanish. Do not change any other elements of the image.';
const aspectRatio = '16:9';
const resolution = '2K';

response = await chat.sendMessage({
  message: followUpMessage,
  config: {
    responseModalities: ['TEXT', 'IMAGE'],
    imageConfig: {
      aspectRatio: aspectRatio,
      imageSize: resolution,
    },
    tools: [{googleSearch: {}}],
  },
});

for (const part of response.candidates[0].content.parts) {
  if (part.text) {
    console.log(part.text);
  } else if (part.inlineData) {
    const imageData = part.inlineData.data;
    const buffer = Buffer.from(imageData, "base64");
    fs.writeFileSync("photosynthesis2.png", buffer);
    console.log("Image saved as photosynthesis2.png");
  }
}

Go

// Continues inside main() from the previous example.
// Assumption: with google.golang.org/genai the chat config is set when the
// chat is created, so to request a 16:9 aspect ratio at 2K, add
//   ImageConfig: &genai.ImageConfig{AspectRatio: "16:9", ImageSize: "2K"}
// to the config passed to client.Chats.Create. Verify against your SDK version.
// Supported aspect ratios: "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
// Supported sizes: "1K", "2K", "4K"
message = "Update this infographic to be in Spanish. Do not change any other elements of the image."

resp, err = chat.SendMessage(ctx, genai.Part{Text: message})
if err != nil {
    log.Fatal(err)
}

for _, part := range resp.Candidates[0].Content.Parts {
    if part.Text != "" {
        fmt.Println(part.Text)
    } else if part.InlineData != nil {
        if err := os.WriteFile("photosynthesis_spanish.png", part.InlineData.Data, 0644); err != nil {
            log.Fatal(err)
        }
    }
}

Java

// Continues inside the try block from the previous example.
String aspectRatio = "16:9"; // "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
String resolution = "2K"; // "1K", "2K", "4K"

config = GenerateContentConfig.builder()
    .responseModalities("TEXT", "IMAGE")
    .imageConfig(ImageConfig.builder()
        .aspectRatio(aspectRatio)
        .imageSize(resolution)
        .build())
    .build();

response = chat.sendMessage(
    "Update this infographic to be in Spanish. " +
    "Do not change any other elements of the image.",
    config);

for (Part part : response.parts()) {
  if (part.text().isPresent()) {
    System.out.println(part.text().get());
  } else if (part.inlineData().isPresent()) {
    var blob = part.inlineData().get();
    if (blob.data().isPresent()) {
      Files.write(Paths.get("photosynthesis_spanish.png"), blob.data().get());
    }
  }
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H 'Content-Type: application/json' \
  -d '{
    "contents": [
      {
        "role": "user",
        "parts": [{"text": "Create a vibrant infographic that explains photosynthesis..."}]
      },
      {
        "role": "model",
        "parts": [{"inline_data": {"mime_type": "image/png", "data": "<PREVIOUS_IMAGE_DATA>"}}]
      },
      {
        "role": "user",
        "parts": [{"text": "Update this infographic to be in Spanish. Do not change any other elements of the image."}]
      }
    ],
    "tools": [{"google_search": {}}],
    "generationConfig": {
      "responseModalities": ["TEXT", "IMAGE"],
      "imageConfig": {
        "aspectRatio": "16:9",
        "imageSize": "2K"
      }
    }
  }'
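Unlike the SDK chat helpers, the REST request above has to carry the conversation history itself: the first user prompt, the model's previous image, and the new instruction. The sketch below assembles that body. The helper names are hypothetical; the field names are taken from the REST example.

```python
from typing import Any, Dict


def user_turn(text: str) -> Dict[str, Any]:
    """A user message containing a single text part."""
    return {"role": "user", "parts": [{"text": text}]}


def model_image_turn(image_b64: str, mime_type: str = "image/png") -> Dict[str, Any]:
    """A model message containing the previously generated image (base64 string)."""
    return {
        "role": "model",
        "parts": [{"inline_data": {"mime_type": mime_type, "data": image_b64}}],
    }


def build_followup_request(first_prompt: str, previous_image_b64: str,
                           followup_prompt: str, aspect_ratio: str = "16:9",
                           image_size: str = "2K") -> Dict[str, Any]:
    """Assemble a multi-turn request: prompt, prior image, then the edit instruction."""
    return {
        "contents": [
            user_turn(first_prompt),
            model_image_turn(previous_image_b64),
            user_turn(followup_prompt),
        ],
        "tools": [{"google_search": {}}],
        "generationConfig": {
            "responseModalities": ["TEXT", "IMAGE"],
            "imageConfig": {"aspectRatio": aspect_ratio, "imageSize": image_size},
        },
    }


request = build_followup_request(
    "Create a vibrant infographic that explains photosynthesis...",
    "<PREVIOUS_IMAGE_DATA>",
    "Update this infographic to be in Spanish.",
)
print([turn["role"] for turn in request["contents"]])
```

Keeping the turns in order matters, since the model reads the previous image as its own earlier output.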

New with Gemini 3 Pro Image

Gemini 3 Pro Image (gemini-3-pro-image-preview) is a state-of-the-art image generation and editing model optimized for professional asset production. Designed to handle the most demanding workflows through advanced reasoning, it is well suited to complex, multi-turn creation and editing tasks.

- High-resolution output: built-in generation capabilities for 1K, 2K, and 4K images.
- Advanced text rendering: can generate legible, stylized text for infographics, menus, diagrams, and marketing assets.
- Grounding with Google Search: the model can use Google Search as a tool to verify facts and generate images based on real-time data (e.g., current weather maps, stock charts, recent events).
- Thinking mode: the model uses a "thinking" process to reason through complex prompts. It generates interim "thought images" (visible in the backend but not charged) to refine the composition before producing the final high-quality output.
- Up to 14 reference images: you can now combine up to 14 reference images to produce the final image.

Use up to 14 reference images

Gemini 3 Pro Image Preview lets you combine up to 14 reference images. These 14 images can include:

- Up to 6 images of objects, reproduced with high fidelity, to include in the final image
- Up to 5 images of humans, to maintain character consistency

Python

from google import genai
from google.genai import types
from PIL import Image

prompt = "An office group photo of these people, they are making funny faces."
aspect_ratio = "5:4"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"
resolution = "2K"  # "1K", "2K", "4K"

client = genai.Client()

# Pass the prompt followed by the reference images (five people here).
response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=[
        prompt,
        Image.open('person1.png'),
        Image.open('person2.png'),
        Image.open('person3.png'),
        Image.open('person4.png'),
        Image.open('person5.png'),
    ],
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("office.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    'An office group photo of these people, they are making funny faces.';
  const aspectRatio = '5:4';
  const resolution = '2K';

  // Load the five reference photos and base64-encode them for inline upload.
  const imageParts = [
    "person1.png",
    "person2.png",
    "person3.png",
    "person4.png",
    "person5.png",
  ].map((path) => ({
    inlineData: {
      mimeType: "image/png",
      data: fs.readFileSync(path).toString("base64"),
    },
  }));

  const contents = [{ text: prompt }, ...imageParts];

  const response = await ai.models.generateContent({
    model: 'gemini-3-pro-image-preview',
    contents: contents,
    config: {
      responseModalities: ['TEXT', 'IMAGE'],
      imageConfig: {
        aspectRatio: aspectRatio,
        imageSize: resolution,
      },
    },
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("image.png", buffer);
      console.log("Image saved as image.png");
    }
  }
}

main();

Go

package main

import (
    "context"
    "fmt"
    "log"
    "os"

    "google.golang.org/genai"
)

func main() {
    ctx := context.Background()
    client, err := genai.NewClient(ctx, nil)
    if err != nil {
        log.Fatal(err)
    }

    // Rewritten against the google.golang.org/genai API used in the earlier Go
    // examples. Assumption: ImageConfig with AspectRatio and ImageSize is
    // available in your SDK version.
    config := &genai.GenerateContentConfig{
        ResponseModalities: []string{"TEXT", "IMAGE"},
        ImageConfig: &genai.ImageConfig{
            AspectRatio: "5:4",
            ImageSize:   "2K",
        },
    }

    parts := []*genai.Part{
        genai.NewPartFromText("An office group photo of these people, they are making funny faces."),
    }
    // Attach each reference photo as an inline image part.
    for _, path := range []string{"person1.png", "person2.png", "person3.png", "person4.png", "person5.png"} {
        data, err := os.ReadFile(path)
        if err != nil {
            log.Fatal(err)
        }
        parts = append(parts, &genai.Part{
            InlineData: &genai.Blob{MIMEType: "image/png", Data: data},
        })
    }

    contents := []*genai.Content{
        genai.NewContentFromParts(parts, genai.RoleUser),
    }

    resp, err := client.Models.GenerateContent(ctx, "gemini-3-pro-image-preview", contents, config)
    if err != nil {
        log.Fatal(err)
    }

    for _, part := range resp.Candidates[0].Content.Parts {
        if part.Text != "" {
            fmt.Println(part.Text)
        } else if part.InlineData != nil {
            if err := os.WriteFile("office.png", part.InlineData.Data, 0644); err != nil {
                log.Fatal(err)
            }
        }
    }
}

Java

import com.google.genai.Client;
import com.google.genai.types.Content;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.ImageConfig;
import com.google.genai.types.Part;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class GroupPhoto {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .imageConfig(ImageConfig.builder()
              .aspectRatio("5:4")
              .imageSize("2K")
              .build())
          .build();

      // Pass the prompt followed by the reference images (five people here).
      GenerateContentResponse response = client.models.generateContent(
          "gemini-3-pro-image-preview",
          Content.fromParts(
              Part.fromText("An office group photo of these people, they are making funny faces."),
              Part.fromBytes(Files.readAllBytes(Path.of("person1.png")), "image/png"),
              Part.fromBytes(Files.readAllBytes(Path.of("person2.png")), "image/png"),
              Part.fromBytes(Files.readAllBytes(Path.of("person3.png")), "image/png"),
              Part.fromBytes(Files.readAllBytes(Path.of("person4.png")), "image/png"),
              Part.fromBytes(Files.readAllBytes(Path.of("person5.png")), "image/png")
          ), config);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("office.png"), blob.data().get());
          }
        }
      }
    }
  }
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H 'Content-Type: application/json' \
  -d "{
    \"contents\": [{
      \"parts\":[
        {\"text\": \"An office group photo of these people, they are making funny faces.\"},
        {\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_1>\"}},
        {\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_2>\"}},
        {\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_3>\"}},
        {\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_4>\"}},
        {\"inline_data\": {\"mime_type\":\"image/png\", \"data\": \"<BASE64_DATA_IMG_5>\"}}
      ]
    }],
    \"generationConfig\": {
      \"responseModalities\": [\"TEXT\", \"IMAGE\"],
      \"imageConfig\": {
        \"aspectRatio\": \"5:4\",
        \"imageSize\": \"2K\"
      }
    }
  }"
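The reference-image limits described in this section (14 in total, at most 6 objects and at most 5 humans) can be checked on the client before sending a request. This is a minimal sketch. The function name and the idea of pre-classifying images into objects, people, and other are assumptions for illustration; the API itself does not take such labels.

```python
from typing import List

MAX_TOTAL_REFERENCES = 14   # limits as stated in this guide
MAX_OBJECT_REFERENCES = 6
MAX_PERSON_REFERENCES = 5


def check_reference_images(num_objects: int, num_people: int,
                           num_other: int = 0) -> List[str]:
    """Return a list of limit violations; an empty list means the mix is within limits."""
    problems: List[str] = []
    if num_objects > MAX_OBJECT_REFERENCES:
        problems.append(f"too many object images: {num_objects} > {MAX_OBJECT_REFERENCES}")
    if num_people > MAX_PERSON_REFERENCES:
        problems.append(f"too many human images: {num_people} > {MAX_PERSON_REFERENCES}")
    total = num_objects + num_people + num_other
    if total > MAX_TOTAL_REFERENCES:
        problems.append(f"too many reference images: {total} > {MAX_TOTAL_REFERENCES}")
    return problems


print(check_reference_images(num_objects=0, num_people=5))
print(check_reference_images(num_objects=7, num_people=5, num_other=3))
```

The group-photo example above, with five people and no objects, passes this check.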

Grounding with Google Search

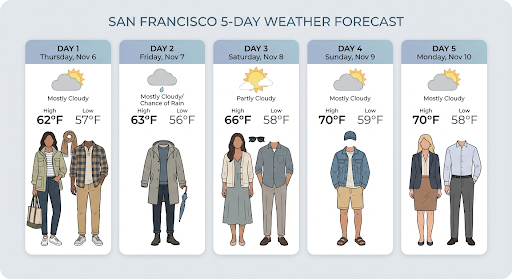

Use the Google Search tool to generate images based on real-time information, such as weather forecasts, stock charts, or recent events.

Note that when you use Grounding with Google Search for image generation, image-based search results are not passed to the generation model and are excluded from the response.

Python

from google import genai
from google.genai import types  # needed for GenerateContentConfig and ImageConfig

prompt = "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"
aspect_ratio = "16:9"  # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

client = genai.Client()

response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=prompt,
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
        ),
        # Enables grounding with Google Search.
        tools=[{"google_search": {}}]
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("weather.png")

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt = 'Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day';
  const aspectRatio = '16:9';
  const resolution = '2K';

  const response = await ai.models.generateContent({
    model: 'gemini-3-pro-image-preview',
    contents: prompt,
    config: {
      responseModalities: ['TEXT', 'IMAGE'],
      imageConfig: {
        aspectRatio: aspectRatio,
        imageSize: resolution,
      },
      // Enables grounding with Google Search.
      tools: [{ googleSearch: {} }]
    },
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("image.png", buffer);
      console.log("Image saved as image.png");
    }
  }
}

main();

Java

import com.google.genai.Client;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.GoogleSearch;
import com.google.genai.types.ImageConfig;
import com.google.genai.types.Part;
import com.google.genai.types.Tool;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class SearchGrounding {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .imageConfig(ImageConfig.builder()
              .aspectRatio("16:9")
              .build())
          // Enables grounding with Google Search.
          .tools(Tool.builder()
              .googleSearch(GoogleSearch.builder().build())
              .build())
          .build();

      GenerateContentResponse response = client.models.generateContent(
          "gemini-3-pro-image-preview", """
              Visualize the current weather forecast for the next 5 days
              in San Francisco as a clean, modern weather chart.
              Add a visual on what I should wear each day
              """,
          config);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("weather.png"), blob.data().get());
          }
        }
      }
    }
  }
}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Visualize the current weather forecast for the next 5 days in San Francisco as a clean, modern weather chart. Add a visual on what I should wear each day"}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "16:9"}

}

}'

The response includes groundingMetadata, which contains the following required fields:

- searchEntryPoint: contains the HTML and CSS needed to render the required search suggestions.
- groundingChunks: returns the top three web sources used to ground the generated image.
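As a rough sketch of reading these two fields, the helper below walks a response that has already been parsed into a Python dict (for example, the JSON body returned by the REST call). The function name `summarize_grounding` is hypothetical, and the nested keys `web.uri`, `web.title`, and `renderedContent` are assumptions not stated on this page, so check them against the response you actually receive.

```python
from typing import Any


def summarize_grounding(response: dict[str, Any]) -> dict[str, Any]:
    """Collect grounding sources and the search-suggestion HTML, if present."""
    candidates = response.get("candidates") or [{}]
    metadata = candidates[0].get("groundingMetadata", {})

    # Assumption: each grounding chunk looks like {"web": {"uri": ..., "title": ...}}.
    sources = [
        {"uri": chunk["web"].get("uri"), "title": chunk["web"].get("title")}
        for chunk in metadata.get("groundingChunks", [])
        if "web" in chunk
    ]

    # Assumption: the HTML/CSS snippet is stored under "renderedContent".
    html = metadata.get("searchEntryPoint", {}).get("renderedContent")
    return {"sources": sources, "search_html": html}


example = {
    "candidates": [{
        "groundingMetadata": {
            "searchEntryPoint": {"renderedContent": "<div>suggestions</div>"},
            "groundingChunks": [
                {"web": {"uri": "https://example.com/forecast", "title": "Forecast"}},
            ],
        }
    }]
}
summary = summarize_grounding(example)
print(summary["sources"])
```

Returning plain dicts keeps the sketch independent of any SDK; a response without grounding metadata simply yields an empty source list.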

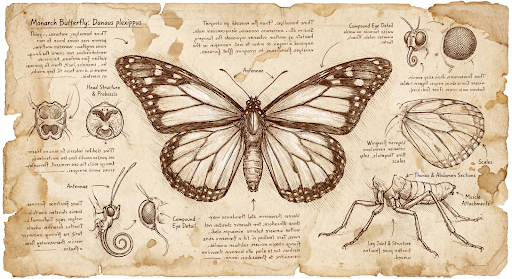

Generate images up to 4K resolution

Gemini 3 Pro Image generates 1K images by default, but it can also output 2K and 4K images. To generate higher-resolution assets, specify image_size in generation_config.

You must use an uppercase "K" (e.g., 1K, 2K, 4K). Lowercase values (e.g., 1k) will be rejected.
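Because a lowercase value is rejected by the API, it can help to catch the mistake before sending a request. The following is a minimal client-side sketch; `ALLOWED_IMAGE_SIZES` and `validate_image_size` are hypothetical names introduced here, not part of any SDK.

```python
ALLOWED_IMAGE_SIZES = ("1K", "2K", "4K")


def validate_image_size(value: str) -> str:
    """Return the value unchanged if it is an accepted size, else raise ValueError."""
    if value in ALLOWED_IMAGE_SIZES:
        return value
    hint = ""
    # Offer a correction when only the letter case is wrong (e.g. "2k").
    if value.upper() in ALLOWED_IMAGE_SIZES:
        hint = f" Did you mean {value.upper()!r}?"
    raise ValueError(
        f"Unsupported image size {value!r}; use one of {ALLOWED_IMAGE_SIZES}.{hint}"
    )


resolution = validate_image_size("2K")
print(resolution)
```

The validated string can then be passed as the image size in the request configuration.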

Python

from google import genai

from google.genai import types

prompt = "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

aspect_ratio = "1:1" # "1:1","2:3","3:2","3:4","4:3","4:5","5:4","9:16","16:9","21:9"

resolution = "1K" # "1K", "2K", "4K"

client = genai.Client()

response = client.models.generate_content(
    model="gemini-3-pro-image-preview",
    contents=prompt,
    config=types.GenerateContentConfig(
        response_modalities=['TEXT', 'IMAGE'],
        image_config=types.ImageConfig(
            aspect_ratio=aspect_ratio,
            image_size=resolution
        ),
    )
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif image := part.as_image():
        image.save("butterfly.png")

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

'Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English.';

const aspectRatio = '1:1';

const resolution = '1K';

const response = await ai.models.generateContent({

model: 'gemini-3-pro-image-preview',

contents: prompt,

config: {

responseModalities: ['TEXT', 'IMAGE'],

imageConfig: {

aspectRatio: aspectRatio,

imageSize: resolution,

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("image.png", buffer);

console.log("Image saved as image.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

	// Request text and image output at 1K resolution.
	config := &genai.GenerateContentConfig{
		ResponseModalities: []string{"TEXT", "IMAGE"},
		ImageConfig: &genai.ImageConfig{
			AspectRatio: "1:1",
			ImageSize:   "1K",
		},
	}

	prompt := "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."

	result, err := client.Models.GenerateContent(ctx, "gemini-3-pro-image-preview", genai.Text(prompt), config)
	if err != nil {
		log.Fatal(err)
	}

	// Print text parts and write the image part to disk.
	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Print(part.Text)
		} else if part.InlineData != nil {
			if err := os.WriteFile("butterfly.png", part.InlineData.Data, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}

}

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.GoogleSearch;

import com.google.genai.types.ImageConfig;

import com.google.genai.types.Part;

import com.google.genai.types.Tool;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class HiRes {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("16:9")

.imageSize("4K")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview", """

Da Vinci style anatomical sketch of a dissected Monarch butterfly.

Detailed drawings of the head, wings, and legs on textured

parchment with notes in English.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("butterfly.png"), blob.data().get());

}

}

}

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{"parts": [{"text": "Da Vinci style anatomical sketch of a dissected Monarch butterfly. Detailed drawings of the head, wings, and legs on textured parchment with notes in English."}]}],

"tools": [{"google_search": {}}],

"generationConfig": {

"responseModalities": ["TEXT", "IMAGE"],

"imageConfig": {"aspectRatio": "1:1", "imageSize": "1K"}

}

}'

The following is an example image generated from this prompt:

Thinking process

The Gemini 3 Pro Image Preview model is a thinking model and uses a reasoning process ("Thinking") for complex prompts. This feature is enabled by default and cannot be disabled in the API. To learn more about the thinking process, see the Gemini Thinking guide.

The model generates up to two interim images to test composition and logic. The last image within Thinking is also the final rendered image.

You can inspect the thoughts that led to the final image.

Python

for part in response.parts:
    if part.thought:
        if part.text:
            print(part.text)
        elif image := part.as_image():
            image.show()

JavaScript

for (const part of response.candidates[0].content.parts) {

if (part.thought) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, 'base64');

fs.writeFileSync('image.png', buffer);

console.log('Image saved as image.png');

}

}

}
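When a response mixes interim (thought) images with final ones, writing every image to the same filename overwrites earlier files. The sketch below, which assumes the parts have been parsed into plain dicts with snake_case keys (`inline_data`, `data`, `thought`) and that the images are PNGs, keeps the two groups apart. `collect_images` is a hypothetical helper name.

```python
import base64


def collect_images(parts: list[dict]) -> dict[str, bytes]:
    """Map distinct filenames to decoded image bytes, separating thoughts from final images."""
    images: dict[str, bytes] = {}
    counters = {"thought": 0, "final": 0}
    for part in parts:
        blob = part.get("inline_data")
        if blob is None:
            continue  # Text parts carry no image data.
        kind = "thought" if part.get("thought") else "final"
        name = f"{kind}_{counters[kind]}.png"  # Assumption: PNG output.
        counters[kind] += 1
        images[name] = base64.b64decode(blob["data"])
    return images


parts = [
    {"inline_data": {"data": "YQ==", "mime_type": "image/png"}, "thought": True},
    {"text": "Here is the result."},
    {"inline_data": {"data": "Yg==", "mime_type": "image/png"}},
]
images = collect_images(parts)
print(sorted(images))
```

Each entry can then be written to disk under its own name.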

Thought signatures

Thought signatures are encrypted representations of the model's internal thought process and are used to preserve reasoning context across multi-turn interactions. All responses include a thought_signature field. As a general rule, if you receive a thought signature in a model response, pass it back exactly as received when you send the conversation history in the next turn. Failing to circulate thought signatures may cause the response to fail. For more on signatures in general, see the thought signature documentation.

Here is how thought signatures work:

- All inline_data parts with an image mimetype that are part of the response should have a signature.
- If there are text parts at the beginning (before any image) right after the thoughts, the first text part should also have a signature.
- If inline_data parts with an image mimetype are part of the thoughts, they won't have signatures.

The following code shows an example of where thought signatures are included:

[

{

"inline_data": {

"data": "<base64_image_data_0>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_1>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_2>",

"mime_type": "image/png"

},

"thought": true // Thoughts don't have signatures

},

{

"text": "Here is a step-by-step guide to baking macarons, presented in three separate images.\n\n### Step 1: Piping the Batter\n\nThe first step after making your macaron batter is to pipe it onto a baking sheet. This requires a steady hand to create uniform circles.\n\n",

"thought_signature": "<Signature_A>" // The first non-thought part always has a signature

},

{

"inline_data": {

"data": "<base64_image_data_3>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_B>" // All image parts have a signature

},

{

"text": "\n\n### Step 2: Baking and Developing Feet\n\nOnce piped, the macarons are baked in the oven. A key sign of a successful bake is the development of \"feet\"—the ruffled edge at the base of each macaron shell.\n\n"

// Follow-up text parts don't have signatures

},

{

"inline_data": {

"data": "<base64_image_data_4>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_C>" // All image parts have a signature

},

{

"text": "\n\n### Step 3: Assembling the Macaron\n\nThe final step is to pair the cooled macaron shells by size and sandwich them together with your desired filling, creating the classic macaron dessert.\n\n"

},

{

"inline_data": {

"data": "<base64_image_data_5>",

"mime_type": "image/png"

},

"thought_signature": "<Signature_D>" // All image parts have a signature

}

]
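The placement rules can be turned into a quick sanity check before a conversation history is sent back. The sketch below is one reading of those rules (the first non-thought part and every non-thought image part carry a signature), applied to parts parsed into plain dicts; the function names are hypothetical.

```python
def expected_signature_indices(parts: list[dict]) -> list[int]:
    """Indices of parts that should carry a thought_signature under the rules above."""
    expected = []
    seen_non_thought = False
    for index, part in enumerate(parts):
        if part.get("thought"):
            continue  # Thought parts never carry signatures.
        is_image = "inline_data" in part
        if is_image or not seen_non_thought:
            expected.append(index)
        seen_non_thought = True
    return expected


def missing_signatures(parts: list[dict]) -> list[int]:
    """Indices where a signature is expected but absent, e.g. dropped while copying history."""
    return [i for i in expected_signature_indices(parts) if "thought_signature" not in parts[i]]


history = [
    {"inline_data": {"data": "...", "mime_type": "image/png"}, "thought": True},
    {"text": "Step 1", "thought_signature": "<Signature_A>"},
    {"inline_data": {"data": "...", "mime_type": "image/png"}, "thought_signature": "<Signature_B>"},
    {"text": "Step 2"},
    {"inline_data": {"data": "...", "mime_type": "image/png"}, "thought_signature": "<Signature_C>"},
]
print(missing_signatures(history))
```

An empty result suggests the history kept every signature it should have; a non-empty one points at parts that were likely rebuilt rather than passed back as received.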

Other image generation modes

Gemini supports other image interaction modes based on prompt structure and context, including:

- Text to image(s) and text (interleaved): outputs images with related text.
  - Example prompt: "Generate an illustrated recipe for a paella."
- Image(s) and text to image(s) and text (interleaved): uses input images and text to create new related images and text.
  - Example prompt: (with an image of a furnished room) "What other color sofas would work in my space? Can you update the image?"

Generate images in batch

If you need to generate many images, you can use the Batch API. In exchange for a turnaround time of up to 24 hours, you get higher rate limits.

See the Batch API image generation documentation and the cookbook for Batch API image examples and code.
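As an illustration of preparing many image requests at once, the sketch below builds one JSON line per prompt. The `key`/`request` wrapper and the `request-N` naming are assumptions about the batch input format that this page does not confirm, and `build_batch_lines` is a hypothetical helper; verify the exact file format in the Batch API documentation before relying on it.

```python
import json


def build_batch_lines(prompts: list[str], aspect_ratio: str = "1:1") -> list[str]:
    """Return one JSON-encoded request per prompt, suitable for writing to a JSONL file."""
    lines = []
    for index, prompt in enumerate(prompts):
        # The request body mirrors the REST examples in this guide.
        request = {
            "contents": [{"parts": [{"text": prompt}]}],
            "generationConfig": {
                "responseModalities": ["TEXT", "IMAGE"],
                "imageConfig": {"aspectRatio": aspect_ratio},
            },
        }
        # Assumption: each line pairs a user-defined key with the request.
        lines.append(json.dumps({"key": f"request-{index}", "request": request}))
    return lines


batch_lines = build_batch_lines(["A red panda sticker", "A matte black coffee mug"])
print(len(batch_lines))
```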

Prompting guide and strategies

Mastering image generation starts with one fundamental principle:

Describe the scene, don't just list keywords. The model's core strength is its deep language understanding. A narrative, descriptive paragraph will almost always produce a better, more coherent image than a list of disconnected words.

Prompts for generating images

The following strategies will help you create effective prompts to generate exactly the images you're looking for.

1. Photorealistic scenes

For realistic images, use photography terms. Mention camera angles, lens types, lighting, and fine details to guide the model toward a photorealistic result.

Template

A photorealistic [shot type] of [subject], [action or expression], set in

[environment]. The scene is illuminated by [lighting description], creating

a [mood] atmosphere. Captured with a [camera/lens details], emphasizing

[key textures and details]. The image should be in a [aspect ratio] format.

Prompt

A photorealistic close-up portrait of an elderly Japanese ceramicist with

deep, sun-etched wrinkles and a warm, knowing smile. He is carefully

inspecting a freshly glazed tea bowl. The setting is his rustic,

sun-drenched workshop. The scene is illuminated by soft, golden hour light

streaming through a window, highlighting the fine texture of the clay.

Captured with an 85mm portrait lens, resulting in a soft, blurred background

(bokeh). The overall mood is serene and masterful. Vertical portrait

orientation.
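If you build many prompts from the photorealistic template, filling it programmatically helps ensure that no slot is left empty. This is a minimal sketch: the placeholder names (`shot_type`, `subject`, and so on) are shortened, hypothetical versions of the bracketed slots, and `build_photoreal_prompt` is not part of any SDK.

```python
from string import Formatter

PHOTOREAL_TEMPLATE = (
    "A photorealistic {shot_type} of {subject}, {action}, set in {environment}. "
    "The scene is illuminated by {lighting}, creating a {mood} atmosphere. "
    "Captured with a {camera}, emphasizing {details}. "
    "The image should be in a {aspect_ratio} format."
)

# Derive the required slot names directly from the template text.
REQUIRED_FIELDS = [name for _, name, _, _ in Formatter().parse(PHOTOREAL_TEMPLATE) if name]


def build_photoreal_prompt(**fields: str) -> str:
    """Fill every slot of the template, raising ValueError if any slot is missing."""
    missing = [name for name in REQUIRED_FIELDS if name not in fields]
    if missing:
        raise ValueError(f"Missing template fields: {', '.join(missing)}")
    return PHOTOREAL_TEMPLATE.format(**fields)


prompt = build_photoreal_prompt(
    shot_type="close-up portrait",
    subject="an elderly Japanese ceramicist",
    action="carefully inspecting a freshly glazed tea bowl",
    environment="his rustic, sun-drenched workshop",
    lighting="soft, golden hour light streaming through a window",
    mood="serene",
    camera="85mm portrait lens",
    details="the fine texture of the clay",
    aspect_ratio="vertical portrait",
)
print(prompt)
```

The resulting string can be passed as the request contents in any of the examples that follow.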

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.",

)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("photorealistic_example.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class PhotorealisticScene {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A photorealistic close-up portrait of an elderly Japanese ceramicist

with deep, sun-etched wrinkles and a warm, knowing smile. He is

carefully inspecting a freshly glazed tea bowl. The setting is his

rustic, sun-drenched workshop with pottery wheels and shelves of

clay pots in the background. The scene is illuminated by soft,

golden hour light streaming through a window, highlighting the

fine texture of the clay and the fabric of his apron. Captured

with an 85mm portrait lens, resulting in a soft, blurred

background (bokeh). The overall mood is serene and masterful.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("photorealistic_example.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("photorealistic_example.png", buffer);

console.log("Image saved as photorealistic_example.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "photorealistic_example.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A photorealistic close-up portrait of an elderly Japanese ceramicist with deep, sun-etched wrinkles and a warm, knowing smile. He is carefully inspecting a freshly glazed tea bowl. The setting is his rustic, sun-drenched workshop with pottery wheels and shelves of clay pots in the background. The scene is illuminated by soft, golden hour light streaming through a window, highlighting the fine texture of the clay and the fabric of his apron. Captured with an 85mm portrait lens, resulting in a soft, blurred background (bokeh). The overall mood is serene and masterful."}

]

}]

}'

2. Stylized illustrations and stickers

To create stickers, icons, or assets, be explicit about the style and request a transparent background.

Template

A [style] sticker of a [subject], featuring [key characteristics] and a

[color palette]. The design should have [line style] and [shading style].

The background must be transparent.

Prompt

A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's

munching on a green bamboo leaf. The design features bold, clean outlines,

simple cel-shading, and a vibrant color palette. The background must be white.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.",

)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("red_panda_sticker.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class StylizedIllustration {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A kawaii-style sticker of a happy red panda wearing a tiny bamboo

hat. It's munching on a green bamboo leaf. The design features

bold, clean outlines, simple cel-shading, and a vibrant color

palette. The background must be white.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("red_panda_sticker.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white.";

const response = await ai.models.generateContent({

model: "gemini-2.5-flash-image",

contents: prompt,

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("red_panda_sticker.png", buffer);

console.log("Image saved as red_panda_sticker.png");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-2.5-flash-image",

genai.Text("A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It's munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."),

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "red_panda_sticker.png"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "A kawaii-style sticker of a happy red panda wearing a tiny bamboo hat. It is munching on a green bamboo leaf. The design features bold, clean outlines, simple cel-shading, and a vibrant color palette. The background must be white."}

]

}]

}'

3. Accurate text in images

Gemini excels at rendering text. Be clear about the text, the font style (described in words), and the overall design. Use Gemini 3 Pro Image Preview for professional asset production.

Template

Create a [image type] for [brand/concept] with the text "[text to render]"

in a [font style]. The design should be [style description], with a

[color scheme].

Prompt

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-3-pro-image-preview",

contents="Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.",

config=types.GenerateContentConfig(

image_config=types.ImageConfig(

aspect_ratio="1:1",

)

)

)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("logo_example.jpg")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import com.google.genai.types.ImageConfig;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class AccurateTextInImages {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.imageConfig(ImageConfig.builder()

.aspectRatio("1:1")

.build())

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-3-pro-image-preview",

"""

Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("logo_example.jpg"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";

import * as fs from "node:fs";

async function main() {

const ai = new GoogleGenAI({});

const prompt =

"Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way.";

const response = await ai.models.generateContent({

model: "gemini-3-pro-image-preview",

contents: prompt,

config: {

imageConfig: {

aspectRatio: "1:1",

},

},

});

for (const part of response.candidates[0].content.parts) {

if (part.text) {

console.log(part.text);

} else if (part.inlineData) {

const imageData = part.inlineData.data;

const buffer = Buffer.from(imageData, "base64");

fs.writeFileSync("logo_example.jpg", buffer);

console.log("Image saved as logo_example.jpg");

}

}

}

main();

Go

package main

import (

"context"

"fmt"

"log"

"os"

"google.golang.org/genai"

)

func main() {

ctx := context.Background()

client, err := genai.NewClient(ctx, nil)

if err != nil {

log.Fatal(err)

}

result, _ := client.Models.GenerateContent(

ctx,

"gemini-3-pro-image-preview",

genai.Text("Create a modern, minimalist logo for a coffee shop called 'The Daily Grind'. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."),

&genai.GenerateContentConfig{

ImageConfig: &genai.ImageConfig{

AspectRatio: "1:1",

},

},

)

for _, part := range result.Candidates[0].Content.Parts {

if part.Text != "" {

fmt.Println(part.Text)

} else if part.InlineData != nil {

imageBytes := part.InlineData.Data

outputFilename := "logo_example.jpg"

_ = os.WriteFile(outputFilename, imageBytes, 0644)

}

}

}

REST

curl -s -X POST \

"https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-image-preview:generateContent" \

-H "x-goog-api-key: $GEMINI_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"contents": [{

"parts": [

{"text": "Create a modern, minimalist logo for a coffee shop called The Daily Grind. The text should be in a clean, bold, sans-serif font. The color scheme is black and white. Put the logo in a circle. Use a coffee bean in a clever way."}

]

}],

"generationConfig": {

"imageConfig": {

"aspectRatio": "1:1"

}

}

}'

4. Product mockups and commercial photography

Ideal for creating clean, professional product shots for e-commerce, advertising, or branding.

Template

A high-resolution, studio-lit product photograph of a [product description]

on a [background surface/description]. The lighting is a [lighting setup,

e.g., three-point softbox setup] to [lighting purpose]. The camera angle is

a [angle type] to showcase [specific feature]. Ultra-realistic, with sharp

focus on [key detail]. [Aspect ratio].

Prompt

A high-resolution, studio-lit product photograph of a minimalist ceramic

coffee mug in matte black, presented on a polished concrete surface. The

lighting is a three-point softbox setup designed to create soft, diffused

highlights and eliminate harsh shadows. The camera angle is a slightly

elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with

sharp focus on the steam rising from the coffee. Square image.

Python

from google import genai

from google.genai import types

client = genai.Client()

response = client.models.generate_content(

model="gemini-2.5-flash-image",

contents="A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.",

)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("product_mockup.png")

Java

import com.google.genai.Client;

import com.google.genai.types.GenerateContentConfig;

import com.google.genai.types.GenerateContentResponse;

import com.google.genai.types.Part;

import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Paths;

public class ProductMockup {

public static void main(String[] args) throws IOException {

try (Client client = new Client()) {

GenerateContentConfig config = GenerateContentConfig.builder()

.responseModalities("TEXT", "IMAGE")

.build();

GenerateContentResponse response = client.models.generateContent(

"gemini-2.5-flash-image",

"""

A high-resolution, studio-lit product photograph of a minimalist

ceramic coffee mug in matte black, presented on a polished

concrete surface. The lighting is a three-point softbox setup

designed to create soft, diffused highlights and eliminate harsh

shadows. The camera angle is a slightly elevated 45-degree shot

to showcase its clean lines. Ultra-realistic, with sharp focus

on the steam rising from the coffee. Square image.

""",

config);

for (Part part : response.parts()) {

if (part.text().isPresent()) {

System.out.println(part.text().get());

} else if (part.inlineData().isPresent()) {

var blob = part.inlineData().get();

if (blob.data().isPresent()) {

Files.write(Paths.get("product_mockup.png"), blob.data().get());

}

}

}

}

}

}

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image.";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("product_mockup.png", buffer);
      console.log("Image saved as product_mockup.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	// The last argument is the optional *genai.GenerateContentConfig; nil uses the defaults.
	result, err := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",
		genai.Text("A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."),
		nil,
	)
	if err != nil {
		log.Fatal(err)
	}

	for _, part := range result.Candidates[0].Content.Parts {
		if part.Text != "" {
			fmt.Println(part.Text)
		} else if part.InlineData != nil {
			// Write the generated image bytes to disk and stop on failure.
			imageBytes := part.InlineData.Data
			outputFilename := "product_mockup.png"
			if err := os.WriteFile(outputFilename, imageBytes, 0644); err != nil {
				log.Fatal(err)
			}
		}
	}
}

REST

curl -s -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-flash-image:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "contents": [{
      "parts": [
        {"text": "A high-resolution, studio-lit product photograph of a minimalist ceramic coffee mug in matte black, presented on a polished concrete surface. The lighting is a three-point softbox setup designed to create soft, diffused highlights and eliminate harsh shadows. The camera angle is a slightly elevated 45-degree shot to showcase its clean lines. Ultra-realistic, with sharp focus on the steam rising from the coffee. Square image."}
      ]
    }]
  }'
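
The REST call returns JSON rather than an image file, so the image still has to be decoded. The following is a minimal Python sketch of that step, assuming the response mirrors the `candidates[0].content.parts` structure and the base64-encoded `inlineData.data` field that the JavaScript example reads. The helper name `save_inline_images`, the file name `response.json` (for example, the curl output redirected to a file), and the `.png` extension are assumptions made for illustration, not part of the API.

```python
import base64
import json
from pathlib import Path


def save_inline_images(response, stem="product_mockup"):
    """Write each inlineData part of the first candidate to disk; return the paths.

    Hypothetical helper: assumes the JSON layout used by the SDK examples and
    assumes the image bytes are PNG.
    """
    saved = []
    parts = response["candidates"][0]["content"]["parts"]
    for part in parts:
        if "text" in part:
            print(part["text"])
        elif "inlineData" in part:
            # Number any additional images so earlier files are not overwritten.
            suffix = "" if not saved else f"_{len(saved)}"
            path = Path(f"{stem}{suffix}.png")
            path.write_bytes(base64.b64decode(part["inlineData"]["data"]))
            saved.append(path)
    return saved


if __name__ == "__main__":
    source = Path("response.json")  # assumed location of the saved curl output
    if source.exists():
        print(save_inline_images(json.loads(source.read_text())))
```

Redirecting the curl output to a file (for instance by appending `> response.json` to the command) and then running the script would write the image next to it.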

5. Minimalist and negative space design

Ideal for creating backgrounds for websites, presentations, or marketing materials on which text will be overlaid.

Template

A minimalist composition featuring a single [subject] positioned in the
[bottom-right/top-left/etc.] of the frame. The background is a vast, empty
[color] canvas, creating significant negative space. Soft, subtle lighting.
[Aspect ratio].
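
When many such backgrounds are needed, the bracketed slots in the template can be filled programmatically. The following is a minimal sketch under that assumption; `build_minimalist_prompt` and its parameter names are hypothetical and not part of the Gemini SDK.

```python
# The template from this section, with the bracketed slots as format fields.
TEMPLATE = (
    "A minimalist composition featuring a single {subject} positioned in the "
    "{position} of the frame. The background is a vast, empty {color} canvas, "
    "creating significant negative space. Soft, subtle lighting. {aspect_ratio}."
)


def build_minimalist_prompt(subject, position, color, aspect_ratio="Square image"):
    """Fill the template's slots (hypothetical helper, for illustration only)."""
    fields = {
        "subject": subject,
        "position": position,
        "color": color,
        "aspect_ratio": aspect_ratio,
    }
    for name, value in fields.items():
        # Reject empty slots so the prompt never contains a dangling phrase.
        if not value or not value.strip():
            raise ValueError(f"{name} must not be empty")
    return TEMPLATE.format(**{k: v.strip() for k, v in fields.items()})


prompt = build_minimalist_prompt("delicate red maple leaf", "bottom-right", "off-white")
print(prompt)
```

The resulting string can be passed as the `contents` argument in the same way as the hand-written prompt.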

Prompt

A minimalist composition featuring a single, delicate red maple leaf
positioned in the bottom-right of the frame. The background is a vast, empty
off-white canvas, creating significant negative space for text. Soft,
diffused lighting from the top left. Square image.

Python

from google import genai
from google.genai import types

client = genai.Client()

response = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents="A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.",
)

for part in response.parts:
    if part.text is not None:
        print(part.text)
    elif part.inline_data is not None:
        image = part.as_image()
        image.save("minimalist_design.png")

Java

import com.google.genai.Client;
import com.google.genai.types.GenerateContentConfig;
import com.google.genai.types.GenerateContentResponse;
import com.google.genai.types.Part;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class MinimalistDesign {
  public static void main(String[] args) throws IOException {

    try (Client client = new Client()) {
      GenerateContentConfig config = GenerateContentConfig.builder()
          .responseModalities("TEXT", "IMAGE")
          .build();

      GenerateContentResponse response = client.models.generateContent(
          "gemini-2.5-flash-image",
          """
          A minimalist composition featuring a single, delicate red maple
          leaf positioned in the bottom-right of the frame. The background
          is a vast, empty off-white canvas, creating significant negative
          space for text. Soft, diffused lighting from the top left.
          Square image.
          """,
          config);

      for (Part part : response.parts()) {
        if (part.text().isPresent()) {
          System.out.println(part.text().get());
        } else if (part.inlineData().isPresent()) {
          var blob = part.inlineData().get();
          if (blob.data().isPresent()) {
            Files.write(Paths.get("minimalist_design.png"), blob.data().get());
          }
        }
      }
    }
  }
}

JavaScript

import { GoogleGenAI } from "@google/genai";
import * as fs from "node:fs";

async function main() {
  const ai = new GoogleGenAI({});

  const prompt =
    "A minimalist composition featuring a single, delicate red maple leaf positioned in the bottom-right of the frame. The background is a vast, empty off-white canvas, creating significant negative space for text. Soft, diffused lighting from the top left. Square image.";

  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash-image",
    contents: prompt,
  });

  for (const part of response.candidates[0].content.parts) {
    if (part.text) {
      console.log(part.text);
    } else if (part.inlineData) {
      const imageData = part.inlineData.data;
      const buffer = Buffer.from(imageData, "base64");
      fs.writeFileSync("minimalist_design.png", buffer);
      console.log("Image saved as minimalist_design.png");
    }
  }
}

main();

Go

package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"google.golang.org/genai"
)

func main() {
	ctx := context.Background()
	client, err := genai.NewClient(ctx, nil)
	if err != nil {
		log.Fatal(err)
	}

	result, _ := client.Models.GenerateContent(
		ctx,
		"gemini-2.5-flash-image",