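Gemini Robotics-ER 1.5 is a vision-language model (VLM) that brings Gemini's agentic capabilities to robotics. It is designed for advanced reasoning in the physical world, allowing robots to interpret complex visual data, perform spatial reasoning, and plan actions from natural language commands.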

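Key features and benefits:

- Enhanced autonomy: Robots can reason, adapt, and respond to changes in open-ended environments.

- Natural language interaction: Makes robots easier to use by letting you assign complex tasks in natural language.

- Task orchestration: Breaks natural language commands down into subtasks and integrates with existing robot controllers and behaviors to complete long-horizon tasks.

- Versatile capabilities: Locates and identifies objects, understands object relationships, plans grasps and trajectories, and interprets dynamic scenes.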

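This document describes what the model can do and walks through several examples that highlight its agentic capabilities.

If you want to get started right away, you can try the model in Google AI Studio.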

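Safety

While Gemini Robotics-ER 1.5 was built with safety in mind, it is your responsibility to maintain a safe environment around the robot. Generative AI models can make mistakes, and physical robots can cause damage. Safety is a priority, and making generative AI models safe when used with real-world robotics is an important area of our ongoing research. To learn more, visit the Google DeepMind robotics safety page.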

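Getting started: Find objects in a scene

The following example shows a common robotics use case: passing an image and a text prompt to the model with the generateContent method to get a list of identified objects and their corresponding 2D points. The model returns a point for each object it identifies in the image, with normalized 2D coordinates and a label.

You can use this output with a robotics API, or call a vision-language-action (VLA) model or any other third-party user-defined function to generate actions for the robot to perform.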

```python
from google import genai
from google.genai import types

PROMPT = """
Point to no more than 10 items in the image. The label returned
should be an identifying name for the object detected.
The answer should follow the json format: [{"point": <point>,
"label": <label1>}, ...]. The points are in [y, x] format
normalized to 0-1000.
"""

client = genai.Client()

# Load your image
with open("my-image.png", "rb") as f:
    image_bytes = f.read()

image_response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/png",
        ),
        PROMPT,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
        thinking_config=types.ThinkingConfig(thinking_budget=0),
    ),
)

print(image_response.text)
```

```bash
# First, ensure you have the image file locally.
# Encode the image to base64
IMAGE_BASE64=$(base64 -w 0 my-image.png)

curl -X POST \
  "https://generativelanguage.googleapis.com/v1beta/models/gemini-robotics-er-1.5-preview:generateContent" \
  -H "x-goog-api-key: $GEMINI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "contents": [
      {
        "parts": [
          {
            "inlineData": {
              "mimeType": "image/png",
              "data": "'"${IMAGE_BASE64}"'"
            }
          },
          {
            "text": "Point to no more than 10 items in the image. The label returned should be an identifying name for the object detected. The answer should follow the json format: [{\"point\": [y, x], \"label\": <label1>}, ...]. The points are in [y, x] format normalized to 0-1000."
          }
        ]
      }
    ],
    "generationConfig": {
      "temperature": 0.5,
      "thinkingConfig": {
        "thinkingBudget": 0
      }
    }
  }'
```

The output is a JSON array of objects, each containing a point (normalized [y, x] coordinates) and a label that identifies the object.

```json
[
  {"point": [376, 508], "label": "small banana"},
  {"point": [287, 609], "label": "larger banana"},
  {"point": [223, 303], "label": "pink starfruit"},
  {"point": [435, 172], "label": "paper bag"},
  {"point": [270, 786], "label": "green plastic bowl"},
  {"point": [488, 775], "label": "metal measuring cup"},
  {"point": [673, 580], "label": "dark blue bowl"},
  {"point": [471, 353], "label": "light blue bowl"},
  {"point": [492, 497], "label": "bread"},
  {"point": [525, 429], "label": "lime"}
]
```

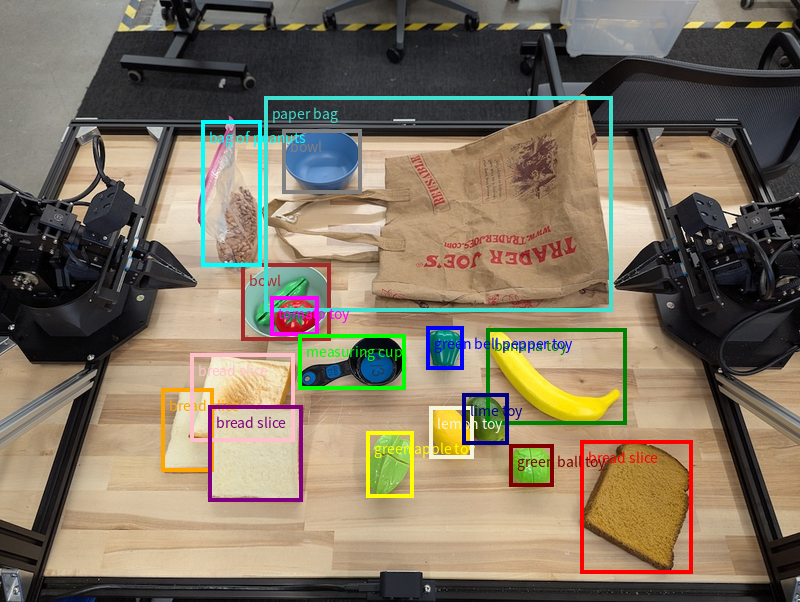

下图展示了如何显示这些点:
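To use these points in your own code, you typically parse the response text and convert the normalized [y, x] values (0-1000) into pixel coordinates for the image you sent. The following is a minimal sketch using only the standard library; `parse_json_response` and `to_pixels` are hypothetical helpers, and the fence-stripping step assumes the model may sometimes wrap its JSON in a Markdown code fence.

```python
import json

FENCE = "`" * 3  # a Markdown code fence marker


def parse_json_response(text):
    """Parse a JSON model response, tolerating an optional Markdown code fence."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line (for example, a fence followed by "json").
        cleaned = cleaned.split("\n", 1)[1] if "\n" in cleaned else ""
    if cleaned.endswith(FENCE):
        cleaned = cleaned[: -len(FENCE)]
    return json.loads(cleaned)


def to_pixels(point, width, height):
    """Convert a normalized [y, x] point (0-1000) to integer (x, y) pixels."""
    y_norm, x_norm = point
    return round(x_norm / 1000 * width), round(y_norm / 1000 * height)


# Example using one entry from the output above and an assumed 640x480 image.
response_text = '[{"point": [376, 508], "label": "small banana"}]'
for item in parse_json_response(response_text):
    print(item["label"], to_pixels(item["point"], width=640, height=480))
```

Note the axis order: the model returns [y, x], while most imaging libraries address pixels as (x, y).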

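How it works

Gemini Robotics-ER 1.5 lets robots use spatial understanding to work in context in the physical world. It takes image, video, or audio input together with a natural language prompt in order to:

- Understand objects and scene context: Identify objects and reason about their relationship to the scene, including their affordances.

- Understand task instructions: Interpret tasks given in natural language, such as "find the banana".

- Reason spatially and temporally: Understand sequences of actions and how objects interact with the scene over time.

- Provide structured output: Return coordinates (points or bounding boxes) that represent object locations.

This lets robots programmatically "see" and "understand" their surroundings.

Gemini Robotics-ER 1.5 is also agentic, which means it can break a complex task (such as "put the apple in the bowl") into subtasks to orchestrate long-horizon tasks:

- Subtask sequencing: Decompose the command into a logical sequence of steps.

- Function calling/code execution: Carry out steps by calling existing robot functions/tools or by executing generated code.

To learn more about function calling with Gemini, see the function calling page.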

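Using the thinking budget with Gemini Robotics-ER 1.5

Gemini Robotics-ER 1.5 has a flexible thinking budget that lets you trade off latency against accuracy. For spatial understanding tasks such as object detection, the model achieves high performance with a small thinking budget. For more complex reasoning tasks, such as counting and weight estimation, a larger thinking budget gives better results.

To learn more about thinking budgets, see the Thinking core capability page.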
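One way to switch between low-latency and higher-accuracy settings without duplicating request code is to make the thinking budget a parameter. The sketch below builds the same JSON request body as the REST example in the getting-started section, using only the standard library. `build_request_body` is a hypothetical helper, and the non-zero budget shown is an illustrative assumption, not a recommended value.

```python
import base64
import json


def build_request_body(prompt, image_bytes, mime_type="image/png",
                       thinking_budget=0, temperature=0.5):
    """Build a generateContent request body using the REST (camelCase) field names."""
    return {
        "contents": [{
            "parts": [
                {"inlineData": {
                    "mimeType": mime_type,
                    # REST expects the image bytes as a base64 string.
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                }},
                {"text": prompt},
            ]
        }],
        "generationConfig": {
            "temperature": temperature,
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


# Low latency for pointing; more thinking for a counting question (assumed value).
fast = build_request_body("Point to the banana.", b"\x89PNG...", thinking_budget=0)
careful = build_request_body("How many bananas are there?", b"\x89PNG...",
                             thinking_budget=1024)
print(json.dumps(careful["generationConfig"]))
```

The resulting dictionary can be serialized with json.dumps and sent as the body of the POST request shown in the REST example.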

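Robotics agentic capabilities

This section walks through the capabilities of Gemini Robotics-ER 1.5 and shows how to use the model for robotic perception, reasoning, and planning applications.

The examples in this section range from pointing to and finding objects in an image to planning trajectories and orchestrating long-horizon tasks. For simplicity, the code snippets are trimmed to show only the prompt and the call to the generate_content API. Complete runnable code and additional examples are available in the Robotics cookbook.

Pointing to objects

A common use case for vision-language models (VLMs) in robotics is pointing to and finding objects in images or video frames. The following example asks the model to find specific objects in an image and return their coordinates.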

```python
from google import genai
from google.genai import types

client = genai.Client()

# Load your image and set up your prompt
with open("path/to/image-with-objects.jpg", "rb") as f:
    image_bytes = f.read()

queries = [
    "bread",
    "starfruit",
    "banana",
]

# Literal braces are doubled ({{ }}) so the f-string does not treat them as placeholders.
prompt = f"""
Get all points matching the following objects: {', '.join(queries)}. The
label returned should be an identifying name for the object detected.
The answer should follow the json format:
[{{"point": <point>, "label": <label1>}}, ...]. The points are in
[y, x] format normalized to 0-1000.
"""

image_response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/jpeg",
        ),
        prompt,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
        thinking_config=types.ThinkingConfig(thinking_budget=0),
    ),
)

print(image_response.text)
```

As in the getting-started example, the output is JSON containing the coordinates and labels of the objects found.

```json
[
  {"point": [671, 317], "label": "bread"},
  {"point": [738, 307], "label": "bread"},
  {"point": [702, 237], "label": "bread"},
  {"point": [629, 307], "label": "bread"},
  {"point": [833, 800], "label": "bread"},
  {"point": [609, 663], "label": "banana"},
  {"point": [770, 483], "label": "starfruit"}
]
```
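Because the model can return several points with the same label (five bread points above), a common next step is to group points by label before choosing a target. A minimal standard-library sketch; `group_by_label` is a hypothetical helper, and the data is a subset of the output above.

```python
from collections import defaultdict


def group_by_label(detections):
    """Map each label to the list of [y, x] points returned for it."""
    groups = defaultdict(list)
    for det in detections:
        groups[det["label"]].append(det["point"])
    return dict(groups)


detections = [
    {"point": [671, 317], "label": "bread"},
    {"point": [738, 307], "label": "bread"},
    {"point": [609, 663], "label": "banana"},
]
for label, points in group_by_label(detections).items():
    print(f"{label}: {len(points)} instance(s)")
```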

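Use the following prompt to have the model interpret an abstract category such as "fruit", rather than specific objects, and find all instances of it in the image.

```python
# No placeholders are needed here, so a plain string (not an f-string) avoids brace escaping.
prompt = """
Get all points for fruit. The label returned should be an identifying
name for the object detected.
The answer should follow the json format:
[{"point": <point>, "label": <label1>}, ...]. The points are in
[y, x] format normalized to 0-1000.
"""
```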
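The prompts on this page mix f-strings (where literal braces must be doubled as {{ and }}) with plain strings to combine a variable object list with the JSON format instructions. A minimal sketch of one way to avoid brace-escaping mistakes: keep the format instructions in a plain string and join the pieces. `build_point_prompt` is a hypothetical helper, not part of the SDK.

```python
# Format instructions kept in a plain (non-f) string, so braces need no escaping.
JSON_FORMAT = (
    "The answer should follow the json format: "
    '[{"point": <point>, "label": <label1>}, ...]. '
    "The points are in [y, x] format normalized to 0-1000."
)


def build_point_prompt(targets):
    """Build a pointing prompt for a list of objects or a single category."""
    if isinstance(targets, str):
        targets = [targets]
    request = "Get all points matching the following objects: " + ", ".join(targets) + "."
    naming = "The label returned should be an identifying name for the object detected."
    return " ".join([request, naming, JSON_FORMAT])


print(build_point_prompt(["bread", "starfruit", "banana"]))
print(build_point_prompt("fruit"))
```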

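For other image processing techniques, see the image understanding page.

Tracking objects in video

Gemini Robotics-ER 1.5 can also analyze video frames to track objects over time. For a list of supported video formats, see Video input.

The following is a basic prompt for finding specific objects in each frame the model analyzes: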

```python
# Define the objects to find
queries = [
    "pen (on desk)",
    "pen (in robot hand)",
    "laptop (opened)",
    "laptop (closed)",
]

base_prompt = f"""
Point to the following objects in the provided image: {', '.join(queries)}.
The answer should follow the json format:
[{{"point": <point>, "label": <label1>}}, ...].
The points are in [y, x] format normalized to 0-1000.
If no objects are found, return an empty JSON list [].
"""
```

The output shows how the pen and laptop are tracked across the video frames.

For the complete runnable code, see the Robotics cookbook.
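When frames are sent with the prompt above, each response is a per-frame list of detections. A minimal sketch of stitching those lists into per-label tracks over time; it assumes you keep the frame index alongside each parsed response, and `build_tracks` and the sample data are hypothetical.

```python
from collections import defaultdict


def build_tracks(frame_results):
    """frame_results: list of (frame_index, detections) pairs.

    Returns {label: [(frame_index, [y, x]), ...]} in frame order.
    A frame where the model answered [] simply contributes nothing.
    """
    tracks = defaultdict(list)
    for frame_index, detections in sorted(frame_results, key=lambda fr: fr[0]):
        for det in detections:
            tracks[det["label"]].append((frame_index, det["point"]))
    return dict(tracks)


frames = [
    (0, [{"point": [520, 300], "label": "pen (on desk)"}]),
    (1, []),
    (2, [{"point": [410, 350], "label": "pen (in robot hand)"}]),
]
for label, track in build_tracks(frames).items():
    print(label, track)
```

Using descriptive, state-bearing labels such as "pen (in robot hand)" means a change of label between frames also signals a change of state.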

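Object detection and bounding boxes

Beyond single points, the model can also return 2D bounding boxes, each giving a rectangular region that encloses an object.

This example requests 2D bounding boxes for identifiable objects on a table. The model is instructed to limit the output to 25 objects and to give multiple instances unique names.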

```python
from google import genai
from google.genai import types

client = genai.Client()

# Load your image and set up your prompt
with open("path/to/image-with-objects.jpg", "rb") as f:
    image_bytes = f.read()

prompt = """
Return bounding boxes as a JSON array with labels. Never return masks
or code fencing. Limit to 25 objects. Include as many objects as you
can identify on the table.
If an object is present multiple times, name them according to their
unique characteristic (colors, size, position, unique characteristics, etc..).
The format should be as follows: [{"box_2d": [ymin, xmin, ymax, xmax],
"label": <label for the object>}] normalized to 0-1000. The values in
box_2d must only be integers
"""

image_response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/jpeg",
        ),
        prompt,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
        thinking_config=types.ThinkingConfig(thinking_budget=0),
    ),
)

print(image_response.text)
```

以下内容显示了模型返回的方框。

如需查看完整的可运行代码,请参阅 Robotics cookbook。 图片理解页面还提供了分割和对象检测等视觉任务的其他示例。

如需查看更多边界框示例,请参阅图片理解页面。

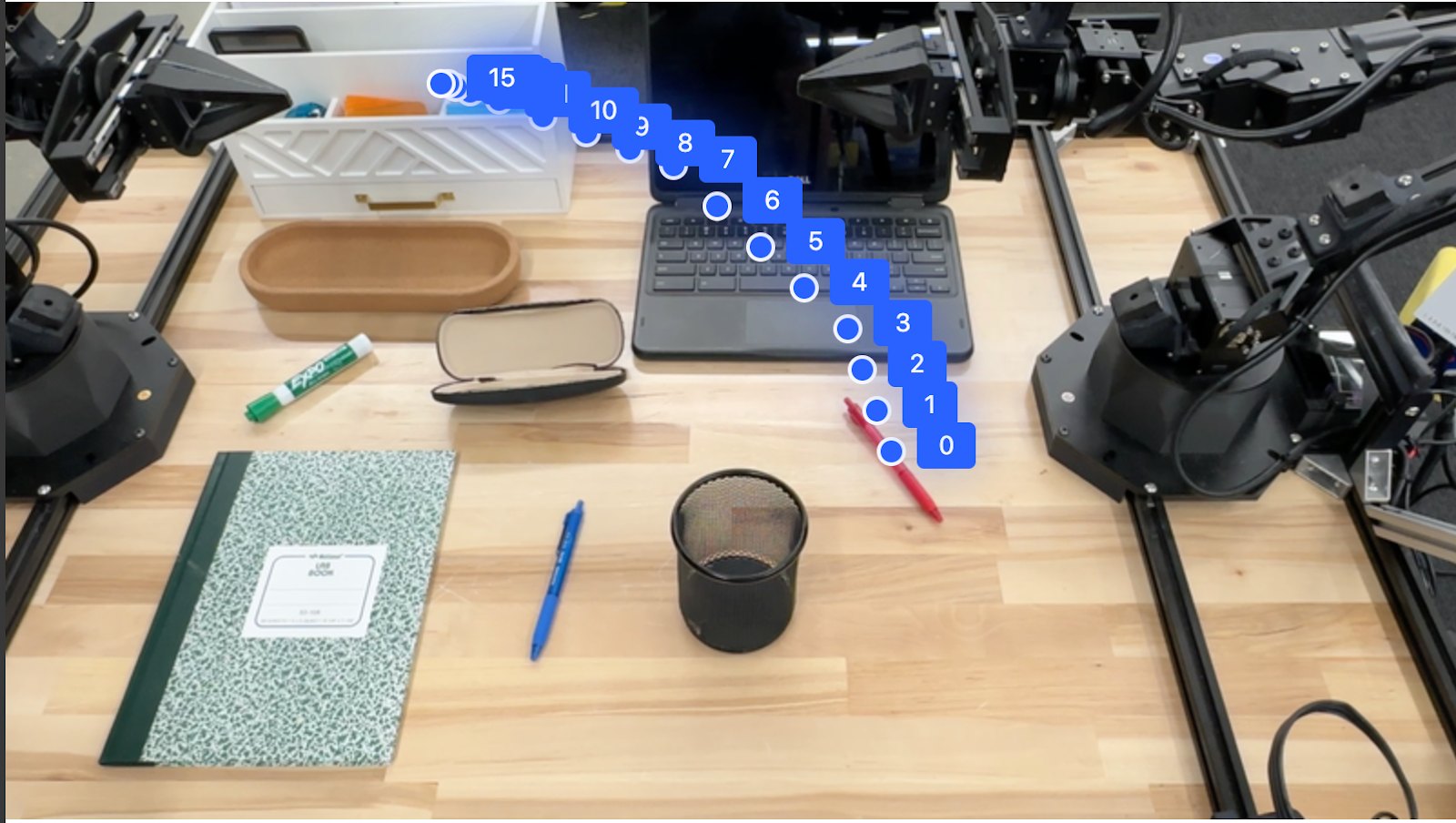
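Bounding boxes use the order [ymin, xmin, ymax, xmax], normalized to 0-1000, which differs from the (left, top, right, bottom) pixel order that many imaging libraries expect. A minimal conversion sketch; `box_to_pixels` is a hypothetical helper and the box values are made up for illustration.

```python
def box_to_pixels(box_2d, width, height):
    """Convert [ymin, xmin, ymax, xmax] (0-1000) to (left, top, right, bottom) pixels."""
    ymin, xmin, ymax, xmax = box_2d
    return (
        round(xmin / 1000 * width),
        round(ymin / 1000 * height),
        round(xmax / 1000 * width),
        round(ymax / 1000 * height),
    )


detection = {"box_2d": [100, 200, 500, 800], "label": "example"}  # hypothetical values
print(box_to_pixels(detection["box_2d"], width=1000, height=500))
```

The returned tuple is in the (left, upper, right, lower) order accepted by Pillow's Image.crop, so it can be passed to it directly.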

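Trajectories

Gemini Robotics-ER 1.5 can generate sequences of points that define a trajectory, which is useful for guiding robot motion.

This example requests a trajectory for moving a red pen to an organizer, including the starting point and a series of intermediate points.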

```python
from google import genai
from google.genai import types

client = genai.Client()

# Load your image and set up your prompt
with open("path/to/image-with-objects.jpg", "rb") as f:
    image_bytes = f.read()

prompt = """
Place a point on the red pen, then 15 points for the trajectory of
moving the red pen to the top of the organizer on the left.
The points should be labeled by order of the trajectory, from '0'
(start point at left hand) to <n> (final point)
The answer should follow the json format:
[{"point": <point>, "label": <label1>}, ...].
The points are in [y, x] format normalized to 0-1000.
"""

image_response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/jpeg",
        ),
        prompt,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
    ),
)

print(image_response.text)
```

The response is a set of coordinates describing the trajectory the red pen should follow to complete the task of moving it to the top of the organizer:

```json
[
  {"point": [550, 610], "label": "0"},
  {"point": [500, 600], "label": "1"},
  {"point": [450, 590], "label": "2"},
  {"point": [400, 580], "label": "3"},
  {"point": [350, 550], "label": "4"},
  {"point": [300, 520], "label": "5"},
  {"point": [250, 490], "label": "6"},
  {"point": [200, 460], "label": "7"},
  {"point": [180, 430], "label": "8"},
  {"point": [160, 400], "label": "9"},
  {"point": [140, 370], "label": "10"},
  {"point": [120, 340], "label": "11"},
  {"point": [110, 320], "label": "12"},
  {"point": [105, 310], "label": "13"},
  {"point": [100, 305], "label": "14"},
  {"point": [100, 300], "label": "15"}
]
```
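Trajectory points are labeled with their order as strings ("0", "1", ...), so sorting them as text would place "10" before "2". A minimal sketch that orders the waypoints numerically and computes the path length in normalized units; `order_waypoints` and `path_length` are hypothetical helpers, and the sample is a shuffled subset of the output above.

```python
import math


def order_waypoints(points):
    """Sort trajectory points by their numeric label and return the [y, x] lists."""
    return [p["point"] for p in sorted(points, key=lambda p: int(p["label"]))]


def path_length(waypoints):
    """Sum of straight-line distances between consecutive [y, x] waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


trajectory = [
    {"point": [450, 590], "label": "2"},
    {"point": [550, 610], "label": "0"},
    {"point": [500, 600], "label": "1"},
]
waypoints = order_waypoints(trajectory)
print(waypoints[0], "->", waypoints[-1], f"length={path_length(waypoints):.1f}")
```

Before sending waypoints to a motion controller you would still need to map them into the robot's own coordinate frame, as the orchestration example below does with its origin point.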

编排

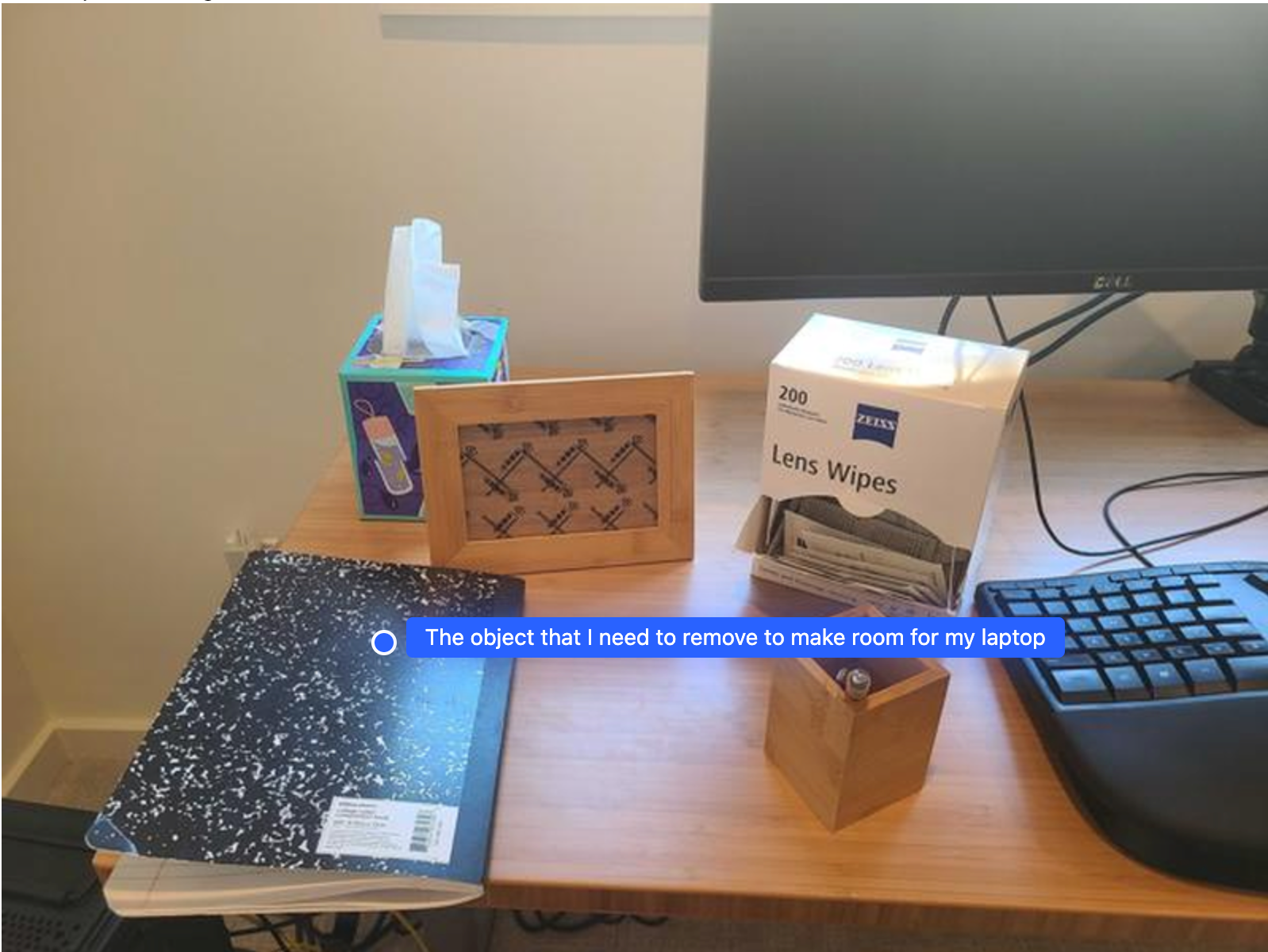

Gemini Robotics-ER 1.5 可以执行更高级别的空间推理,根据上下文理解推断行动或确定最佳位置。

为笔记本电脑腾出空间

此示例展示了 Gemini Robotics-ER 如何对空间进行推理。该提示要求模型确定需要移动哪个对象才能为另一项物品腾出空间。

```python
from google import genai
from google.genai import types

client = genai.Client()

# Load your image and set up your prompt
with open("path/to/image-with-objects.jpg", "rb") as f:
    image_bytes = f.read()

prompt = """
Point to the object that I need to remove to make room for my laptop
The answer should follow the json format: [{"point": <point>,
"label": <label1>}, ...]. The points are in [y, x] format normalized to 0-1000.
"""

image_response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/jpeg",
        ),
        prompt,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
        thinking_config=types.ThinkingConfig(thinking_budget=0),
    ),
)

print(image_response.text)
```

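The response contains the 2D coordinates of the object that answers the user's question: in this case, the object that should be moved to make room for the laptop.

```json
[
  {"point": [672, 301], "label": "The object that I need to remove to make room for my laptop"}
]
```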

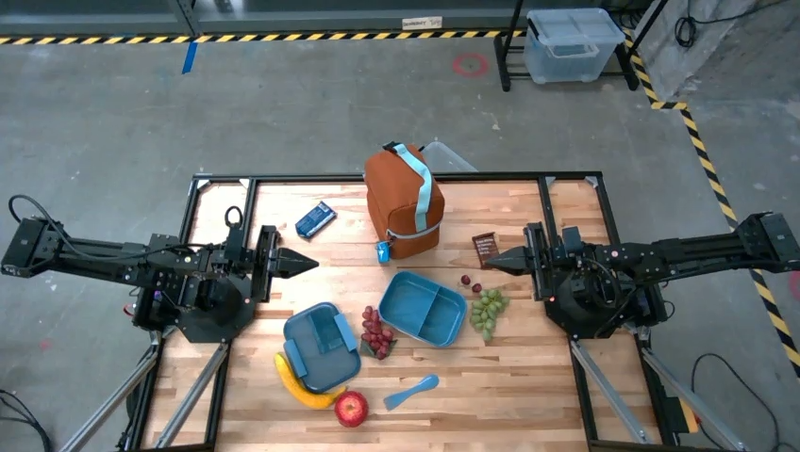

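Packing a lunch

The model can also provide instructions for multi-step tasks and point to the relevant objects for each step. This example shows how the model plans a series of steps to pack a lunch bag.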

```python
from google import genai
from google.genai import types

client = genai.Client()

# Load your image and set up your prompt
with open("path/to/image-of-lunch.jpg", "rb") as f:
    image_bytes = f.read()

prompt = """
Explain how to pack the lunch box and lunch bag. Point to each
object that you refer to. Each point should be in the format:
[{"point": [y, x], "label": <label1>}], where the coordinates are
normalized between 0-1000.
"""

image_response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/jpeg",
        ),
        prompt,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
        thinking_config=types.ThinkingConfig(thinking_budget=0),
    ),
)

print(image_response.text)
```

The response to this prompt is a set of step-by-step instructions for packing the lunch bag, based on the input image.

Model output:

Based on the image, here is a plan to pack the lunch box and lunch bag:

1. **Pack the fruit into the lunch box.** Place the [apple](apple), [banana](banana), [red grapes](red grapes), and [green grapes](green grapes) into the [blue lunch box](blue lunch box).

2. **Add the spoon to the lunch box.** Put the [blue spoon](blue spoon) inside the lunch box as well.

3. **Close the lunch box.** Secure the lid on the [blue lunch box](blue lunch box).

4. **Place the lunch box inside the lunch bag.** Put the closed [blue lunch box](blue lunch box) into the [brown lunch bag](brown lunch bag).

5. **Pack the remaining items into the lunch bag.** Place the [blue snack bar](blue snack bar) and the [brown snack bar](brown snack bar) into the [brown lunch bag](brown lunch bag).

Here is the list of objects and their locations:

* [{"point": [899, 440], "label": "apple"}]

* [{"point": [814, 363], "label": "banana"}]

* [{"point": [727, 470], "label": "red grapes"}]

* [{"point": [675, 608], "label": "green grapes"}]

* [{"point": [706, 529], "label": "blue lunch box"}]

* [{"point": [864, 517], "label": "blue spoon"}]

* [{"point": [499, 401], "label": "blue snack bar"}]

* [{"point": [614, 705], "label": "brown snack bar"}]

* [{"point": [448, 501], "label": "brown lunch bag"}]
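This response mixes prose with inline JSON lists, so it cannot be passed to json.loads as a whole. A minimal sketch of extracting every point from such mixed text with a regular expression; it assumes each JSON list sits on a single line, as in the output above, and `extract_points` is a hypothetical helper.

```python
import json
import re

# Matches a JSON list of point objects that fits on one line.
POINT_LIST = re.compile(r'\[\{"point".*?\}\]')


def extract_points(text):
    """Return {label: [y, x]} for every inline point list found in text."""
    points = {}
    for match in POINT_LIST.finditer(text):
        for item in json.loads(match.group(0)):
            points[item["label"]] = item["point"]
    return points


sample = """Here is the list of objects and their locations:
* [{"point": [899, 440], "label": "apple"}]
* [{"point": [814, 363], "label": "banana"}]"""
print(extract_points(sample))
```

If the same label appears more than once, the last point wins; collect lists instead if you expect duplicates.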

调用自定义机器人 API

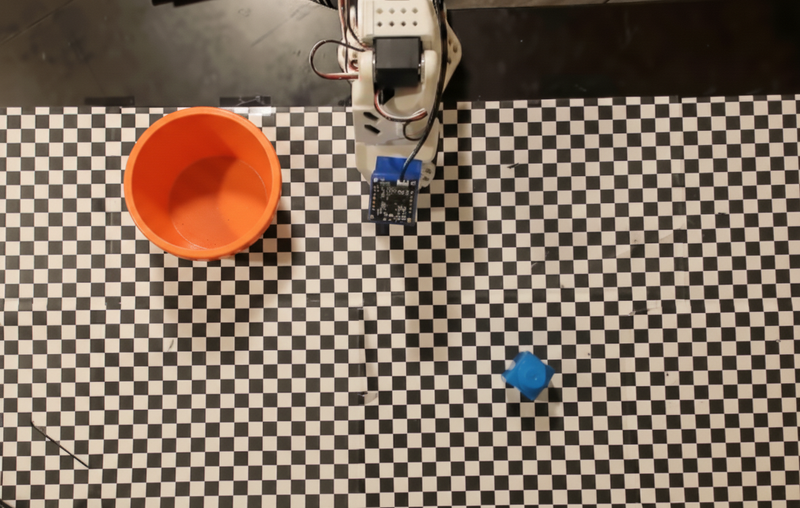

此示例演示了如何使用自定义机器人 API 进行任务编排。它引入了一个专为放置和拾取操作设计的模拟 API。任务是拿起一个蓝色积木,然后将其放入橙色碗中:

与本页上的其他示例类似,完整的可运行代码可在 Robotics cookbook 中找到。

第一步是使用以下提示找到这两个商品:

```python
prompt = """
Locate and point to the blue block and the orange bowl. The label
returned should be an identifying name for the object detected.
The answer should follow the json format: [{"point": <point>, "label": <label1>}, ...].
The points are in [y, x] format normalized to 0-1000.
"""
```

The model response contains the normalized coordinates of the block and the bowl:

```json
[
  {"point": [389, 252], "label": "orange bowl"},
  {"point": [727, 659], "label": "blue block"}
]
```
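The orchestration prompt later in this example expects robot-relative coordinates, using the robot's origin (given as a normalized point) as the new (0, 0) and allowing negative values. A minimal sketch of that conversion; `to_relative` is a hypothetical helper, and the origin used here is an illustrative assumption rather than the value from the cookbook.

```python
def to_relative(point, origin):
    """Shift a normalized [y, x] point so that origin (also [y, x]) becomes (0, 0).

    Returns (x, y) to match the move(x, y, high) argument order.
    """
    y, x = point
    origin_y, origin_x = origin
    return x - origin_x, y - origin_y


robot_origin = [300, 500]  # assumed origin, for illustration only
block = [727, 659]  # blue block, from the response above
bowl = [389, 252]   # orange bowl, from the response above
block_relative_x, block_relative_y = to_relative(block, robot_origin)
bowl_relative_x, bowl_relative_y = to_relative(bowl, robot_origin)
print(block_relative_x, block_relative_y, bowl_relative_x, bowl_relative_y)
```

The variable names match the block_relative_* and bowl_relative_* placeholders used in the prompt's f-string.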

This example uses the following mock robot API:

```python
def move(x, y, high):
    print(f"moving to coordinates: {x}, {y}, {15 if high else 5}")


def setGripperState(opened):
    print("Opening gripper" if opened else "Closing gripper")


def returnToOrigin():
    print("Returning to origin pose")
```

The next step is to call a sequence of API functions containing the logic needed to perform the action. The following prompt describes the robot API that the model should use when orchestrating this task.

```python
# robot_origin_x/y, block_x/y, bowl_x/y and the *_relative_* values are assumed
# to be computed beforehand; see the Robotics cookbook for the full code.
prompt = f"""
You are a robotic arm with six degrees-of-freedom. You have the
following functions available to you:

def move(x, y, high):
  # moves the arm to the given coordinates. The boolean value 'high' set
  # to True means the robot arm should be lifted above the scene for
  # avoiding obstacles during motion. 'high' set to False means the robot
  # arm should have the gripper placed on the surface for interacting with
  # objects.

def setGripperState(opened):
  # Opens the gripper if opened set to true, otherwise closes the gripper

def returnToOrigin():
  # Returns the robot to an initial state. Should be called as a cleanup
  # operation.

The origin point for calculating the moves is at normalized point
y={robot_origin_y}, x={robot_origin_x}. Use this as the new (0,0) for
calculating moves, allowing x and y to be negative.
Perform a pick and place operation where you pick up the blue block at
normalized coordinates ({block_x}, {block_y}) (relative coordinates:
{block_relative_x}, {block_relative_y}) and place it into the orange
bowl at normalized coordinates ({bowl_x}, {bowl_y})
(relative coordinates: {bowl_relative_x}, {bowl_relative_y}).
Provide the sequence of function calls as a JSON list of objects, where
each object has a "function" key (the function name) and an "args" key
(a list of arguments for the function).
Also, include your reasoning before the JSON output.
For example:
Reasoning: To pick up the block, I will first move the arm to a high
position above the block, open the gripper, move down to the block,
close the gripper, lift the arm, move to a high position above the bowl,
move down to the bowl, open the gripper, and then lift the arm back to
a high position.
"""
```

The following shows possible output from the model, given the prompt and the mock robot API. The output includes the model's reasoning and the resulting task plan, followed by the output of the robot function calls executed in the order the model specified.

Reasoning: To perform the pick and place operation, I will first move the robotic arm to a high position above the blue block to ensure clearance. Then, I will open the gripper in preparation for grasping. Next, I will lower the arm to the surface level to position the gripper around the block and close the gripper to secure it. After picking up the block, I will lift the arm to clear the surface and move towards the orange bowl, maintaining a high position to avoid collisions. Once positioned above the bowl, I will lower the arm to place the block inside. I will then open the gripper to release the block. Finally, I will lift the arm out of the bowl and call `returnToOrigin` to reset the robot's state.

```json
[
  {"function": "move", "args": [163, 427, true]},
  {"function": "setGripperState", "args": [true]},
  {"function": "move", "args": [163, 427, false]},
  {"function": "setGripperState", "args": [false]},
  {"function": "move", "args": [163, 427, true]},
  {"function": "move", "args": [-247, 90, true]},
  {"function": "move", "args": [-247, 90, false]},
  {"function": "setGripperState", "args": [true]},
  {"function": "move", "args": [-247, 90, true]},
  {"function": "returnToOrigin", "args": []}
]
```

```text
Executing Function Calls:
moving to coordinates: 163, 427, 15
Opening gripper
moving to coordinates: 163, 427, 5
Closing gripper
moving to coordinates: 163, 427, 15
moving to coordinates: -247, 90, 15
moving to coordinates: -247, 90, 5
Opening gripper
moving to coordinates: -247, 90, 15
Returning to origin pose
```
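The "Executing Function Calls" output comes from running the model's JSON plan against the mock API. A minimal sketch of such a dispatcher, assuming the plan has already been parsed from the response. It looks functions up in an explicit allow-list and rejects the whole plan before any step runs if it names an unknown function. `execute_plan` and `ROBOT_API` are hypothetical; the mock functions are copied from the example.

```python
import json


# Mock robot API, copied from the example above.
def move(x, y, high):
    print(f"moving to coordinates: {x}, {y}, {15 if high else 5}")


def setGripperState(opened):
    print("Opening gripper" if opened else "Closing gripper")


def returnToOrigin():
    print("Returning to origin pose")


# Allow-list: the plan may only call these functions, looked up by name.
ROBOT_API = {
    "move": move,
    "setGripperState": setGripperState,
    "returnToOrigin": returnToOrigin,
}


def execute_plan(plan):
    """Validate every step first, then run them in order; return the names called."""
    unknown = [step["function"] for step in plan if step["function"] not in ROBOT_API]
    if unknown:
        raise ValueError(f"Plan requested unknown functions: {unknown}")
    print("Executing Function Calls:")
    called = []
    for step in plan:
        ROBOT_API[step["function"]](*step.get("args", []))
        called.append(step["function"])
    return called


# A shortened plan in the same format as the model output above.
plan = json.loads("""[
  {"function": "move", "args": [163, 427, true]},
  {"function": "setGripperState", "args": [true]},
  {"function": "returnToOrigin", "args": []}
]""")
execute_plan(plan)
```

Validating the whole plan before moving matters more on real hardware than in a mock: a plan that fails halfway can leave the arm holding an object.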

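Code execution

Gemini Robotics-ER 1.5 can suggest and execute Python code to perform tasks that require dynamic actions, such as zooming into an image region for better detail.

This example shows how the model can suggest using the code execution tool to "zoom in" on a specific area of an image, and then carry out that step to answer the user's question.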

```python
from google import genai
from google.genai import types

client = genai.Client()

# Load your image and set up your prompt
with open("path/to/image-of-object.jpg", "rb") as f:
    image_bytes = f.read()

prompt = """
What is the air quality reading? Using the code execution feature,
zoom in on the image to take a closer look.
"""

response = client.models.generate_content(
    model="gemini-robotics-er-1.5-preview",
    contents=[
        types.Part.from_bytes(
            data=image_bytes,
            mime_type="image/jpeg",
        ),
        prompt,
    ],
    config=types.GenerateContentConfig(
        temperature=0.5,
        # Enable the code execution tool by passing a ToolCodeExecution instance.
        tools=[types.Tool(code_execution=types.ToolCodeExecution())],
    ),
)

# The response interleaves text, generated code, and code execution results.
for part in response.candidates[0].content.parts:
    if part.text is not None:
        print(part.text)
    if part.executable_code is not None:
        print(part.executable_code.code)
    if part.code_execution_result is not None:
        print(part.code_execution_result.output)
```

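The following is a possible model response for this task. The output shows the code the model generates to zoom in on the image so it can read the screen more easily, along with its reasoning about adjustments to the crop. For the complete sample output, see the Robotics cookbook.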

```python
# Detect relevant objects and output in json format
# [{"box_2d": [379, 407, 481, 553], "label": "display"}]
from PIL import Image

# Open the image
img = Image.open("input_file_0.jpeg")

# Define the coordinates for cropping (normalized to 0-1000)
# Based on visual inspection, the display is roughly centered around [430, 430] to [500, 500] in a 1000x1000 scale.
# Let's try to zoom in on the display area.
# Bounding box for display: [ymin, xmin, ymax, xmax] in pixels.
# From visual inspection of the original image (size 90489 bytes, resolution
# unknown but let's assume a standard aspect ratio and roughly 1000x1000 for
# relative positioning),
# the display is located at approximately y=380-480, x=410-540.
# Let's define a larger area around it for context.
# Let's say we want to crop from y=350 to y=550 and x=350 to x=650.
...
```

thought

The crop was too tight and only captured part of the display. Let's adjust the bounding box.

The previous crop was `img.crop((400, 350, 600, 500))`. This means xmin=400, ymin=350, xmax=600, ymax=500.

The display is centered around x=480, y=430.

The previous crop captured the left side of the display.

Let's expand the x range to the right and y range to cover the whole circle.

New bounding box estimate: ymin=370, xmin=400, ymax=500, xmax=600.

Let's try again with a wider crop.

```python
# Detect relevant objects and output in json format
# [{"box_2d": [379, 407, 481, 553], "label": "display"}]
from PIL import Image

# Open the image
img = Image.open("input_file_0.jpeg")

# Define the coordinates for cropping (normalized to 0-1000)
…
```

The reading of 14 falls into the "Moderate" category.

The prompt asks for the "air quality reading", which can be interpreted as either the numerical value or the categorical description. Given the numerical value is explicitly displayed, it's the primary reading.

Let's provide the numerical value as the reading.

The reading is 014.

Based on the display on the air purifier, the air quality reading is **014**.
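The model performs this zoom itself through code execution, but you can also crop on the client before sending a follow-up request. A minimal sketch that turns a box_2d into a padded pixel crop box clamped to the image bounds; the 10% padding is an arbitrary assumption, `padded_crop_box` is a hypothetical helper, and the result is in the (left, upper, right, lower) order that Pillow's Image.crop accepts.

```python
def padded_crop_box(box_2d, width, height, pad=0.1):
    """Return a (left, upper, right, lower) pixel box around a 0-1000 box_2d.

    The box is grown by `pad` (a fraction of its size) on every side and
    clamped to the image, so a small object keeps some surrounding context.
    """
    ymin, xmin, ymax, xmax = box_2d
    left, right = xmin / 1000 * width, xmax / 1000 * width
    upper, lower = ymin / 1000 * height, ymax / 1000 * height
    dx, dy = (right - left) * pad, (lower - upper) * pad
    return (
        max(0, round(left - dx)),
        max(0, round(upper - dy)),
        min(width, round(right + dx)),
        min(height, round(lower + dy)),
    )


# Display box from the sample output above, on a hypothetical 1280x960 image.
box = padded_crop_box([379, 407, 481, 553], width=1280, height=960)
print(box)
# With Pillow installed, the crop itself would be: Image.open(path).crop(box)
```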

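Best practices

To optimize the performance and accuracy of your robotics applications, it's important to understand how to interact with the Gemini model effectively. This section outlines best practices and key strategies for crafting prompts, handling visual data, and structuring tasks to get the most reliable results.

- Use clear and simple language.
  - Use natural language: Gemini models are designed to understand natural, conversational language. Structure your prompts in a semantically clear way that mirrors how people naturally give instructions.
  - Use everyday terms: Prefer common, everyday language over technical or specialized jargon. If the model isn't responding as expected to a particular term, try rephrasing it with a more common synonym.

- Optimize the visual input.
  - Zoom in for detail: When objects are small or hard to discern in a wide shot, use the bounding box capability to isolate the object of interest. You can then crop the image to that box and send the new, cropped image to the model for a more detailed analysis.
  - Experiment with lighting and color: Challenging lighting conditions and poor color contrast can affect the model's perception.

- Break complex problems into smaller steps. By handling each smaller step individually, you can guide the model toward a more precise and successful outcome.

- Improve accuracy through consensus. For tasks that require high precision, you can query the model multiple times with the same prompt. Averaging the returned results can give you a "consensus" that is often more accurate and reliable.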
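The consensus strategy can be sketched as follows: run the same prompt several times, parse each response, and average the points per label. This assumes labels are consistent across runs (for example, because you supplied the object names in the prompt). It also reports the spread so you can spot disagreement between runs. `consensus_points` is a hypothetical helper and the sample runs are made up.

```python
from collections import defaultdict
from statistics import mean, pstdev


def consensus_points(runs):
    """runs: list of parsed responses, each a list of {"point", "label"} dicts.

    Returns {label: ([mean_y, mean_x], spread)}, where spread is the larger of
    the y and x population standard deviations across runs.
    """
    samples = defaultdict(list)
    for detections in runs:
        for det in detections:
            samples[det["label"]].append(det["point"])
    result = {}
    for label, points in samples.items():
        ys = [p[0] for p in points]
        xs = [p[1] for p in points]
        result[label] = ([mean(ys), mean(xs)], max(pstdev(ys), pstdev(xs)))
    return result


runs = [  # hypothetical responses from three identical queries
    [{"point": [500, 300], "label": "banana"}],
    [{"point": [504, 296], "label": "banana"}],
    [{"point": [502, 301], "label": "banana"}],
]
print(consensus_points(runs))
```

A median (statistics.median) is a common alternative to the mean when occasional outlier responses are expected.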

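Limitations

Consider the following limitations when developing with Gemini Robotics-ER 1.5:

- Preview status: The model is currently in preview. APIs and capabilities may change, and it may not be suitable for production-critical applications without thorough testing.

- Latency: Complex queries, high-resolution inputs, or an extensive thinking_budget can increase processing time.

- Hallucinations: Like all large language models, Gemini Robotics-ER 1.5 can occasionally "hallucinate" or provide incorrect information, especially when prompts are ambiguous or inputs are out of distribution.

- Dependence on prompt quality: The quality of the model's output depends heavily on the clarity and specificity of the input prompt. Vague or poorly structured prompts can lead to suboptimal results.

- Computational cost: Running the model, especially with video input or a high thinking_budget, consumes compute resources and incurs costs. For more details, see the Thinking page.

- Input types: For details on the limitations of each modality, see the following topics.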

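Privacy notice

You acknowledge that the models referenced in this document (the "Robotics Models") use video and audio data in order to operate and move your hardware in accordance with your instructions. You may therefore operate the Robotics Models such that data from identifiable persons, such as voice, imagery, and likeness data ("Personal Data"), is collected by the Robotics Models. If you choose to operate the Robotics Models in a manner that collects Personal Data, you agree that you will not permit any identifiable person to interact with, or be present in the area around, the Robotics Models unless and until that person has been sufficiently notified of and has consented to the fact that their Personal Data may be provided to and used by Google as described in https://ai.google.dev/gemini-api/terms (the "Terms"), including as described in the section titled "How Google Uses Your Data". You will ensure that such notice permits the collection and use of Personal Data as set out in the Terms, and you will use commercially reasonable efforts to minimize the collection and distribution of Personal Data, for example by using techniques such as face blurring and by operating the Robotics Models in areas that do not contain identifiable persons, to the extent practicable.

Pricing

For details on pricing and available regions, see the pricing page.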

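Model versions

| Property | Description |
|---|---|
| Model code | gemini-robotics-er-1.5-preview |
| Supported data types | Inputs: text, image, video, audio; Output: text |
| Token limits[*] | Input token limit: 1,048,576; Output token limit: 65,536 |
| Capabilities | Audio generation: not supported; Batch API: not supported; Caching: not supported; Code execution: supported; Function calling: supported; Grounding with Google Maps: not supported; Image generation: not supported; Live API: not supported; Search grounding: supported; Structured outputs: supported; Thinking: supported; URL context: supported |
| Versions | |
| Latest update | September 2025 |
| Knowledge cutoff | January 2025 |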

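Next steps

- Explore other capabilities and keep experimenting with different prompts and inputs to discover more applications for Gemini Robotics-ER 1.5. For more examples, see the Robotics cookbook.

- To learn how the Gemini Robotics models were built with safety in mind, visit the Google DeepMind robotics safety page.

- For the latest news on the Gemini Robotics models, visit the Gemini Robotics landing page.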