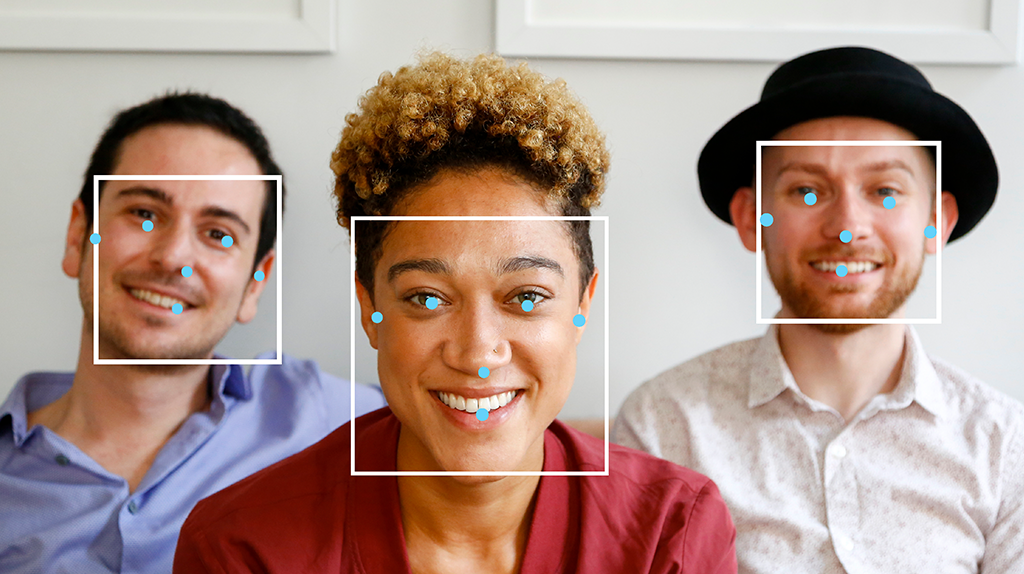

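The MediaPipe Face Detector task lets you detect faces in an image or video. You can use this task to locate faces and facial features within a frame. The task uses a machine learning (ML) model that works with single images or a continuous stream of images. It outputs face locations, along with the following facial keypoints: left eye, right eye, nose tip, mouth, left ear tragion, and right ear tragion.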

Get started

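To start using this task, follow one of these implementation guides for your target platform. These platform-specific guides walk you through a basic implementation of this task, including a recommended model and a code example with recommended configuration options: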

Task details

This section describes the capabilities, inputs, outputs, and configuration options of this task.

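Features

- Input image processing: processing includes image rotation, resizing, normalization, and color space conversion.

- Score threshold: filter results based on prediction scores.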
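The four input-processing steps listed above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not MediaPipe's implementation: the 128 x 128 target size comes from the model tables on this page, while the BGR input channel order, nearest-neighbor resizing, and the [-1, 1] normalization range are assumptions chosen for the example. All function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # three 8-bit channel values
Image = List[List[Pixel]]     # list of rows, each a list of pixels


def bgr_to_rgb(image: Image) -> Image:
    """Color space conversion: reverse the channel order of every pixel."""
    return [[(p[2], p[1], p[0]) for p in row] for row in image]


def rotate_90_clockwise(image: Image) -> Image:
    """Rotate the image a quarter turn clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def resize_nearest(image: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbor resize to out_h rows by out_w columns."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(image: Image) -> List[List[Tuple[float, ...]]]:
    """Map each 0-255 channel value linearly onto [-1.0, 1.0] (assumed range)."""
    return [[tuple(c / 127.5 - 1.0 for c in p) for p in row] for row in image]


def preprocess(bgr_image: Image, size: int = 128, quarter_turns: int = 0):
    """Convert color, rotate, resize to size x size, then normalize."""
    image = bgr_to_rgb(bgr_image)
    for _ in range(quarter_turns % 4):
        image = rotate_90_clockwise(image)
    image = resize_nearest(image, size, size)
    return normalize(image)
```

For example, a small all-red BGR image comes out as a 128 x 128 grid in which every pixel is `(1.0, -1.0, -1.0)`. A production pipeline would typically use bilinear resizing and letterboxing to preserve aspect ratio; nearest-neighbor keeps the sketch short.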

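| Task inputs | Task outputs |
|---|---|
| The Face Detector accepts an input of one of the following data types: still images, decoded video frames, or a live video feed. | The Face Detector outputs the following results: the location (bounding box) of each face detected in an image frame, and the coordinates of six face keypoints for each detected face. |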
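One way to picture the per-face output is as a small record holding a box, a score, and six keypoints. The sketch below is hypothetical: the class names, field names, and the keypoint order in `KEYPOINT_NAMES` are assumptions for illustration and are not the MediaPipe result types. It shows how normalized keypoint coordinates map back to pixels.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical names for the six keypoints, in an assumed order.
KEYPOINT_NAMES = (
    "right_eye",
    "left_eye",
    "nose_tip",
    "mouth",
    "right_ear_tragion",
    "left_ear_tragion",
)


@dataclass
class BoundingBox:
    """Axis-aligned face box in pixel coordinates (hypothetical layout)."""
    origin_x: int  # left edge
    origin_y: int  # top edge
    width: int
    height: int


@dataclass
class FaceDetection:
    """One detected face: a box, a confidence score, and six keypoints."""
    box: BoundingBox
    score: float
    keypoints: List[Tuple[float, float]]  # (x, y) pairs normalized to [0, 1]

    def __post_init__(self) -> None:
        # Every face in this sketch must carry exactly six keypoints.
        if len(self.keypoints) != len(KEYPOINT_NAMES):
            raise ValueError(
                f"expected {len(KEYPOINT_NAMES)} keypoints, got {len(self.keypoints)}"
            )

    def keypoints_in_pixels(
        self, image_width: int, image_height: int
    ) -> Dict[str, Tuple[int, int]]:
        """Scale normalized keypoints to pixel coordinates, keyed by name."""
        return {
            name: (round(x * image_width), round(y * image_height))
            for name, (x, y) in zip(KEYPOINT_NAMES, self.keypoints)
        }
```

Storing keypoints normalized to [0, 1] keeps them independent of the input resolution; converting to pixels happens only when drawing or cropping.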

Configuration options

This task has the following configuration options:

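| Option name | Description | Value range | Default value |
|---|---|---|---|
| running_mode | Sets the running mode for the task. There are three modes: IMAGE: the mode for single image inputs. VIDEO: the mode for decoded frames of a video. LIVE_STREAM: the mode for a live stream of input data, such as from a camera. In this mode, resultListener must be called to set up a listener that receives results asynchronously. | {IMAGE, VIDEO, LIVE_STREAM} | IMAGE |
| min_detection_confidence | The minimum confidence score for a face detection to be considered successful. | Float [0,1] | 0.5 |
| min_suppression_threshold | The minimum non-maximum-suppression threshold for face detections to be considered overlapping. | Float [0,1] | 0.3 |
| result_callback | Sets the result listener to receive detection results asynchronously when the Face Detector is in live stream mode. Can only be used when the running mode is set to LIVE_STREAM. | N/A | Not set |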
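The two threshold options interact: detections are first filtered by score, and the survivors are then de-duplicated by overlap. The sketch below shows that idea with plain greedy intersection-over-union (IoU) non-maximum suppression, using the default values from the table. It is an illustration only; the function names are hypothetical, and the suppression variant MediaPipe actually uses may differ from this greedy version.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_and_suppress(
    detections: List[Detection],
    min_detection_confidence: float = 0.5,
    min_suppression_threshold: float = 0.3,
) -> List[Detection]:
    """Drop low-score detections, then run greedy IoU non-maximum suppression."""
    # 1. Score threshold: keep only detections at or above the minimum confidence.
    candidates = [d for d in detections if d[1] >= min_detection_confidence]
    # 2. Visit the remaining detections from highest to lowest score.
    candidates.sort(key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for box, score in candidates:
        # A box whose IoU with an already-kept box reaches the threshold counts
        # as overlapping that box and is suppressed.
        if all(iou(box, kept_box) < min_suppression_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept
```

With the defaults, a 0.8-score box that overlaps a 0.9-score box with IoU of about 0.68 is suppressed, a non-overlapping 0.7-score box is kept, and a 0.4-score box never reaches the suppression step. Raising min_suppression_threshold lets more closely overlapping faces survive.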

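Models

Face detection models can vary depending on their intended use case, such as short-range or long-range detection. Models also typically trade off performance, accuracy, resolution, and resource requirements, and in some cases include additional features.

The models listed in this section are variants of BlazeFace, a lightweight and accurate face detector optimized for mobile GPU inference. BlazeFace models are suitable for applications such as 3D facial keypoint estimation, expression classification, and face region segmentation. BlazeFace uses a lightweight feature extraction network similar to MobileNetV1/V2.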
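Much of the "lightweight" in MobileNet-style feature extractors comes from depthwise separable convolutions, which replace one full k x k convolution with a per-channel k x k filter followed by a 1 x 1 pointwise convolution. The arithmetic below counts weights for both layer types (biases ignored). It illustrates the general MobileNet technique; the exact layer configuration inside BlazeFace is not described on this page.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in channels to c_out channels."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    for k in (3, 5):
        full = standard_conv_params(k, 32, 64)
        separable = depthwise_separable_params(k, 32, 64)
        print(f"k={k}: standard={full}, separable={separable}, ratio={full / separable:.1f}x")
```

For a 3 x 3 layer from 32 to 64 channels, this gives 18,432 weights for the standard convolution versus 2,336 for the separable one. Because the depthwise term grows only with c_in, enlarging the kernel is comparatively cheap in the separable form.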

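BlazeFace (short-range)

A lightweight model for detecting single or multiple faces within selfie-like images from a smartphone camera or webcam. The model is optimized for short-range images taken with a front-facing phone camera. The model architecture uses a Single Shot Detector (SSD) convolutional network technique with a custom encoder. For more information, see the research paper on the Single Shot MultiBox Detector.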
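An SSD-style detector predicts scores and box offsets for a fixed set of anchors laid out on one or more feature-map grids. The sketch below generates anchor centers and decodes one predicted box. The layout used in the usage note (two anchors per cell on a 16 x 16 grid and six per cell on an 8 x 8 grid for a 128 x 128 input) and the offset encoding in decode_box are assumptions about the short-range model, not taken from this page; both function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def generate_anchor_centers(
    input_size: int, strides: List[int], anchors_per_cell: List[int]
) -> List[Point]:
    """Return one normalized (x, y) center per anchor, row-major per feature map."""
    centers: List[Point] = []
    for stride, count in zip(strides, anchors_per_cell):
        grid = input_size // stride  # feature-map cells along each side
        for row in range(grid):
            for col in range(grid):
                center = ((col + 0.5) / grid, (row + 0.5) / grid)
                centers.extend([center] * count)
    return centers


def decode_box(
    anchor: Point, offsets: Tuple[float, float, float, float], input_size: int
) -> Tuple[float, float, float, float]:
    """Turn (dx, dy, w, h) predicted in input pixels into a normalized
    (x_min, y_min, x_max, y_max) box centered relative to the anchor."""
    dx, dy, w, h = (v / input_size for v in offsets)
    cx, cy = anchor[0] + dx, anchor[1] + dy
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
```

Under the assumed layout, generate_anchor_centers(128, [8, 16], [2, 6]) yields 512 + 384 = 896 anchors, and each anchor's raw offsets would be decoded with decode_box before score filtering and suppression.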

| Model name | Input shape | Quantization type | Model card | Versions |
|---|---|---|---|---|
| BlazeFace (short-range) | 128 x 128 | float 16 | info | Latest |

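BlazeFace (full-range)

A relatively lightweight model for detecting single or multiple faces within images from a smartphone camera or webcam. The model is optimized for full-range images, such as those taken with a back-facing phone camera. The model architecture uses a technique similar to a CenterNet convolutional network, with a custom encoder.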
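CenterNet-style detectors predict a heatmap of object centers and read detections off as local maxima, which avoids anchor boxes entirely. The sketch below extracts peaks from a small heatmap by keeping cells that clear a threshold and are not exceeded by any of their 8 neighbors (equivalent to comparing against a 3 x 3 max pool). It illustrates the general CenterNet idea only; the full-range model's actual decoder, including box-size regression, is not described here, and heatmap_peaks is a hypothetical name.

```python
from typing import List, Tuple


def heatmap_peaks(
    heatmap: List[List[float]], threshold: float
) -> List[Tuple[int, int, float]]:
    """Return (row, col, score) for each local maximum at or above threshold,
    sorted from highest to lowest score."""
    h, w = len(heatmap), len(heatmap[0])
    peaks = []
    for y in range(h):
        for x in range(w):
            v = heatmap[y][x]
            if v < threshold:
                continue
            # 3 x 3 neighborhood, clipped at the heatmap borders (includes the cell itself).
            neighborhood = [
                heatmap[ny][nx]
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
            ]
            if v >= max(neighborhood):
                peaks.append((y, x, v))
    return sorted(peaks, key=lambda p: p[2], reverse=True)
```

Because a peak only needs to be at least as large as its neighbors, a flat plateau can produce several adjacent peaks; a real decoder would typically follow this with suppression like the filter used for the configuration options.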

| Model name | Input shape | Quantization type | Model card | Versions |
|---|---|---|---|---|
| BlazeFace (full-range) | 128 x 128 | float 16 | info | Coming soon |

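BlazeFace Sparse (full-range)

A lighter version of the regular full-range BlazeFace model, about 60% of the regular model's size. The model is optimized for full-range images, such as those taken with a back-facing phone camera. The model architecture uses a technique similar to a CenterNet convolutional network, with a custom encoder.

| Model name | Input shape | Quantization type | Model card | Versions |
|---|---|---|---|---|
| BlazeFace Sparse (full-range) | 128 x 128 | float 16 | info | Coming soon |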

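Task benchmarks

Here are the task benchmarks for the whole pipeline, based on the pre-trained models above. The latency result is the average latency on a Pixel 6 using CPU / GPU.

| Model name | CPU latency | GPU latency |
|---|---|---|
| BlazeFace (short-range) | 2.94 ms | 7.41 ms |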
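Average-latency figures like these are usually gathered with a warm-up phase followed by many timed runs. The harness below is a minimal sketch of that approach; the page does not say how its numbers were collected, so the warm-up count, run count, and the `infer` callable (a stand-in for one full pipeline call) are assumptions.

```python
import statistics
import time
from typing import Callable


def average_latency_ms(
    infer: Callable[[], object], warmup: int = 5, runs: int = 50
) -> float:
    """Call infer() `warmup` times untimed, then return the mean latency
    in milliseconds over `runs` timed calls."""
    for _ in range(warmup):
        infer()  # warm-up calls absorb one-time costs such as lazy initialization
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples)
```

Warm-up matters especially for GPU delegates, where the first calls can include shader compilation or buffer allocation that would otherwise inflate the average.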