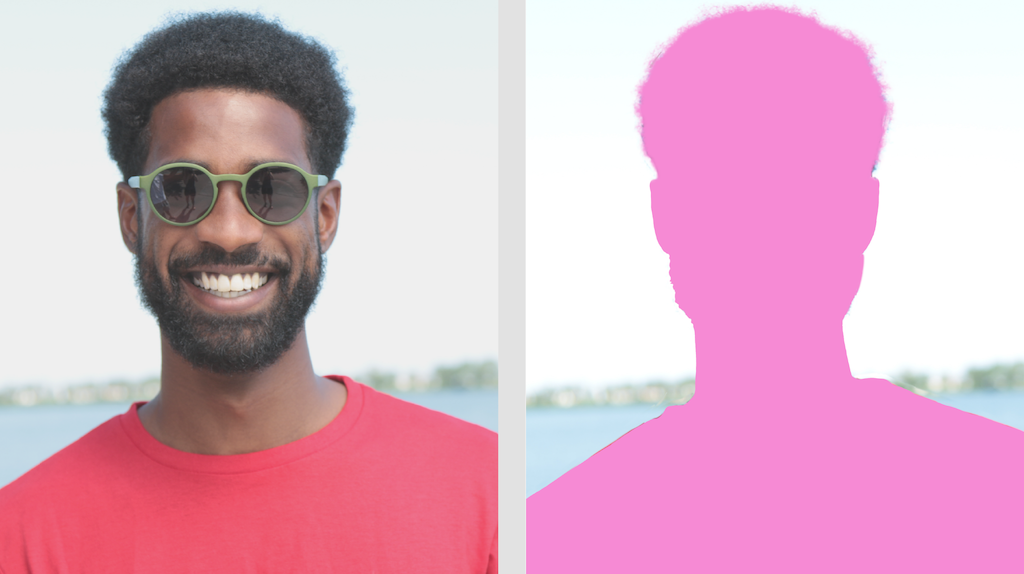

The MediaPipe Image Segmenter task lets you divide images into regions based on predefined categories. You can use this functionality to identify specific objects or textures, and then apply visual effects such as background blurring. This task includes several models specifically trained for segmenting people and their features within image data, including:

- Person and background

- Person's hair only

- Person's hair, face, skin, clothing, and accessories

This task operates on image data with a machine learning (ML) model with single images or a continuous video stream. It outputs a list of segmented regions, representing objects or areas in an image, depending on the model you choose.

Get Started

Start using this task by following one of these implementation guides for your target platform. These platform-specific guides walk you through a basic implementation of this task, including a recommended model, and code example with recommended configuration options:

- Android - Code example - Guide

- Python - Code example Guide

- Web - Code example - Guide

Task details

This section describes the capabilities, inputs, outputs, and configuration options of this task.

Features

- Input image processing - Processing includes image rotation, resizing, normalization, and color space conversion.

| Task inputs | Task outputs |

|---|---|

Input can be one of the following data types:

|

Image Segmenter outputs segmented image data, which can include one or

both of the following, depending on the configuration options you set:

|

Configurations options

This task has the following configuration options:

| Option Name | Description | Value Range | Default Value |

|---|---|---|---|

running_mode |

Sets the running mode for the task. There are three

modes: IMAGE: The mode for single image inputs. VIDEO: The mode for decoded frames of a video. LIVE_STREAM: The mode for a livestream of input data, such as from a camera. In this mode, resultListener must be called to set up a listener to receive results asynchronously. |

{IMAGE, VIDEO, LIVE_STREAM} |

IMAGE |

output_category_mask |

If set to True, the output includes a segmentation mask

as a uint8 image, where each pixel value indicates the winning category

value. |

{True, False} |

False |

output_confidence_masks |

If set to True, the output includes a segmentation mask

as a float value image, where each float value represents the confidence

score map of the category. |

{True, False} |

True |

display_names_locale |

Sets the language of labels to use for display names provided in the

metadata of the task's model, if available. Default is en for

English. You can add localized labels to the metadata of a custom model

using the TensorFlow Lite Metadata Writer API |

Locale code | en |

result_callback |

Sets the result listener to receive the segmentation results

asynchronously when the image segmenter is in the LIVE_STREAM mode.

Can only be used when running mode is set to LIVE_STREAM |

N/A | N/A |

Models

The Image Segmenter can be used with more than one ML model. Most of the following segmentation models are built and trained to perform segmentation with images of people. However, the DeepLab-v3 model is built as a general purpose image segmenter. Select the model that fits best for your application.

Selfie segmentation model

This model can segment the portrait of a person, and can be used for replacing or modifying the background in an image. The model output two categories, background at index 0 and person at index 1. This model has versions with different input shapes including square version and a landscape version which may be more efficient for applications where the input is always that shape, such as video calls.

| Model name | Input shape | Quantization type | Model Card | Versions |

|---|---|---|---|---|

| SelfieSegmenter (square) | 256 x 256 | float 16 | info | Latest |

| SelfieSegmenter (landscape) | 144 x 256 | float 16 | info | Latest |

Hair segmentation model

This model takes an image of a person, locates the hair on their head, and outputs a image segmentation map for their hair. You can use this model for recoloring hair or applying other hair effects. The model outputs the following segmentation categories:

0 - background

1 - hair

| Model name | Input shape | Quantization type | Model Card | Versions |

|---|---|---|---|---|

| HairSegmenter | 512 x 512 | None (float32) | info | Latest |

Multi-class selfie segmentation model

This model takes an image of a person, locates areas for different areas such as hair, skin, and clothing, and outputs an image segmentation map for these items. You can use this model for applying various effects to people in images or video. The model outputs the following segmentation categories:

0 - background

1 - hair

2 - body-skin

3 - face-skin

4 - clothes

5 - others (accessories)

| Model name | Input shape | Quantization type | Model Card | Versions |

|---|---|---|---|---|

| SelfieMulticlass (256 x 256) | 256 x 256 | None (float32) | info | Latest |

DeepLab-v3 model

This model identifies segments for a number of categories, including background, person, cat, dog, and potted plant. The model uses atrous spatial pyramid pooling to capture longer range information. For more information, see DeepLab-v3.

| Model name | Input shape | Quantization type | Versions |

|---|---|---|---|

| DeepLab-V3 | 257 x 257 | None (float32) | Latest |

Task benchmarks

Here's the task benchmarks for the whole pipeline based on the above pre-trained models. The latency result is the average latency on Pixel 6 using CPU / GPU.

| Model Name | CPU Latency | GPU Latency |

|---|---|---|

| SelfieSegmenter (square) | 33.46ms | 35.15ms |

| SelfieSegmenter (landscape) | 34.19ms | 33.55ms |

| HairSegmenter | 57.90ms | 52.14ms |

| SelfieMulticlass (256 x 256) | 217.76ms | 71.24ms |

| DeepLab-V3 | 123.93ms | 103.30ms |